Good morning. It’s Monday, January 8th.

Did you know: The iPod Mini turns 20 years old this week?

In Partnership with Wirestock

In today’s email:

AI in Scientific and Medical Advancements

AI in Consumer Technology and Entertainment

AI Tools and Frameworks in the Tech Industry

AI Ethics, Security, and Governance

5 New AI Tools

Latest AI Research Papers

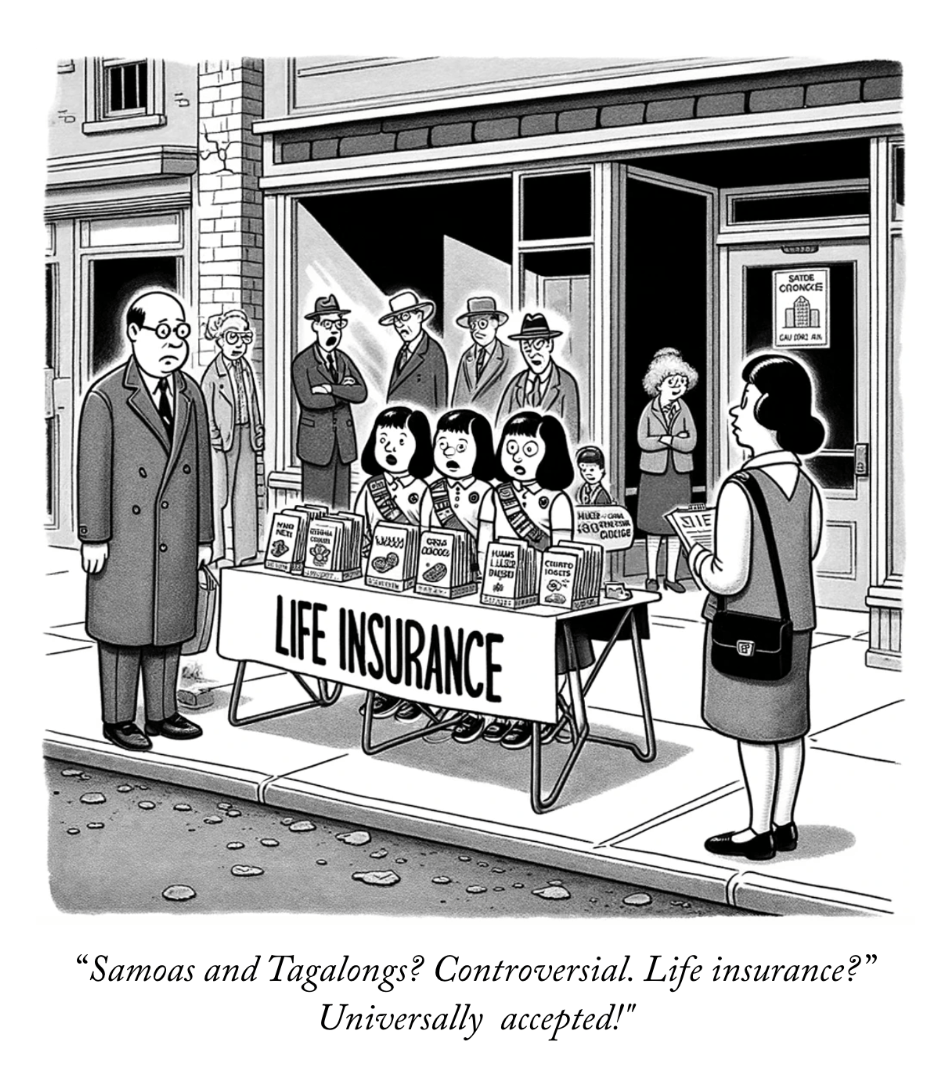

ChatGPT Attempts Comics

You read. We listen. Let us know what you think by replying to this email.

Interested in reaching 47,811 smart readers like you? To become an AI Breakfast sponsor, apply here.

Today’s trending AI news stories

AI in Scientific and Medical Advancements

> The Frontier supercomputer, housed at the Oak Ridge National Laboratory and backed by the Department of Energy, has executed a 1 trillion parameter Large Language Model (LLM), rivaling advanced AI models like GPT-4. Powered by AMD’s 3rd Gen EPYC “Trento” CPUs and Instinct MI250X AI GPU accelerators, Frontier boasts 8,699,904 cores and achieves 1.194 Exaflop/s. Its dominance in the Top500.org list is credited to its HPE Cray EX architecture and Slingshot-11 interconnect. The LLM training involved hyperparameter tuning and optimization on 3,000 Instinct MI250X AI GPU accelerators, a fraction of Frontier’s 37,000 total, hinting at further breakthroughs.

> Isomorphic Labs, a London-based spin-out of Google AI’s DeepMind, announced strategic partnerships with pharmaceutical giants Eli Lilly and Novartis. The collaboration, worth around $3 billion, focuses on AI-driven drug discovery for treating diseases. Isomorphic will receive $45 million upfront from Eli Lilly, with potential earnings up to $1.7 billion based on performance milestones, excluding royalties. Novartis will contribute $37.5 million upfront, fund select research costs, and offer up to $1.2 billion in performance-based incentives. The agreements leverage Isomorphic’s use of DeepMind’s AlphaFold 2 AI technology for predicting the structures of proteins in the human body. Despite its imperfections, AlphaFold’s capacity for accurate protein structure predictions represents an advance in drug discovery.

> China’s Ministry of Science and Technology has introduced guidelines for ethical generative AI use in scientific research. These guidelines emphasize the need for ethics, safety, and transparency in research practices. Key provisions include prohibiting AI from being listed as a co-author and mandating clear identification of AI-generated content in scientific texts. The guidelines also address intellectual property and authorship concerns, requiring AI-generated content to be labeled and not treated as original literature.

AI in Consumer Technology and Entertainment

> CES 2024 begins tomorrow in Las Vegas, focusing on the latest tech announcements and trends. Key events include press conferences from tech giants like AMD, NVIDIA, LG, and Samsung, with a strong emphasis on AI innovations and new product releases. Highlights before the official start include Samsung’s transparent MicroLED screen, the AI-based Flappie cat door, innovative home entertainment solutions, and Samsung’s AI-driven TV lineup featuring glare-free OLEDs. Other notable mentions include a smart bidet seat by Kohler, Xreal’s affordable AR glasses, and Withings’ all-in-one health device BeamO. CES 2024 spotlights the growing integration of AI in consumer technology, from everyday home appliances to cutting-edge entertainment systems.

> Meta’s GenAI team has introduced Fairy, a video-to-video synthesis model that enhances AI-powered video editing. It offers fast, temporally consistent editing with simple text prompts, like transforming an astronaut into a Yeti. Utilizing cross-frame attention for coherence, Fairy generates 512x384 pixel, 4-second videos in 14 seconds, significantly outperforming previous models. However, Fairy struggles with dynamic environmental effects like rain or fire, which appear static due to its emphasis on visual consistency.

AI Tools and Frameworks in the Tech Industry

> NVIDIA’s NeMo toolkit introduces Parakeet, a series of open-source automatic speech recognition models, surpassing OpenAI’s Whisper v3 in performance. Developed with Suno.ai, these models range from 0.6 to 1.1 billion parameters and excel in transcribing English under varied accents and sound conditions. Trained on 64,000 hours of diverse audio data, Parakeet models, available under the CC BY 4.0 license, demonstrate resilience against non-speech elements like music. They are easy to integrate, and a demo of the most robust model is accessible online.

> Microsoft’s Phi-2, an open-source mini language model, surpasses Google’s Gemini Nano in performance benchmarks. Released under the MIT license, Phi-2 is a 2.7 billion parameter model, notable for its advancements in reasoning and safety. It outperforms larger models, including Meta’s Llama-2-7B, in certain areas. Microsoft has integrated Phi-2 into its Azure AI Model Catalog and introduced “Models as a Service” for easier AI model integration by developers.

AI Ethics, Security, and Governance

> Microsoft executive Dee Templeton has been appointed as a non-voting observer on OpenAI’s board. Templeton, who has over 25 years of experience at Microsoft and currently serves as the Vice President for Technology and Research Partnerships and Operations, has already started participating in board meetings. This move follows a major restructuring of OpenAI’s board, which included reinstating CEO Sam Altman after a brief removal and replacing nearly all directors. The current board consists of Bret Taylor, Larry Summers, and Adam D’Angelo.

> The 2023 Expert Survey on Progress in AI, involving 2,778 AI researchers, highlights the fragmented views within the AI science community regarding the risks and opportunities of AI development. About 35% of respondents favor either a slower or faster development pace than currently observed, with the “much faster” group being three times larger than the “much slower” group. The survey predicts significant advancements in AI by 2028, with milestones like autonomously creating websites and composing music expected to be achieved much earlier than previous estimates. By 2047, there’s a 50% probability that AI systems will surpass human performance in all tasks.

> Billionaire investor Bill Ackman has recently raised concerns about the potential for AI-powered tools to expose plagiarism in academic papers. In a post, he highlighted AI’s ability to efficiently analyze academic work for plagiarism, a task too cumbersome for humans. He suggests that academic publishing will come to require AI review of past works, with implications for academia that include potential faculty firings, donor withdrawals, and litigation over how plagiarism is defined. Ackman’s focus is now on MIT President Sally Kornbluth after targeting the presidents of Harvard and the University of Pennsylvania.

In partnership with WIRESTOCK

From Passion to Profit: Monetize Your AI Art, Photos, Videos, and More

Turn your creative passion into profit by showcasing your skills and selling your creations including AI Art, photos, illustrations and videos.

5 new AI-powered tools from around the web

CamoCopy 2.0 is an advanced AI assistant that prioritizes privacy with encrypted or anonymized user data. Features include faster, more relevant responses, image creation without tracking, and over 1000 helpful prompts. Users can also earn money as an affiliate.

Invstr is an AI-driven investment platform that simplifies market analysis with real-time insights and personalized risk evaluations, making informed investing accessible to all.

Reiki by Web3Go is a platform blending AI, blockchain, and the creator economy. It offers AI agent creation toolkits, on-chain ownership proof, and a marketplace, fostering a community of over 88K members for creative monetization.

Brewed is an AI-powered web development tool that enables building anything from simple web components to complex layouts and landing pages. It is designed to let AI handle the bulk of the work, with manual edits for finishing touches.

VisualVibe AI converts images into stories, generating captions and relevant hashtags that enhance content discoverability. It can also narrate visual journeys.

arXiv is a free online library where researchers share pre-publication papers.

TinyLlama, a compact 1.1B parameter language model by Singapore University of Technology and Design, is pretrained on 1 trillion tokens for enhanced efficiency and performance. Utilizing Llama 2 architecture and community contributions like FlashAttention, it outperforms comparable models in downstream tasks. TinyLlama is open-source, facilitating broader research and application in AI, with its code and model checkpoints available on GitHub. This small, potent model marks an advancement in efficient language processing.

Open-Vocabulary SAM integrates the Segment Anything Model (SAM) and CLIP to create a unified framework for interactive segmentation and recognition. It introduces SAM2CLIP and CLIP2SAM modules for knowledge transfer, significantly improving computational efficiency and performance in recognizing and segmenting about 22,000 classes. This model demonstrates exceptional capabilities in various datasets and detectors, outperforming existing methods in both segmentation and recognition tasks.

DocGraphLM, a collaborative creation by JPMorgan AI Research and Dartmouth College, combines graph neural networks with pre-trained language models. This framework excels in extracting information from complex, visually-rich documents. It employs a joint encoder architecture and a unique link prediction method for effective document graph reconstruction. DocGraphLM demonstrates marked improvements in performance on diverse tasks and datasets, also achieving faster convergence in learning processes, highlighting the benefits of integrating structured graph semantics with language models.

DeepSeek LLM is an open-source project aimed at scaling LLMs with a long-term perspective. It introduces models with 7B and 67B parameters, trained on 2 trillion tokens, focusing on English and Chinese. The project explores scaling laws for optimal model/data scaling, representing model scale by non-embedding FLOPs/token. The DeepSeek LLMs, after supervised fine-tuning (SFT) and direct preference optimization (DPO), excel in benchmarks, especially in code, mathematics, and reasoning. The 67B model even outperforms LLaMA-2 70B and GPT-3.5 in open-ended evaluations, demonstrating potential in language understanding and generation tasks.

The PHEME model, developed by PolyAI Limited and capitalizing on recent progress in neural text-to-speech (TTS) synthesis, targets real-time applications requiring natural, conversational speech. Unlike larger, autoregressive models like VALL-E and SoundStorm, PHEME is more compact and efficiently trained on smaller-scale conversational data. It outperforms the state-of-the-art MQTTS model by offering improved intelligibility and naturalness with reduced model size and faster inference, thus enabling real-time synthesis. PHEME also allows single-speaker specialization using synthetic data, further improving voice quality. Through its compact design, parallel speech generation, and reduced data demands, this model series is a notable advancement in efficient conversational TTS systems.

ChatGPT Attempts Comics

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, apply here.