Good morning. It’s Monday, October 28th.

Did you know: Grand Theft Auto: San Andreas was released 20 years ago?

In today’s email:

AI artist Botto makes $351,600 in sales at Sotheby’s

Google’s Project Jarvis AI to handle browser tasks by December

Meta's NotebookLlama turns text into podcast-style audio

Apple’s Ferret-UI 2 improves multi-device app control

OpenAI won’t release Orion model this year

Yale finds AI learns best with “edge of chaos” data

Vision models struggle with simple visual puzzles

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

In partnership with

Enhance Your Photos with MagicPhoto Pro! Give your photos the magic touch they deserve! Our human experts enhance your photos with AI to deliver jaw-dropping images in record time! No AI prompting skills required!

Our Most Popular Services:

Headshots: Professional, polished, and ready for your resume, LinkedIn, or website

Baby Photos: Transform your baby’s photos into beautiful keepsakes, from holiday to nature, animal and fantasy settings

Pet Photos: Your pets in playful settings that capture their unique personalities

and many more…

Ready to see the magic?

Thank you for supporting our sponsors!

Today’s trending AI news stories

An autonomous AI artist just made $351,600 at Sotheby's

Botto, an autonomous AI artist, has made waves by generating $351,600 in sales at Sotheby’s, establishing a new benchmark in AI art. Since its creation in 2021 by Mario Klingemann and ElevenYellow, Botto has achieved over $4 million in sales, creating artworks independently while a 15,000-member community, BottoDAO, curates them.

The recent exhibition "Exorbitant Stage" showcased six NFT lots that surpassed expectations, highlighting Botto's significant role in redefining artistic authorship. Despite challenges in the NFT market post-2022, Botto's success signals a potential resurgence for AI-generated art and underscores the evolving relationship between human creativity and machine intelligence in the art world. Read more.

Google Preps AI Agent That Takes Over Computers

Google is developing AI technology, under the code name Project Jarvis, that can autonomously complete tasks like web browsing, research, and online shopping directly within a browser. The technology, powered by Google’s upcoming Gemini language model, is anticipated to launch by December.

This agent-based AI aligns with efforts by Google and other companies, such as Anthropic and Microsoft-backed OpenAI, to build models that can interact with computers and perform routine tasks autonomously. Project Jarvis represents Google’s approach to expanding AI’s functional role from passive language processing to active, browser-based task execution.

The development positions Google among leaders exploring computer-using agents, capable of navigating and taking actions based on real-time information without user intervention. Google has yet to release a public comment regarding Project Jarvis or its potential market applications. Read more.

Meta releases an 'open' version of Google's podcast generator

Image via: Meta

Meta’s NotebookLlama is taking a shot at Google’s NotebookLM podcast generator with an “open” alternative that turns text files—like PDFs or blogs—into conversational, podcast-style recordings. Powered by Meta’s Llama models, NotebookLlama adds a bit of scripted flair and back-and-forth interruptions to simulate live dialogue before converting it into audio via open-source text-to-speech.

The output has a robotic edge, with occasional voice overlaps, which Meta’s team attributes to the current text-to-speech model limitations. Researchers suggest improvements are possible with dual-agent setups for more natural dialogue pacing. Like its counterparts, NotebookLlama hasn’t quite sidestepped the AI “hallucination” problem, so some details in its content may still lean fictional. While early, NotebookLlama is a curious blend of open-source AI and podcasting, putting Meta on the map in automated content creation—with some fine-tuning still in sight. Read more.

Apple's new Ferret-UI 2 AI system can control apps across iPhones, iPads, Android, and Apple TV

Apple has introduced Ferret-UI 2, an innovative AI system designed to enhance app interaction across a range of devices, including iPhones, iPads, Android devices, web browsers, and Apple TV. The system achieved an impressive 89.73 in UI element recognition tests, outperforming its predecessor and even GPT-4o, which scored 77.73.

Rather than relying on precise click coordinates, Ferret-UI 2 intelligently discerns user intent, pinpointing the right buttons with ease. Its adaptive architecture balances image resolution and processing needs, achieving 68% accuracy on iPads and 71% on Android devices when trained only on iPhone data. Yet it stumbles when transitioning between mobile and TV or web interfaces, grappling with layout discrepancies.

This innovation paves the way for voice assistants like Siri to tackle more complex tasks, navigating apps and the web through simple voice commands. Read more.

OpenAI says it won't release a model called Orion this year

OpenAI has announced that it will not release a model known as Orion this year, contradicting recent reports about its product roadmap. A company spokesperson confirmed, “We don’t have plans to release a model code-named Orion this year,” while hinting at other forthcoming technologies. This statement comes in response to a report from The Verge, which suggested that Orion would launch by December, with early access given to trusted partners, including Microsoft.

Speculation surrounds Orion being a successor to GPT-4o, potentially trained on synthetic data from OpenAI’s reasoning model, o1. OpenAI has indicated it will continue developing both GPT models and reasoning models, each addressing distinct use cases. The ambiguity in OpenAI's announcement leaves room for alternative possibilities regarding its next major release. Read more.

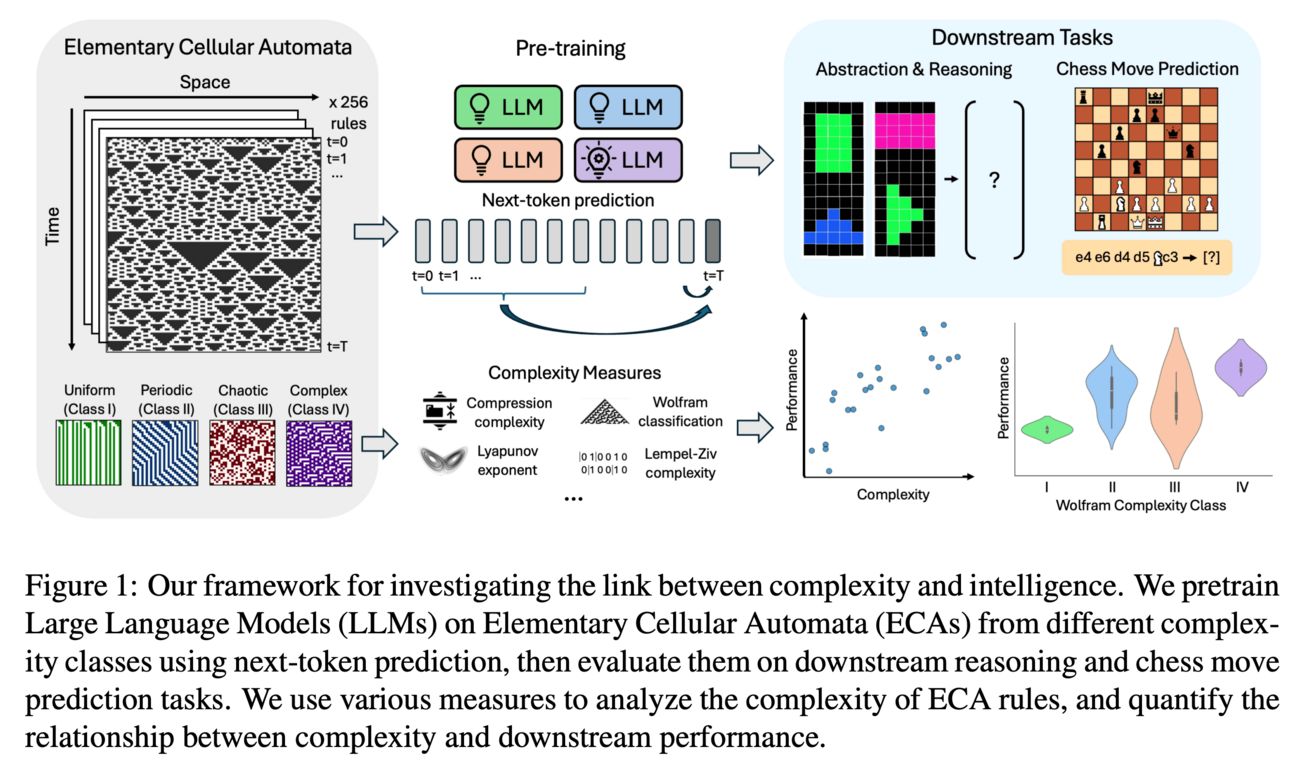

"Edge of Chaos": Yale study finds sweet spot in data complexity helps AI learn better

Researchers at Yale University have unearthed a curious phenomenon: AI models thrive when trained on data that strikes the right balance between order and chaos, dubbed the "edge of chaos." Utilizing elementary cellular automata (ECAs) of varying complexity, the team found that models trained on Class IV ECAs—those that dance between order and chaos—excelled in tasks like reasoning and predicting chess moves.

Image via: Zhang, Patel

In contrast, models fed overly simplistic patterns often learned to produce trivial solutions, missing out on sophisticated capabilities. This structured complexity appears crucial for knowledge transfer across tasks. The findings may also explain the prowess of large language models like GPT-3 and GPT-4, where the diverse training datasets could mimic the benefits seen with complex ECA patterns. More exploration is on the horizon, as the team plans to scale up their experiments with larger models and even more intricate systems. Read more.
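For readers who have not met elementary cellular automata before, here is a minimal Python sketch of how one evolves. It is an illustration only, not the Yale team's data pipeline: the function names `eca_step` and `run_eca`, the wrap-around edges, and the single-cell starting row are our own assumptions. Rule 110 is used because it is a commonly cited Class IV rule in Wolfram's classification.

```python
def eca_step(cells, rule):
    """Apply one update of an elementary cellular automaton.

    cells: list of 0/1 values; edges wrap around (an assumption of this sketch).
    rule:  Wolfram rule number, 0-255.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        # The three-cell neighborhood forms a 3-bit index into the rule number.
        idx = (left << 2) | (center << 1) | right
        nxt.append((rule >> idx) & 1)
    return nxt


def run_eca(rule, width=31, steps=15):
    """Evolve from a single live cell in the middle and return every row."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = eca_step(cells, rule)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    # Print the evolution of rule 110 as a text picture.
    for row in run_eca(110):
        print("".join("#" if c else "." for c in row))
```

Swapping `110` for a very simple rule such as `0` makes every row go blank after one step, which gives a feel for the "overly simplistic" patterns the study contrasts with Class IV behavior.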

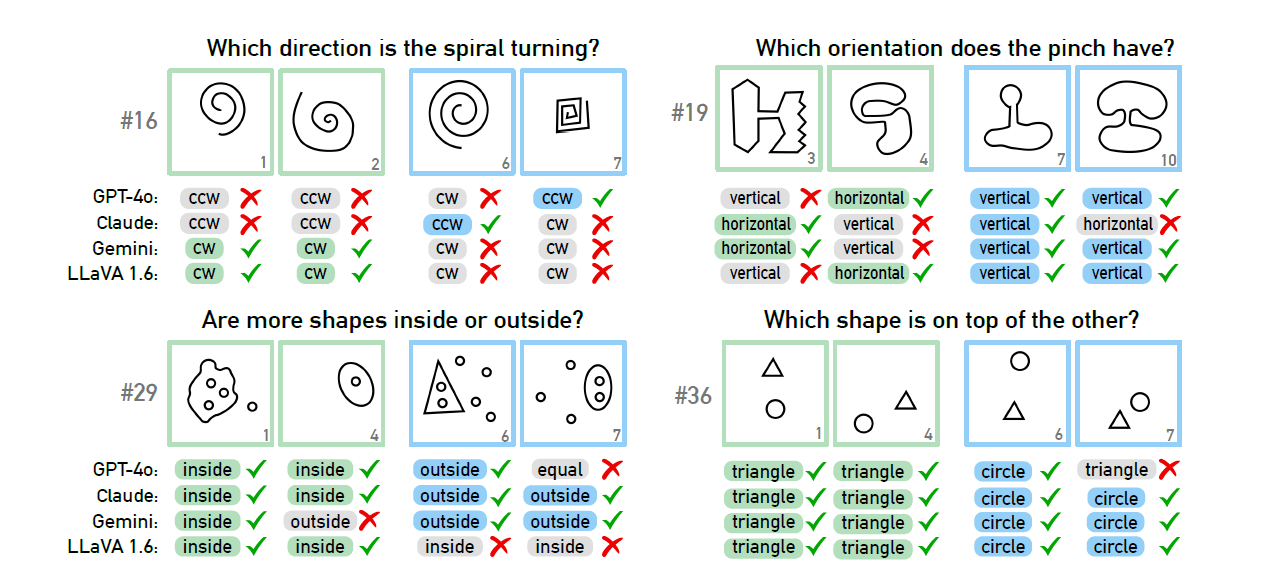

Vision language models struggle to solve simple visual puzzles that humans find intuitive

Image: Wüst et al.

A recent study from TU Darmstadt exposes the surprising shortfalls of cutting-edge vision language models (VLMs), including the much-lauded GPT-4o, when tackling Bongard problems—those deceptively simple visual puzzles that test our abstract reasoning. In a rather underwhelming performance, GPT-4o managed to solve just 21 out of 100 puzzles, with models like Claude, Gemini, and LLaVA trailing even further behind.

This glaring gap highlights the disparity in visual intelligence between humans and AI. The study also raises important questions about the efficacy of current AI evaluation benchmarks, suggesting they may inadequately reflect true reasoning capabilities. Researchers advocate for a reevaluation of assessment methods to better capture the nuances of visual reasoning in AI systems. Read more.

5 new AI-powered tools from around the web

Latest AI Research Papers

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on X!