Good morning. It’s Friday, July 21st.

Did you know: On this day in 1999, Apple unveiled the first iBook laptop.

In today’s email:

Apple developing 'Apple GPT' AI

Google's 'Genesis' AI writes news

Capgemini, Microsoft create generative AI platform

AI tools misuse digital artist Rutkowski’s work

MI6 Chief: AI can't replace human spies

AI21 Labs unveils Contextual Answers for enterprises

AI-related job postings surge by 450%

OpenAI enhances ChatGPT with custom instructions

GitHub's AI chatbot, Copilot Chat, enters beta

AI Showrunner makes custom South Park episodes

Tesla to build 'Dojo' AI supercomputer amid chip shortage

US Judge questions artists' lawsuit against AI firms

AI surveillance tracks fare evasion in NYC subway

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think of this edition by replying to this email, or DM us on Twitter.

Today’s edition is brought to you by:

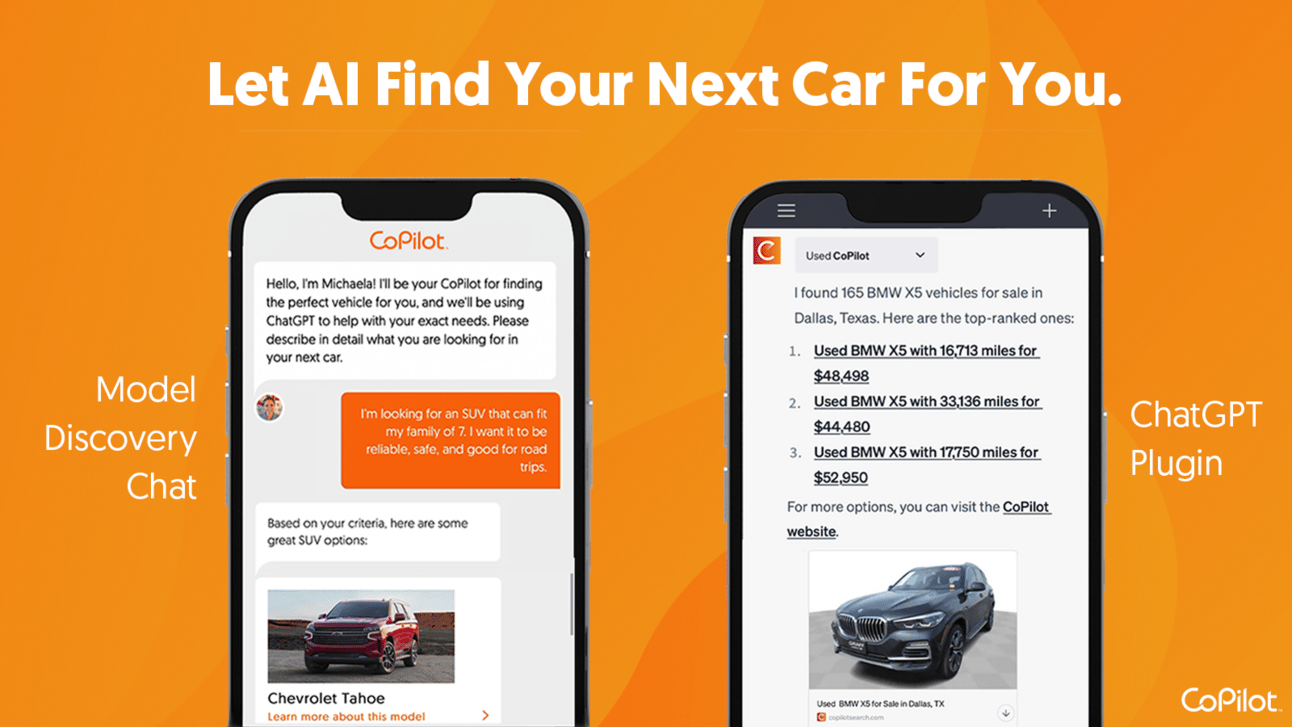

CoPilot AI

CoPilot has launched the first ChatGPT plugin for car shopping, providing actionable AI-powered analysis & rankings of every car with data from across the internet and CoPilot proprietary databases.

Your CoPilot Intelligent Agent brings actionable AI to your car shopping experience:

Searches every dealer to identify every matching car for sale in your area based on your specific needs

AI-powered analysis & rankings harnessing data from across the internet in combination with proprietary CoPilot databases

Unlike other major car shopping sites & apps, which return results based on which dealers pay them

Two New Tools: ChatGPT Plugin & Model Discovery Chat Tool

Take the grind out of car shopping, let AI find your next car for you!

Today’s trending AI news stories

Apple Developing “Apple GPT” Language Model

Apple, the $3 trillion technology giant that is now worth more than the entire UK stock market, is reportedly venturing into the fiercely competitive field of AI with the development of its new AI tool, 'Apple GPT', codename 'AJAX'. This service, still under development, marks Apple's entry into the large language model race, so far dominated by firms like OpenAI and Google.

While details of the ambitious project are still under wraps, speculation about its applications is making waves in the tech industry. Among the possibilities being discussed is the integration of Apple GPT into Apple's existing suite of services, which includes iMessage, Keynote, Pages, and Health.

Here are some speculative integrations of Apple GPT we could see by 2024:

iMessage Integration

The iMessage service, already boasting end-to-end encryption, could see a massive upgrade with the integration of Apple GPT. By understanding the context and semantics of each user's conversations, Apple GPT could potentially offer smart, contextual responses, streamline scheduling, and organize reminders directly from the chat interface. Moreover, this could pave the way for real-time language translation.

Keynote Integration

The integration of Apple GPT into Keynote could revolutionize the way presentations are created and delivered. An AI tool that not only helps with the design and layout of slides but also generates content based on user prompts could speed up content creation tenfold. It could even provide real-time suggestions to enhance the quality of presentations and facilitate more interactive audience engagement, turning Keynote into a truly dynamic tool.

Pages Integration

When it comes to Pages, Apple GPT could be a game-changer for authors, students, and professionals. By providing contextual recommendations, corrections, and instant formatting (no more looking up APA style guides), an integration like this would drastically speed up the writing experience. It could aid in creating outlines for essays, suggest improvements to sentences, and even assist in generating content for complex topics.

Health Data Integration

Perhaps the most intriguing of all possible integrations is in the realm of health data. As health tracking becomes more prevalent with devices like the Apple Watch, integrating Apple GPT could potentially add a previously unseen level of predictive and diagnostic intelligence. It could analyze users' health data, offer insights, provide suggestions for healthier habits, or even identify patterns that may be indicative of potential health risks.

Consider this: The effectiveness of an AI system is inherently linked to the quality and volume of the data it has access to. Imagine if the years of data you have stored on iCloud - including health metrics, iMessage interactions, photographs, and calendar entries - could be utilized by Apple GPT and Siri. This duo could transform into the most potent AI assistant yet witnessed.

They would understand your patterns, evolve alongside you, and even potentially serve as your personalized life guide, continually on the lookout for your well-being. This level of deep, personalized assistance, enabled by your own rich data tapestry, could redefine what it means to have an AI assistant.

We’ll be watching.

Google Tests A.I. Tool That Is Able to Write News Articles: Google is reportedly testing a new AI tool internally known as Genesis, which can generate news articles by taking in information about current events. The product has been demonstrated to executives from major news organizations, including The New York Times, The Washington Post, and News Corp, which owns The Wall Street Journal. However, some have raised concerns about its impact on journalistic credibility and the responsible use of generative AI in newsrooms. Google has clarified that these tools are not intended to “replace” journalists but to offer them writing style options. For now.

Capgemini and Microsoft collaborate to transform industries with accelerated generative AI implementations: Capgemini and Microsoft have collaborated to create the Azure Intelligent App Factory, an initiative aimed at accelerating generative AI implementations in various industries. The platform combines Capgemini’s industry knowledge, data, and AI expertise with Microsoft’s market-leading technology, including Microsoft Cloud, Azure OpenAI Service, and GitHub Copilot. The Azure Intelligent App Factory is designed to help organizations maximize their AI investments, implement AI solutions responsibly, and drive tangible business outcomes.

Digital artist's work copied more times than Picasso: Digital artist Greg Rutkowski’s work has been used as a prompt in AI tools generating art over 400,000 times since September 2022, without his consent. Generative AI platforms like Midjourney, DALL-E, NightCafe, and Stable Diffusion rely on billions of images scraped from the internet to produce new artworks, raising concerns among artists about the future of their work.

AI Won’t Replace Human Spies, Says Britain’s MI6 Chief: The head of Britain’s MI6, Richard Moore, stated in a public speech that AI won’t replace human intelligence gathering, as certain “human factors” are still beyond AI’s replication capabilities. Moore highlighted the unique characteristics of human agents in intelligence operations, emphasizing the value of human relationships in gathering crucial information. MI6 is, however, already using AI to identify and disrupt the supply of weapons to Russia for its invasion of Ukraine.

AI21 Labs debuts Contextual Answers, a plug-and-play AI engine for enterprise data: AI21 Labs has introduced Contextual Answers, a plug-and-play generative AI engine aimed at helping enterprises leverage their data assets more effectively. The API can be integrated directly into digital assets, enabling users to interact with LLM technology for accessing information through conversational experiences. The AI engine is optimized to understand internal jargon and maintain data integrity by not mixing organizational knowledge with external information.

AI-skills job postings jump 450%; here's what companies want: Job postings for AI-related skills have surged 450%, particularly in generative AI roles such as prompt engineers, AI content creators, and data scientists, according to a report from Upwork. The demand for AI skills is outpacing the availability of talent, prompting companies to train existing employees while recruiting new ones. Expertise in AI platforms such as ChatGPT, DALL-E, and Jasper is especially sought after among freelance professionals.

OpenAI launches customized instructions for ChatGPT: OpenAI has introduced custom instructions for ChatGPT, letting users set preferences that the model takes into account in every conversation instead of repeating them in each prompt. The company says data from custom instructions may be used to improve model performance, including how well the model adapts to instructions without overdoing it. Users can opt out of this data collection through data control settings. Changes take effect in the next session, and instructions are limited to 1,500 characters.

GitHub’s AI-powered Copilot Chat feature launches in public beta: GitHub has released Copilot Chat, a coding chatbot aimed at enhancing developer productivity. Contextually aware of code and error messages, it offers real-time guidance, code analysis, and troubleshooting. Mario Rodriguez, GitHub’s VP of Product, claims Copilot X can boost efficiency tenfold, enabling developers to accomplish tasks in minutes rather than days. The beta launch is restricted to enterprise users via Microsoft’s Visual Studio and Visual Studio Code apps. GitHub is also working on voice-to-code interactions. The Copilot X system is designed to streamline coding processes and revolutionize developer productivity.

AI tool creates South Park episodes with user in starring role: Fable Simulation, a US-based company, has introduced an AI tool called “AI Showrunner” that can create episodes of the popular animated series South Park, featuring users as the central characters. The tool, utilizing generative AI, allows users to provide a one- or two-sentence prompt, generating a unique episode complete with animation, voices, and editing. However, Fable Simulation emphasized that the South Park experiment was conducted solely for research purposes and won’t be made available to the public.

Elon Musk says Tesla will spend $1 billion to build a 'Dojo' A.I. supercomputer—but it wouldn't be necessary if Nvidia could just supply more chips: Tesla’s CEO, Elon Musk, is planning an investment of over $1 billion to build an AI supercomputer known as “Dojo,” a move driven by the scarcity of Nvidia’s advanced AI training chips. Musk has expressed admiration for Nvidia’s CEO, Jensen Huang, and Tesla continues to seek the highly sought-after A100 tensor core GPU clusters. Tesla’s ambitious goal of achieving a compute capability of 100 exaFLOPS highlights its relentless demand for AI chips. Musk’s pursuit of full autonomy for Tesla vehicles hinges on Dojo’s potential, relying on extensive camera data and custom silicon to train the vehicles’ intelligence.

US judge finds flaws in artists' lawsuit against AI companies: U.S. District Judge William Orrick has urged the artists involved in the lawsuit against generative AI companies to offer more clarity and differentiation in their claims against Stability AI, Midjourney, and DeviantArt. Judge Orrick expressed skepticism about the plausibility of their works being involved, given that the AI systems have been trained on an extensive dataset of “five billion compressed images.” He also cast doubt on the claim pertaining to output images, as he found little substantial similarity between the artists’ creations and the AI-generated images.

NYC subway using AI to track fare evasion: New York City’s subway stations have quietly introduced surveillance software powered by artificial intelligence to identify fare evaders, as revealed in public documents obtained by NBC News. The system, developed by Spanish company AWAAIT, is currently operational in seven stations and is expected to expand to approximately two dozen more by year-end. The Metropolitan Transportation Authority seeks to address fare evasion concerns and assess lost revenue.

🎧 Did you know AI Breakfast has a podcast read by a human? Join AI Breakfast team member Luke (an actual AI researcher!) as he breaks down the week’s AI news, tools, and research: Listen here

5 new AI-powered tools from around the web

Articula is an AI-powered call translation app supporting 24 languages with interpreter-level accuracy. Create a personalized AI voice in seconds. Choose from flexible packages for call minutes, enjoy privacy policy transparency, and explore compatibility with various Apple products.

Magic Hour is an AI video platform that simplifies video creation with remarkable ease. Automate the entire production process, repurpose existing videos, and generate captivating visual content to engage and expand your audience.

Sidekic AI: The AI assistant with infinite memory. Automatically save, tag, and organize web resources. Leverage expert knowledge through handpicked guides and resources, and share them in playlists called StarterPacs.

Superhuman AI offers the fastest email experience, saving teams over 10 million hours yearly. Increase productivity and responsiveness with Superhuman’s intuitive interface. Embrace the stress-free email solution and regain valuable time for what truly matters. Ideal for teams using Gmail or Outlook.

Agent.so is a tool for evaluating machine learning model performance. It assesses accuracy, precision, recall, and F1 score, while also offering insights into the model’s behavior, feature importance, and confusion matrices. Optimize your ML models with Agent.so’s comprehensive analysis and enhance your understanding of their capabilities and characteristics.

arXiv is a free online library where scientists share their research papers before they are published. Here are the top AI papers for today.

TokenFlow is an innovative framework for text-driven video editing, utilizing a pre-trained text-to-image diffusion model. By enforcing consistency in the diffusion feature space, the method generates high-quality videos that adhere to the target text while preserving spatial layout and motion. TokenFlow surpasses existing baselines, demonstrating significant improvements in temporal consistency. Though not suitable for structural changes, the method shows promise for enhancing video synthesis and inspiring future research in image models for video tasks.

📄 SCIBENCH: Evaluating College-Level Scientific Problem-Solving Abilities of Large Language Models

SCIBENCH puts large language models (LLMs) to the test, exploring their college-level scientific problem-solving abilities. While prior benchmarks showcased their mathematical prowess, SCIBENCH raises the bar with more complex, open-ended challenges in physics, chemistry, and math. The popular LLMs, GPT-3.5 and GPT-4, didn’t perform as well as expected, revealing their limitations in advanced reasoning.

Researchers present Brain2Music, a method to reconstruct music from functional magnetic resonance imaging (fMRI) data. They use a music generation model, MusicLM, conditioned on music embeddings derived from fMRI data. The reconstructed music semantically resembles the original stimuli, showcasing the potential of text-to-music models. The study also investigates the brain regions corresponding to high-level semantic and low-level acoustic features of music. Brain2Music offers valuable insights into the intersection of AI-generated music and brain activity, but further research is needed to improve temporal alignment and explore music reconstruction from imagination.

Carnegie Mellon University researchers explore the potential of large language models (LLMs), like ChatGPT, to replicate crowdsourcing pipelines. LLMs have shown promise in mimicking human behavior, but can they handle more complex tasks? The study delves into LLMs’ abilities, revealing that while they can simulate some crowd workers’ tasks, their success varies. Understanding LLM strengths and human-facing safeguards can optimize task distribution between LLMs and humans. The investigation sheds light on the relative strengths of LLMs in different tasks and their potential in handling complex processes.

Researchers reveal DNA-Rendering, a groundbreaking human-centric dataset for neural actor rendering. With 1500+ diverse subjects, it captures ethnicity, age, clothing, and motion, elevating rendering realism. Employing a 360-degree indoor system with 60 calibrated RGB cameras, it ensures unparalleled quality in 5000+ video sequences and 67.5 million frames. Annotations for keypoints and segmentation facilitate research. The dataset also establishes benchmarks for view synthesis, pose animation, and identity rendering. DNA-Rendering’s potential spans computer vision, graphics, and real-world applications, igniting innovations and pushing human-centric rendering to new frontiers.

Thank you for reading today’s edition.

Your feedback is valuable.

Respond to this email and tell us how you think we could add more value to this newsletter.