Good morning. It’s Monday, January 6th.

Did you know? In early January 2004, NASA's Spirit rover successfully landed on Mars.

In today’s email:

OpenAI Losing Money on $200/mo Plans

ByteDance’s AI Audio for Images

Halliday AI Smart Glasses

Brain-Computer Interface Decodes Thoughts

4 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

In partnership with SPEECHMATICS

👂 Speechmatics - Introducing the Best Ears in AI

In many industries, such as healthcare, education, or food service, Voice AI that understands only most of the words it hears isn't good enough.

When precision is needed, Speechmatics offers:

Ultra-accurate, real-time speech recognition, even in noisy environments

Inclusive understanding of any language, accent, or dialect

Seamless support for group conversations

Customer relationships are built on how well you listen. Speechmatics ensures your AI apps listen better than ever.

Thank you for supporting our sponsors!

Today’s trending AI news stories

OpenAI is losing money on its pricey ChatGPT Pro plan, CEO Sam Altman says

OpenAI CEO Sam Altman disclosed that the company is incurring losses on its $200-per-month ChatGPT Pro plan, driven by unexpectedly high user engagement. The plan, introduced last year, provides access to the o1 “reasoning” model, o1 pro mode, and relaxed rate limits on tools like the Sora video generator. Despite securing nearly $20 billion in funding, OpenAI remains financially strained, with reported losses of $5 billion against $3.7 billion in revenue last year.

Escalating expenditures, particularly for AI training infrastructure and operational overhead, have compounded the challenge; ChatGPT alone once cost $700,000 a day to run. As OpenAI considers corporate restructuring and potential subscription price hikes, it is targeting an ambitious $100 billion in revenue by 2029. Read more.

ByteDance's new AI model brings still images to life with audio

ByteDance’s INFP system redefines how static images interact with audio, animating portraits with lifelike precision. By first absorbing motion patterns from real conversations and then syncing them to audio input, INFP transforms still photos into dynamic dialogue participants.

The system’s two-step process, motion-based head imitation followed by audio-guided motion generation, automatically assigns speaking and listening roles while preserving natural expressions and lip sync. Trained on the DyConv dataset, which captures over 200 hours of high-quality conversation, INFP outperforms existing tools in fluidity and realism. ByteDance plans to extend the approach to full-body animation, but to counter potential misuse, the technology will remain restricted to research environments for now. Read more.

Halliday unveils AI smart glasses with lens-free AR viewing

Image Credit: Halliday

Halliday’s AI smart glasses break the mold with a design that’s as functional as it is stylish. Forget bulky lenses: thanks to DigiWindow, which Halliday bills as the smallest near-eye display module on the market, images are projected directly onto the eye. This lens-free system ensures nothing obstructs your vision.

The glasses aren’t just reactive; they feature a proactive AI agent that anticipates user needs by analyzing conversations and delivering contextually relevant insights. Features such as AI-driven translation, discreet notifications, and audio memo capture are all controlled through a sleek interface.

Weighing just 35 grams, the glasses offer all-day comfort with a retro edge. Priced between $399 and $499, Halliday’s eyewear is aimed at those seeking both discretion and advanced technology. Read more.

Brain-computer interface developed in China decodes thoughts in real time

NeuroXess, a Chinese startup, has achieved two significant feats in brain-computer interface (BCI) technology with a device implanted in a 21-year-old patient with epilepsy. The device decodes brain signals in real time, translating thoughts into speech and controlling robotic devices. Its 256-channel flexible system interprets high-gamma brain signals, correlating them with cognition and movement.

Within two weeks of implantation, the patient could control smart home systems, use mobile apps, and even interact with AI through speech decoding. The system achieved 71% accuracy in decoding Chinese speech, a notable result given the complexity of the language. NeuroXess also showcased the patient’s ability to control a robotic arm and interact with digital avatars, marking a milestone in mind-to-AI communication. Read more.

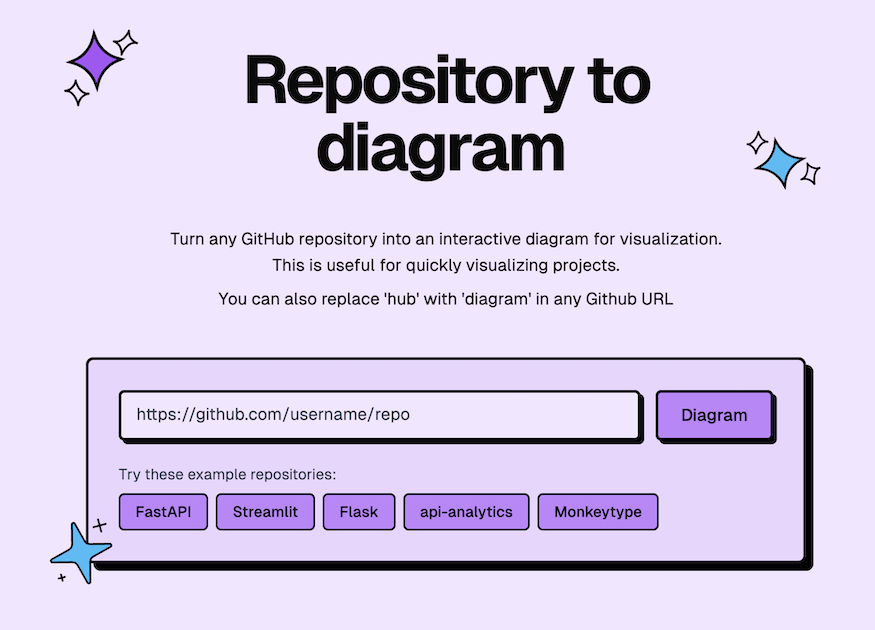

4 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on X!