Good morning. It’s Friday, September 26th.

On this day in tech history: In 2014, Facebook announced its DeepFace system, achieving 97.35 percent accuracy on the Labeled Faces in the Wild dataset, nearly matching human-level facial recognition performance at the time. The system used a nine-layer deep neural network with 120 million parameters and a 3D alignment step to normalize facial poses before feature extraction.

In today’s email:

ChatGPT debuts ‘Pulse’

DeepMind’s Gemini Robotics 1.5 lets robots plan, reason, and search the web for tasks

Meta arms AI with agent entropy handling and execution-tracking LLMs

Copilot expands with Claude and GPT orchestration while Microsoft tests AI content marketplace

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

Today’s trending AI news stories

GPT-5 matches human pros in 40% of real-world tasks, ChatGPT debuts ‘Pulse’

GPT-5-high now matches or beats human experts on 40.6% of tasks in OpenAI’s new GDPval benchmark, which covers 44 occupations across nine industries, from healthcare to finance and manufacturing. That is up from GPT-4o’s 13.7%, and the gains reflect sharper applied reasoning, faster research synthesis, and more precise report generation. Claude Opus 4.1 hits 49%, boosted by polished visuals, showing how presentation can sway benchmark outcomes.

Image: Tejal Patwardhan on 𝕏

For users, ChatGPT Pulse upgrades your assistant from reactive to proactive, scanning your chat history, emails, and apps like Google Calendar overnight to deliver personalized visual “cards” each morning. From travel plans and workouts to meals, meetings, and task tracking, Pulse anticipates your day and adapts to your context. With feedback and the “Curate” tool, you fine-tune what it surfaces, giving a real-world glimpse of autonomous AI that acts intelligently without being prompted.

Monetization is on the radar, too. OpenAI is hunting for an ad lead to steer subscriptions, ad products, and other revenue streams under Fidji Simo, who now runs everything outside research, infrastructure, hardware, and security.

Meanwhile, ChatGPT users have spotted “alpha” agent variants, “truncation” and “prompt expansion,” that auto-activate browsing and tools. Early signs point to GPT-5 agents moving from reactive chatbots to proactive, autonomous task managers.

DeepMind’s Gemini Robotics 1.5 lets robots plan, reason, and search the web for tasks

Google DeepMind’s latest Gemini Robotics 1.5 and Robotics-ER 1.5 models are taking robots beyond single-step instructions, letting them plan multistep actions, adapt to real-world complexity, and pull guidance from the web. ER 1.5 interprets environments and converts web insights into stepwise instructions, which Robotics 1.5 executes via advanced vision-language processing. Skills transfer across platforms, so routines learned on the dual-arm ALOHA 2 also work on Apptronik’s Apollo humanoid.

Gemini 2.5 Flash and Flash-Lite sharpen agentic reasoning for software workflows, cutting output tokens by 24–50% to reduce latency and compute costs. Flash gains 5% on SWE-Bench Verified, and both models now follow complex instructions with higher fidelity, support richer multimodal outputs, and handle extended task chains, letting autonomous agents execute intricate sequences with minimal human oversight.
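To see why fewer output tokens translate into lower spend, here is a back-of-the-envelope sketch. Only the 24% and 50% reductions come from the figures above; the monthly token volume and the per-million price are hypothetical placeholders, not Google's pricing.

```python
# Hypothetical workload and price, chosen only to make the arithmetic visible.
MONTHLY_OUTPUT_TOKENS = 2_000_000
PRICE_PER_MILLION_USD = 1.00  # placeholder, not a published rate


def output_cost(tokens: float, price_per_million: float) -> float:
    """Cost of generating `tokens` output tokens at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


def cost_after_reduction(tokens: float, price_per_million: float, reduction: float) -> float:
    """Cost when the model emits `reduction` (0.0 to 1.0) fewer output tokens for the same work."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return output_cost(tokens * (1.0 - reduction), price_per_million)


baseline = output_cost(MONTHLY_OUTPUT_TOKENS, PRICE_PER_MILLION_USD)
for reduction in (0.24, 0.50):
    cost = cost_after_reduction(MONTHLY_OUTPUT_TOKENS, PRICE_PER_MILLION_USD, reduction)
    print(f"{reduction:.0%} fewer output tokens: ${cost:.2f} vs ${baseline:.2f} baseline")
```

Output-token cost scales linearly with the number of tokens generated, so a given percentage cut in tokens is the same percentage cut in that part of the bill. Latency savings depend on the serving setup and are not modeled here.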

NotebookLM is also evolving. Users can soon pull sources directly from Google Drive and, via a “deep research” mode, query the web for multi-source references. Hints of audio and video overview generation suggest teams may soon produce multimedia briefings straight from their notes.

Productivity tools are catching up too. Google Vids converts Slides decks into video with autogenerated scripts, narration, and background music, while Veo 3 adds AI-generated clip storyboarding with human oversight.

Search Live, embedded in Lens, turns queries into interactive, task-oriented AI guidance.

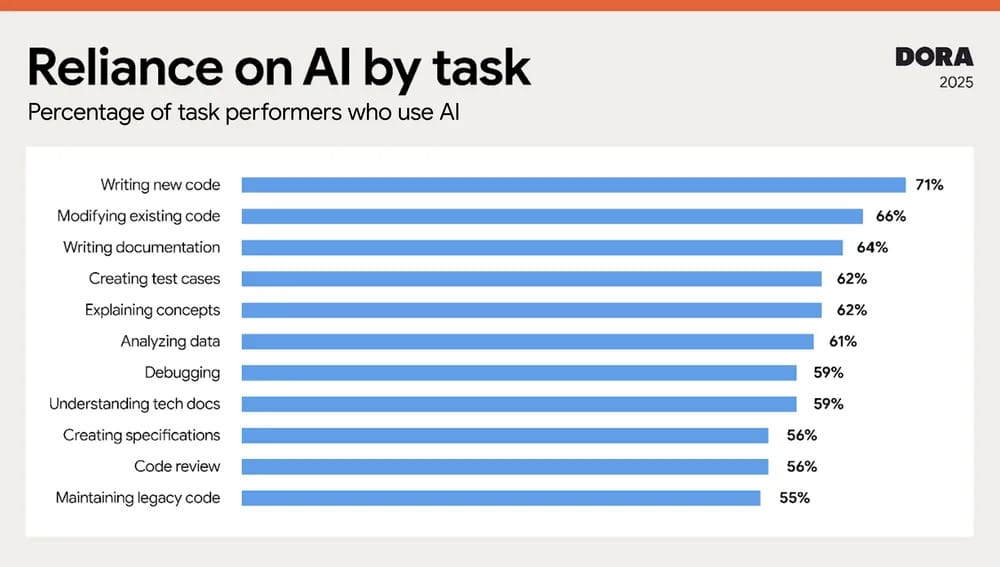

Google’s findings on how software developers adopt and use AI point to broad adoption of, and deep reliance on, AI across a range of tasks. | Image: Google

The new 2025 DORA Report also finds that 90% of coders use AI daily, but only 24% trust it. Gemini Code Assist generates two hours of work per engineer per day, powering over a quarter of Google’s new code. The report recommends “small-batch workflows” and guardrails to balance speed with reliability.

Meta arms AI with agent entropy handling and execution-tracking LLMs

Meta is sharpening AI for real-world complexity with three major moves. The Agents Research Environment (ARE) and Gaia2 benchmark stress-test agents in dynamic simulations packed with stateful apps, async events, deadlines, API failures, and multi-agent coordination. Gaia2 scores resilience and accuracy, not just output correctness. Early runs across 1,120 mobile tasks put GPT-5 at the top, setting a high bar for multi-agent robustness.

On coding, Meta’s 32B-parameter Code World Model (CWM) goes beyond code generation. Trained on 120M Python execution traces and fine-tuned with reinforcement learning across coding, math, and reasoning tasks, it simulates execution line by line and predicts infinite loops, runtime behavior, and algorithmic complexity. Benchmarks show 65.8% pass@1 on SWE-bench Verified, 68.6% on LiveCodeBench, 96.6% on Math-500, and 76% on AIME 2024, with a 131k-token context window and open checkpoints for experimentation.
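For readers wondering what a "Python execution trace" looks like, here is a toy sketch built on the standard library's `sys.settrace`. It is an illustration only, not Meta's data pipeline or trace format; the helper `trace_lines` and the sample function `running_sum` are made up for this example.

```python
import sys


def trace_lines(func, *args):
    """Run func(*args), recording (line offset, local variables) just before each line runs."""
    steps = []
    code = func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # ignore frames that belong to other functions
        if event == "line":
            offset = frame.f_lineno - code.co_firstlineno
            steps.append((offset, dict(frame.f_locals)))  # snapshot the locals
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(previous)  # always restore whatever tracer was active before
    return result, steps


def running_sum(n):
    total = 0
    for i in range(n):
        total += i
    return total


result, steps = trace_lines(running_sum, 3)
for offset, local_vars in steps:
    print(f"line +{offset}: {local_vars}")
print("result:", result)
```

Each recorded step pairs a source line with the variable state at that moment. A model trained on sequences like this is being asked to predict the next state, which is a different task from predicting the next token of source code.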

Image: Meta

Meta is also launching Vibes, a new feed in its Meta AI app and on meta.ai that generates short-form AI videos for users to browse, remix, and share.

The feature draws on AI models from Meta as well as partnerships with Midjourney and Black Forest Labs, producing content ranging from surreal animations to historical vignettes with minimal human input.

Copilot expands with Claude and GPT orchestration while Microsoft tests AI content marketplace

Copilot is no longer bound to a single engine. Anthropic’s Claude Sonnet 4 and Opus 4.1 are now embedded alongside OpenAI’s GPT models across Microsoft 365 Copilot and Copilot Studio. In practice, that means a research agent drafting a market report can run on Claude for sharper reasoning, or on GPT for faster narrative polish.

Copilot Studio takes it further with orchestration, letting enterprises assign different models to different steps of a workflow. A compliance-heavy check might sit on Claude and customer-facing copy on GPT-5, all stitched together into a multi-agent system with fallback logic if one model is disabled. An early rollout is already live, with a broader preview due in two weeks and production availability by year’s end.
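The per-step routing with fallback described above can be sketched in a few lines. This is a hypothetical illustration, not Copilot Studio's configuration format or API; the step names, the model labels, and the `plan` helper are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    preferred: tuple[str, ...]  # model labels in priority order (hypothetical)


def pick_model(step: Step, disabled: frozenset[str]) -> str:
    """Return the first preferred model that has not been disabled."""
    for model in step.preferred:
        if model not in disabled:
            return model
    raise RuntimeError(f"no enabled model for step {step.name!r}")


# Hypothetical workflow: each step names a first choice and a fallback.
WORKFLOW = [
    Step("compliance_check", ("claude", "gpt")),
    Step("customer_copy", ("gpt", "claude")),
]


def plan(workflow, disabled=frozenset()):
    """Map every step to the model that would run it, given the disabled set."""
    disabled = frozenset(disabled)
    return {step.name: pick_model(step, disabled) for step in workflow}


print(plan(WORKFLOW))
print(plan(WORKFLOW, disabled={"claude"}))
```

Keeping the priority list on each step, rather than one global default, is what lets an admin turn off a single model without breaking the workflow: every step simply falls through to its next choice.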

On the content side, Microsoft is piloting a Publisher Content Marketplace (PCM), pitched to outlets in Monaco last week. Instead of flat fees, PCM would compensate publishers based on content usage inside AI products like Copilot, a step toward usage-linked royalties in an industry still dominated by one-off licensing deals.
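Microsoft has not published how PCM payouts would be calculated, so the following is only one plausible reading of "usage-linked": a fixed pool split pro rata by how often each publisher's content is used. The pool size, publisher names, and the `usage_royalties` function are assumptions for illustration.

```python
def usage_royalties(pool_cents: int, usage_counts: dict[str, int]) -> dict[str, int]:
    """Split a payout pool (in cents) across publishers in proportion to usage.

    Integer division rounds each share down, so a few cents may remain undistributed.
    """
    total = sum(usage_counts.values())
    if total == 0:
        return {publisher: 0 for publisher in usage_counts}
    return {
        publisher: pool_cents * count // total
        for publisher, count in usage_counts.items()
    }


# Hypothetical month: a $100.00 pool and three made-up publishers.
usage = {"outlet_a": 600, "outlet_b": 300, "outlet_c": 100}
for publisher, cents in usage_royalties(10_000, usage).items():
    print(f"{publisher}: ${cents / 100:.2f}")
```

Under a flat-fee deal all three outlets would be paid the same regardless of these counts; under a pro-rata split, the payout tracks how much each outlet's content is actually surfaced.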

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!