Good morning. It’s Monday, September 15th.

On this day in tech history: In 2011, PLOS ONE published MultiMiTar, an early machine-learning tool for predicting microRNA targets using feature engineering and multi-objective optimization. It’s a neat snapshot of how bioinformatics tackled messy data with handcrafted ML pipelines before deep learning took over.

In today’s email:

ChatGPT Prepares Native Ordering System

Google’s VaultGemma sets privacy milestone

Speed over nuance, specialists over generalists: xAI’s new AI play

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

How 433 Investors Unlocked 400X Return Potential

Institutional investors back startups to unlock outsized returns. Regular investors have to wait. But not anymore. Thanks to regulatory updates, some companies are doing things differently.

Take Revolut. In 2016, 433 regular people invested an average of $2,730. Today? They got a 400X buyout offer from the company, as Revolut’s valuation increased 89,900% in the same timeframe.

Founded by a former Zillow exec, Pacaso’s co-ownership tech reshapes the $1.3T vacation home market. They’ve earned $110M+ in gross profit to date, including 41% YoY growth in 2024 alone. They even reserved the Nasdaq ticker PCSO.

The same institutional investors behind Uber, Venmo, and eBay backed Pacaso. And you can join them. But not for long. Pacaso’s investment opportunity ends September 18.

Paid advertisement for Pacaso’s Regulation A offering. Read the offering circular at invest.pacaso.com. Reserving a ticker symbol is not a guarantee that the company will go public. Listing on the NASDAQ is subject to approvals.

Today’s trending AI news stories

ChatGPT prepares native ordering system, Altman’s biotech startup learns to hack aging

OpenAI has a new Orders hub in development, designed to store credit cards, enable one-click checkout, and track purchases across desktop and mobile. Voice Mode is being folded directly into the main app window for tighter multimodal workflows, and parental controls on the web client hint at future deployments in classrooms and youth-focused contexts. Rollout details remain unconfirmed, but the groundwork indicates a staged release.

Image: Screenshot by TestingCatalog

At the same time, OpenAI is recalibrating its partnership with Microsoft. According to The Information, Microsoft’s revenue share is set to fall from roughly 20% to 8% by 2030, freeing up more than $50 billion to fund compute-intensive training and inference. Microsoft, in exchange, is expected to gain a one-third equity stake in a restructured OpenAI entity, though without a board seat. This underscores the asymmetry of the relationship: hyperscalers provide GPUs and cloud scale, but model companies retain governance.

On the narrative front, the company’s leadership is framing the industry’s turbulence as both necessary and inevitable. Board chair Bret Taylor likens the AI surge to the dot-com bubble, excessive in the short term but foundational for trillion-dollar industries.

Joe Betts-LaCroix is the CEO of Retro Biosciences, a longevity startup backed with cash from OpenAI's Sam Altman

Sam Altman, meanwhile, flags healthcare as one of the few fields AI can’t touch, even as he pushes deeper into biotech. His longevity startup, Retro Biosciences, is about to run its first human trial of RTR242, a pill designed to kickstart autophagy (the cell’s recycling process) to clear misfolded proteins tied to Alzheimer’s and potentially reverse neural aging. Retro is also working on blood regeneration (RTR890) and central nervous system repair (RTR888), with a boost from OpenAI’s GPT-4b micro, which has already delivered fifty-fold gains in cellular reprogramming markers.

Google’s VaultGemma sets privacy milestone as Hassabis tempers AGI hype

DeepMind just dropped VaultGemma, a 1-billion-parameter language model trained end-to-end with differential privacy. Because calibrated statistical noise is injected during training, the model’s ability to memorize and leak any individual training example is mathematically bounded. Researchers also introduced “DP Scaling Laws,” which adapt traditional scaling rules to private training to predict how compute, privacy, and data budgets trade off against model quality.
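For readers curious how “noise injected during training” works in practice: the standard recipe is DP-SGD, which clips each example’s gradient to a maximum norm and adds Gaussian noise before averaging. The Python below is a minimal, dependency-free sketch of one such step under that assumption. It is not VaultGemma’s training code, and the function names (`clip`, `dp_sgd_step`, `noise_batch_ratio`) and parameters are illustrative. Because the noise is added to the summed gradient and then divided by the batch size, the noise left in each update scales with noise_multiplier / batch_size, which is one simple reading of the “noise-batch ratio” the researchers model.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a single example's gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One illustrative DP-SGD update: clip per example, sum, add noise, average."""
    batch_size = len(per_example_grads)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    # Sum the clipped gradients coordinate by coordinate.
    summed = [sum(col) for col in zip(*clipped)]
    # Gaussian noise with std = noise_multiplier * max_norm masks any single example.
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    # Average over the batch and take a plain gradient-descent step.
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


def noise_batch_ratio(noise_multiplier, batch_size):
    """Noise surviving in the averaged gradient, relative to max_norm."""
    return noise_multiplier / batch_size
```

With `noise_multiplier=0.0` the step reduces to ordinary clipped gradient descent, which makes it easy to sanity-check. A real private training run also tracks the cumulative privacy budget (ε, δ) across all steps, which this sketch leaves out.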

VaultGemma is a 26-layer, decoder-only transformer with multi-query attention and a 1,024-token context length. It is the largest open model trained from scratch with differential privacy, and Google reports that its performance is comparable to non-private models of similar size from roughly five years ago, a sign that private training is closing, though not yet erasing, the gap with state-of-the-art. Weights are now available on Hugging Face and Kaggle.

The structure of our DP scaling laws. We establish that predicted loss can be accurately modeled using primarily the model size, iterations and the noise-batch ratio, simplifying the complex interactions between the compute, privacy, and data budgets. | Image: Google

Meanwhile, Demis Hassabis made it clear that today’s AI isn’t “PhD-level intelligence,” dismissing OpenAI’s framing of GPT-5 as misleading. Current models, he argues, still fail at basic arithmetic and lack continual learning, intuitive reasoning, and cross-domain creativity. He pegs AGI at five to ten years away, requiring breakthroughs beyond brute-force scaling. Speaking in Athens, Hassabis said the defining skill for the next generation won’t be coding but “learning how to learn,” as workers will need to reskill continuously.

On the consumer front, Google’s Gemini app just knocked ChatGPT off the top of the US App Store, picking up 23 million new users in just two weeks.

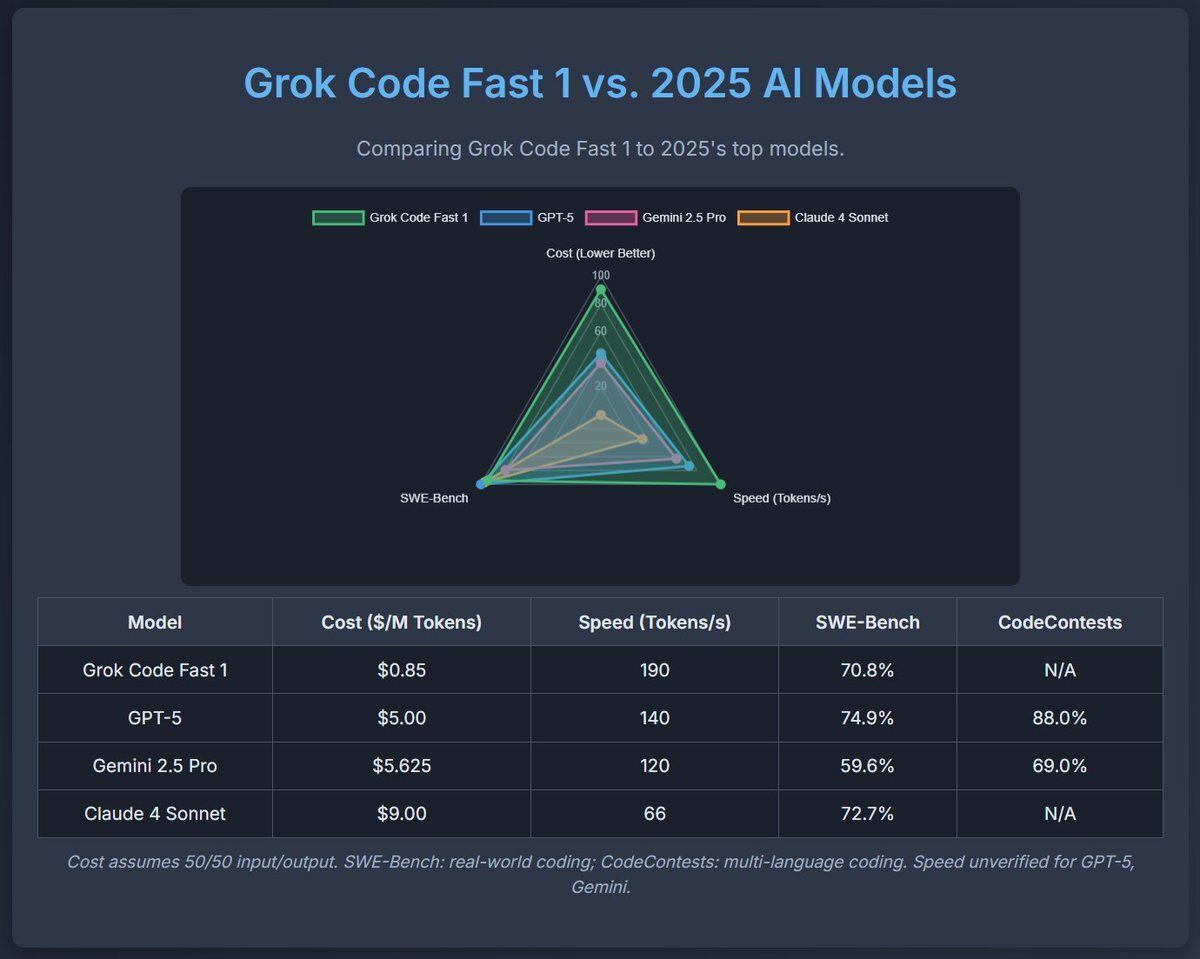

Speed over nuance, specialists over generalists: xAI’s new AI play

xAI has launched Grok 4 Fast, an early-access beta model that trades depth for raw speed, delivering answers up to 10 times faster than Grok 4 by spending less compute on deep reasoning. The result is a leaner model suited to straightforward factual lookups, simple code generation, and quick tool integrations, but it’s less useful if you’re after nuanced analysis or creative writing.

It’s essentially a “lean mode” LLM, first tested under the codename Sonoma, and it could soon replace Grok 3 for free-tier users. xAI has also introduced a new changelog page to make its updates more transparent, hinting at a push toward more iterative, user-facing development. But speed isn’t the only lever xAI is pulling.

Image: Tetsuo on 𝕏

The company also laid off about 500 staff, roughly a third of its data annotation team. These were the generalist tutors who sorted and explained raw data to train Grok. They’ve been swapped out for a planned wave of domain specialists in medicine, finance, and science, which xAI says it will expand “tenfold.”

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!