Good morning. It’s Friday, May 9th.

On this day in tech history: 1949: The EDSAC (Electronic Delay Storage Automatic Calculator) executed its first calculation at the University of Cambridge.

In today’s email:

Chrome’s On-Device AI Catches Scams In Real-Time

OpenAI’s Stargate Testimony and Developer Releases

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

In partnership with Balzac AI

Balzac is your fully autonomous AI-powered SEO copywriter — designed to help you scale your content production without lifting a finger.

From reading your website to analyzing your competitors, Balzac creates a tailored content strategy and automatically generates high-quality, SEO-optimized blog articles that match your tone of voice. No prompts, no editors, no content writers needed.

Balzac runs continuously in the background, delivering valuable, human-friendly content that ranks. It's like having a full-time content team — minus the overhead.

Whether you're an early-stage startup, a marketing agency, or a SaaS company looking to boost your organic traffic, Balzac makes AI-powered SEO effortless, predictable, and scalable.

Oh — and the best part? He never gets sick, takes days off, or misses deadlines 😉

👉 Try it now at https://hirebalzac.ai

Today’s trending AI news stories

Chrome’s On-Device AI Catches Scams In Real-Time

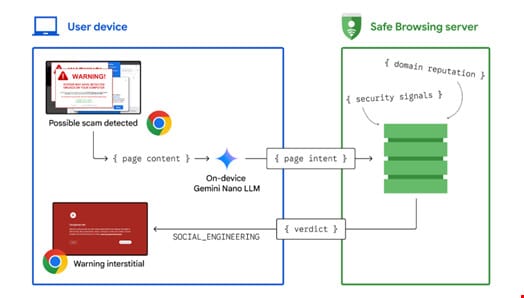

Google has embedded Gemini Nano—an on‑device large language model—into Chrome’s Enhanced Protection on desktop and Android. By analyzing page content locally, it flags phishing, tech‑support fraud and deceptive push notifications in real time, labelling suspect alerts as “Possible scam” and letting users block or override them. Early results show an 80% cut in scammy Search results and hundreds of millions of blocked scam attempts daily.

Overview of how on-device LLM-assisted scam mitigation works. Source: Google

Cost‑saving for developers

The Gemini 2.5 API now supports implicit caching: requests sharing a prefix with a prior call earn automatic cache hits and a 75% token discount, no explicit setup needed. Minimum prompt lengths have dropped to 1,024 tokens for Flash and 2,048 for Pro, widening eligibility. Usage metadata now reports cached token counts; explicit caching remains available for guaranteed savings.
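To make the discount concrete, here is a rough Python sketch of how the billed input cost might change when part of a prompt is served from the implicit cache. It uses only the figures quoted above (75% discount, 1,024 and 2,048 token minimums). The function names and the per-million-token price are hypothetical placeholders, and it assumes the discount applies only to the cached portion of the prompt.

```python
# Figures taken from the paragraph above; everything else is an assumption.
CACHE_DISCOUNT = 0.75                               # discount on cached tokens
MIN_PROMPT_TOKENS = {"flash": 1024, "pro": 2048}    # minimum prompt length per model family


def eligible_for_implicit_cache(model_family: str, prompt_tokens: int) -> bool:
    """Return True if the prompt meets the minimum length quoted for that family."""
    return prompt_tokens >= MIN_PROMPT_TOKENS[model_family]


def input_cost(prompt_tokens: int, cached_tokens: int, price_per_million: float) -> float:
    """Estimate input cost, assuming cached tokens are billed at 25% of the normal rate.

    cached_tokens would come from the usage metadata the API reports;
    price_per_million is a hypothetical price, not an official one.
    """
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    uncached = prompt_tokens - cached_tokens
    billed_tokens = uncached + cached_tokens * (1 - CACHE_DISCOUNT)
    return billed_tokens * price_per_million / 1_000_000


if __name__ == "__main__":
    # A 4,000-token prompt where 3,000 tokens repeat a prior request's prefix.
    full = input_cost(4000, 0, price_per_million=1.00)
    discounted = input_cost(4000, 3000, price_per_million=1.00)
    print(f"without cache: ${full:.5f}, with cache hit: ${discounted:.5f}")
```

Because hits depend on a shared prefix, putting the stable part of a prompt (system instructions, long documents) first and the variable part last should raise the odds of a hit.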

Gemini on iPad

Gemini 2.0 Flash’s image‑generation update improves text rendering and loosens content restrictions. Google also launched a dedicated Gemini iPad app with split‑view multitasking, bringing the AI assistant’s capabilities to tablet workflows ahead of further Google I/O announcements. A GitHub notebook is available for those looking to experiment with the new features.

OpenAI Fortifies Regional Data Control, Unlocks Bespoke AI Tuning

OpenAI’s massive Stargate initiative—backed by SoftBank, Oracle and MGX—will deploy AI supercomputing hubs worldwide under the banner of “democratic AI.” Beyond expanding access to cutting‑edge models, Stargate advances OpenAI’s soft‑power strategy, forging government partnerships and balancing sovereignty with economic opportunity.

At a Senate hearing, OpenAI’s Sam Altman, AMD’s Lisa Su, CoreWeave’s Michael Intrator and Microsoft’s Brad Smith pressed for streamlined permitting of data centres, power plants and chip fabs to underpin the AI tech stack. Altman cited the $500 billion Stargate project and warned that fragmented rules or strict export controls risk ceding ground to China. Senators flagged data privacy, cybersecurity and global competition, with Ted Cruz proposing an AI sandbox and Brad Smith stressing that ‘no country can win AI alone’.

OpenAI also rolled out reinforcement fine-tuning (RFT) on its o4-mini reasoning model, enabling enterprises to define Python-based grading functions that score outputs from 0 to 1 and train models on custom reward signals. Early adopters reported a 39% lift in tax analysis accuracy, a 12-point gain in ICD-10 medical coding and a 20% F1 boost on legal citation extraction. RFT training is billed at $100/hour (pro-rated), with a 50% discount for shared datasets; supervised fine-tuning for GPT-4.1 nano also launched for all paying developers.
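For a sense of what a Python-based grader could look like, here is a minimal sketch that scores a citation-extraction answer with F1, which always lands between 0 and 1. The `grade(sample, item)` entry point, the `output_text` and `reference_citations` keys, and the semicolon-separated output format are all assumptions made for illustration; check OpenAI's grader documentation for the exact interface.

```python
def f1_score(predicted: set, reference: set) -> float:
    """F1 overlap between two sets of strings; always in the range 0 to 1."""
    if not predicted and not reference:
        return 1.0  # nothing expected and nothing returned counts as a match
    overlap = len(predicted & reference)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)


def grade(sample: dict, item: dict) -> float:
    """Hypothetical grader: compare model-extracted citations with a reference list.

    sample["output_text"] is assumed to hold the model's answer as a
    semicolon-separated string; item["reference_citations"] is an assumed
    dataset field holding the expected citations.
    """
    raw = sample.get("output_text", "")
    predicted = {c.strip() for c in raw.split(";") if c.strip()}
    reference = {c.strip() for c in item.get("reference_citations", [])}
    return f1_score(predicted, reference)


if __name__ == "__main__":
    # Sample data for illustration only.
    sample = {"output_text": "410 U.S. 113; 347 U.S. 483"}
    item = {"reference_citations": ["347 U.S. 483", "384 U.S. 436"]}
    print(grade(sample, item))  # one of two citations matches on each side
```

A graded score like this gives partial credit for partly correct answers, which should provide a richer reward signal than a pass/fail check.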

Following its Europe rollout, OpenAI’s Asia data‑residency program lets ChatGPT Enterprise, Edu and API customers store data at rest in Japan, India, Singapore and South Korea. The move addresses regional sovereignty rules and complements OpenAI for Countries, building local infrastructure for regulated sectors like finance and healthcare.

Meta Redefines Perception Standards For AI Glasses

Meta’s FAIR lab has rolled out a suite of open-source models pushing new boundaries in perception, localization, and reasoning. The Meta Perception Encoder leads with top-tier zero-shot performance on image and video tasks. The Perception Language Model (PLM), trained on 2.5M human-labeled videos, excels in video QA and spatiotemporal reasoning. Meta Locate 3D decodes natural language prompts for pinpoint 3D object localization, while the Collaborative Reasoner enhances multi-agent collaboration in large language models—enabling more transparent and reproducible AI systems.

Super-Sensing Smart Glasses

Meta’s AI-powered smart glasses, Aperol and Bellini, are currently being tested with an always-on “super-sensing” mode.

Activated by “Hey Meta, start live AI,” the glasses continuously track your surroundings, flagging forgotten items or offering reminders. Current Ray-Ban hardware runs super-sensing for only 30 minutes; the new models aim for multi-hour battery life. Meta is also overhauling its privacy and safety protocols to balance real-time data capture with user control, and ear-worn devices with integrated cameras are in the works.

Fact-based news without bias awaits. Make 1440 your choice today.

Overwhelmed by biased news? Cut through the clutter and get straight facts with your daily 1440 digest. From politics to sports, join millions who start their day informed.

5 new AI-powered tools from around the web

Latest AI Research Papers

arXiv is a free online library where researchers share pre-publication papers.

📄 PrimitiveAnything: Human-Crafted 3D Primitive Assembly Generation with Auto-Regressive Transformer

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!