
Good morning. It’s Friday, January 16th.

On this day in tech history: In 2018, Alibaba's iDST team unveiled a model that topped Stanford's SQuAD reading-comprehension benchmark with an exact-match score of 82.44, edging past the human baseline of 82.30. Built on attention-based neural networks, this extractive QA result showed that attention mechanisms were ready to dominate, foreshadowing BERT and the modern LLM era.

In today’s email:

Google builds first personal AI ecosystem

OpenAI ‘walks away’ from Apple, and is now building its own U.S. robotics supply chain

Real-world Claude data forces Anthropic to halve productivity estimates

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

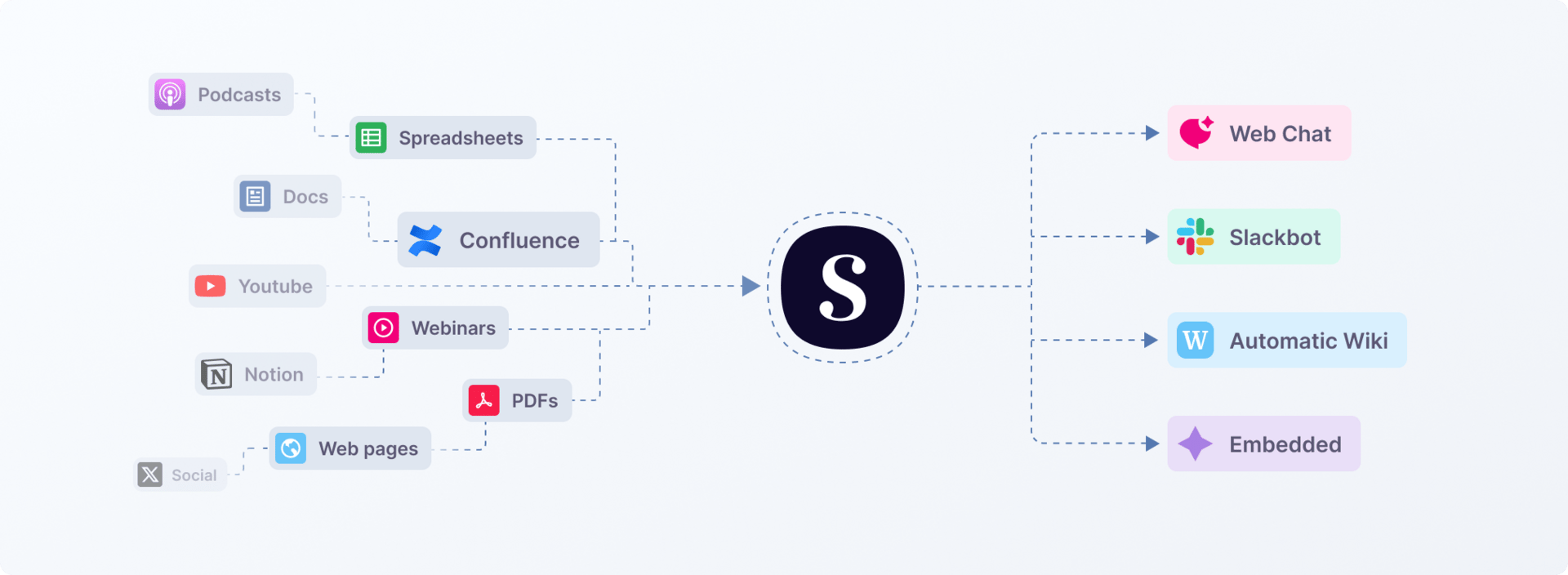

In partnership with Scroll

Turn any knowledge base into enterprise-grade chatbots and agents

Build AI experiences that people actually trust.

Scroll.ai's platform delivers accuracy, nuance, and depth that generic models can't touch. Your users will feel the difference from the first message.

⚡ Accelerate your team with on-demand knowledge
📚 Centralize fragmented information
🔧 Automate repetitive knowledge workflows
🧘 Free yourself from reactive work

Use the AI-BREAKFAST-2026 coupon to get two free months of the Starter plan ($158 value).

Thank you for supporting our sponsors!

Today’s trending AI news stories

Google builds first personal AI ecosystem

Google is building an AI ecosystem designed to tie your digital life together. The company launched "Personal Intelligence," an opt-in system that connects Gmail, Photos, YouTube, and Search through Gemini.

Users choose which apps to connect, can turn off personalization for individual chats, and can see exactly when personal data is referenced. Google says that data is used to generate context-aware responses but not to train the model, and guardrails protect sensitive information such as health records.

The updates extend across Google's product stack. Google Trends now automatically identifies, compares, and contextualizes search terms, and its revamped Explore page shows twice as many rising topics and suggests related searches.

NotebookLM gained Data Tables, converting unstructured notes into interactive, structured formats. Meeting notes, clinical trial results, and historical readings become analyzable datasets instead of static text.

Google DeepMind has deployed a math-specialized Gemini internally to prove a novel theorem in algebraic geometry, demonstrating AI’s growing role as a research collaborator rather than just a verification tool.

The company also released TranslateGemma, an open-weight translation model supporting 55 languages, in three sizes: 4B parameters for phones, 12B for laptops, and 27B for cloud servers. It retains multimodal capabilities, including translating text within images, and because general instruction data was included in training, it can also function as a chatbot. Read more.

OpenAI ‘walks away’ from Apple, and is now building its own U.S. robotics supply chain

OpenAI is pushing well beyond models. Bloomberg reports the company is building a U.S. supply chain for robotics, AI hardware, and data centers, including domestic silicon, motors, actuators, and cooling systems. A separate Financial Times report adds that OpenAI ‘strategically’ walked away from becoming Apple’s custom model provider, choosing instead to build its own AI devices that could compete directly with major consumer platforms.

The hardware play runs deeper than chips. OpenAI now backs Merge Labs, Sam Altman's brain-computer interface startup, which raised a $252M seed round. Merge is developing ultrasound-based BCIs that use molecular reporters, aiming for high-bandwidth neural interfaces without invasive implants.

On the product side, OpenAI quietly launched ChatGPT Translate, a standalone tool that works without an account and supports 25 languages. It is a test of specialized consumer tools beyond the core chat interface.

At the same time, OpenAI is scaling its backend: a partnership with Cerebras will add 750MW of ultra-low-latency inference capacity through 2028, running on wafer-scale chips optimized for real-time workloads.

OpenAI has also released GPT-5.2 Codex to developers through the Responses API, with expanded capabilities in multimodal coding, reasoning, and security analysis. Read more.

Real-world Claude data forces Anthropic to halve productivity estimates

Anthropic measured how people actually use Claude, and the results forced it to cut its productivity forecasts in half.

The fourth Economic Index report analyzed one million conversations from November 2025 using five metrics: task complexity, skill level, purpose, autonomy, and success rate. The findings: Claude completes high-skill, college-level tasks about 12× faster than humans, but success rates dip slightly on complex work. Adjusted for reliability, AI could lift U.S. labor productivity by 1–1.2 percentage points annually: significant, but well below Anthropic's earlier forecast.

Adoption remains uneven. Wealthy countries use Claude for work and personal tasks, while lower-income regions lean on it for education. Within jobs, Claude handles nearly half of sampled tasks, concentrated in computer and math work, and skews toward tasks that require more education, raising concerns that the work left to humans could be deskilled. Users increasingly collaborate with Claude rather than fully delegating to it: collaboration now accounts for 52% of interactions.

Separately, Claude Code now supports MCP Tool Search, which uses ‘lazy loading’ to load tool definitions only when they are needed instead of placing every tool in context up front, cutting context bloat. Developers can now connect hundreds of tools without paying a large context-window cost. Read more.
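For the curious, here is a toy Python sketch of the general lazy-loading idea. It is not Anthropic's implementation: `ToolSummary`, `LazyToolRegistry`, and the loader function are hypothetical names chosen for illustration. Only short tool summaries stay resident; a tool's full schema is fetched the first time it is actually selected, then cached.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ToolSummary:
    """Hypothetical lightweight entry: just enough text to match a search."""
    name: str
    description: str


class LazyToolRegistry:
    """Toy registry: keeps short summaries up front, loads full schemas on demand."""

    def __init__(self, summaries: List[ToolSummary], loader: Callable[[str], dict]):
        self._summaries: Dict[str, ToolSummary] = {s.name: s for s in summaries}
        self._loader = loader  # hypothetical: fetches a tool's full schema by name
        self._cache: Dict[str, dict] = {}

    def search(self, query: str) -> List[str]:
        """Return names of tools whose name or description contains the query."""
        q = query.lower()
        return [
            s.name
            for s in self._summaries.values()
            if q in s.name.lower() or q in s.description.lower()
        ]

    def load(self, name: str) -> dict:
        """Fetch the full schema the first time a tool is used, then reuse it."""
        if name not in self._cache:
            self._cache[name] = self._loader(name)
        return self._cache[name]


# Usage: a fake loader that records which schemas were actually fetched.
fetched: List[str] = []


def fake_loader(name: str) -> dict:
    fetched.append(name)
    return {"name": name, "input_schema": {"type": "object"}}


registry = LazyToolRegistry(
    [
        ToolSummary("github_create_issue", "Open an issue in a GitHub repository"),
        ToolSummary("slack_post_message", "Post a message to a Slack channel"),
    ],
    fake_loader,
)

matches = registry.search("slack")  # only the short summaries are scanned here
schema = registry.load(matches[0])  # full schema fetched once...
registry.load(matches[0])           # ...and served from the cache afterwards
```

The trade-off in this sketch: each new tool costs one extra search step, in exchange for never holding every full schema in memory (or, by analogy, in a model's context) up front.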

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!