Good morning. It’s Monday, December 15th.

On this day in tech history: In 1962, NASA's Mariner 2 became the first spacecraft to successfully fly by Venus, relaying data on its hellish atmosphere while holding its orientation through autonomous attitude control and sequencing. This deep-space feat relied on rudimentary onboard logic, an early echo of AI autonomy in robotics and pathfinding algorithms. Its Sun and Earth sensors and gyros loosely prefigured SLAM techniques in modern AI-driven probes, enduring 42 million miles without real-time human input.

In today’s email:

The Open-Source Voice Model That Changes Everything

Google just made real-time translation seamless on ANY headphones

$18-per-hour AI agent beats 9 out of 10 pro hackers in Stanford test

Runway drops Gen-4.5 with native audio edits and scene-wide ripple changes

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

In partnership with Tiny Fish

Mino: Build Agents for 95% of the Web — No APIs Needed

Most software only works where APIs exist. That’s the smallest part of the internet.

Mino lets your software and AI agents operate real websites directly—no SDKs, no integrations, no approvals. Seriously, this is the best AI Agent I’ve seen.

Automate websites the way humans use them

Click, navigate, fill forms, complete workflows programmatically

Build on legacy tools, internal dashboards, and niche sites

Ship products without waiting on API access or roadmaps

Turn the “hidden web” into usable surface area for software

If a human can do it in a browser, Mino can do it in code.

The web just became executable.

Thank you for supporting our sponsors!

Today’s trending AI news stories

The Open-Source Voice Model That Changes the Economics

For the last year, ElevenLabs has set the bar for high-quality text-to-speech. But it comes with the usual tradeoffs: closed models, usage caps, and rising costs as you scale. Chatterbox flips that model entirely. Built by Resemble AI, Chatterbox delivers comparable voice quality while being open-source (MIT-licensed), faster in real-time use, and dramatically cheaper to run—because you can host it yourself.

Unlike ElevenLabs’ API-only approach, Chatterbox runs locally or on your own infrastructure, eliminating per-character pricing and vendor lock-in. It supports zero-shot voice cloning, expressive speech control, and real-time synthesis fast enough for live agents and interactive apps. In blind listening tests, Chatterbox has even been preferred over ElevenLabs, despite carrying no license or usage fees beyond the hardware you run it on.

The strategic difference is simple: ElevenLabs sells access to voice. Chatterbox gives you ownership of it. You control the model, the latency, the deployment, and the economics. Built-in watermarking supports responsible use, while the open architecture makes it ideal for startups, internal tools, and AI agents that need voice at scale.

If ElevenLabs proved what was possible, Chatterbox proves it doesn’t need to be closed—or expensive. For builders who care about speed, cost, and control, this is the voice model that changes the game. Try it here.

Google just made real-time translation seamless on ANY headphones

Google just removed one of the biggest friction points in live translation. Translate’s Android beta now delivers real-time speech translation through any headphones in 70+ languages. Powered by Gemini, it focuses on meaning rather than literal word swaps, so tone, rhythm, and intent survive the translation, along with slang and local expressions, and it does so at lower latency.

At the same time, Translate is shifting from “use it when you travel” to “use it every day.” Google is expanding its AI-powered practice features to 20 more countries, with adaptive vocabulary, listening drills, and feedback that adjusts to how you actually learn.

Voice is also getting more disciplined. Gemini Live is picking up basic conversational manners such as pausing without cutting you off and letting you mute the mic while it’s speaking. Small changes that make real-time voice feel usable instead of awkward. In parallel, Google Search Live is rolling out a new Gemini-based audio model that sounds less synthetic and supports continuous voice conversations while pulling in live web results.

Google is also fixing a less visible problem: wasted compute. A new research framework teaches AI agents to monitor their own token and tool budgets in real time and adjust their behavior accordingly. Early results show higher accuracy at up to 50 percent lower cost, a shift away from brute-force scaling toward efficiency and control. That focus on efficiency fits DeepMind’s longer timeline: Chief AGI Scientist Shane Legg recently put the odds of reaching “minimal AGI” by 2028 at 50 percent. Read more.
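For builders curious what "budget-aware" might look like in practice, here is a minimal Python sketch of the idea described above. It is not Google's framework: the `BudgetTracker` class, the strategy names, and the 50 percent and 10 percent thresholds are all assumptions made up for illustration. The agent records what it spends and picks a more conservative strategy as its scarcest resource runs down.

```python
from dataclasses import dataclass


@dataclass
class BudgetTracker:
    """Hypothetical helper: tracks token and tool-call spend for one agent run."""
    max_tokens: int
    max_tool_calls: int
    tokens_used: int = 0
    tool_calls_used: int = 0

    def record(self, tokens: int = 0, tool_calls: int = 0) -> None:
        """Add the cost of the latest step to the running totals."""
        self.tokens_used += tokens
        self.tool_calls_used += tool_calls

    def remaining_fraction(self) -> float:
        """Fraction left of whichever budget is closest to exhausted, floored at 0."""
        tokens_left = 1 - self.tokens_used / self.max_tokens
        tools_left = 1 - self.tool_calls_used / self.max_tool_calls
        return max(0.0, min(tokens_left, tools_left))

    def strategy(self) -> str:
        """Pick a behavior from the remaining budget (thresholds are assumed)."""
        left = self.remaining_fraction()
        if left > 0.5:
            return "explore"      # plenty left: try alternative approaches
        if left > 0.1:
            return "focus"        # getting tight: pursue the best lead only
        return "answer_now"       # nearly out: stop calling tools and respond


budget = BudgetTracker(max_tokens=1000, max_tool_calls=10)
budget.record(tokens=600, tool_calls=2)
print(budget.strategy())
```

In a real agent loop, `record` would be called after every model response and tool call, and the returned strategy would be fed back into the prompt or the planner.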

$18-per-hour AI agent beats 9 out of 10 pro hackers in Stanford test

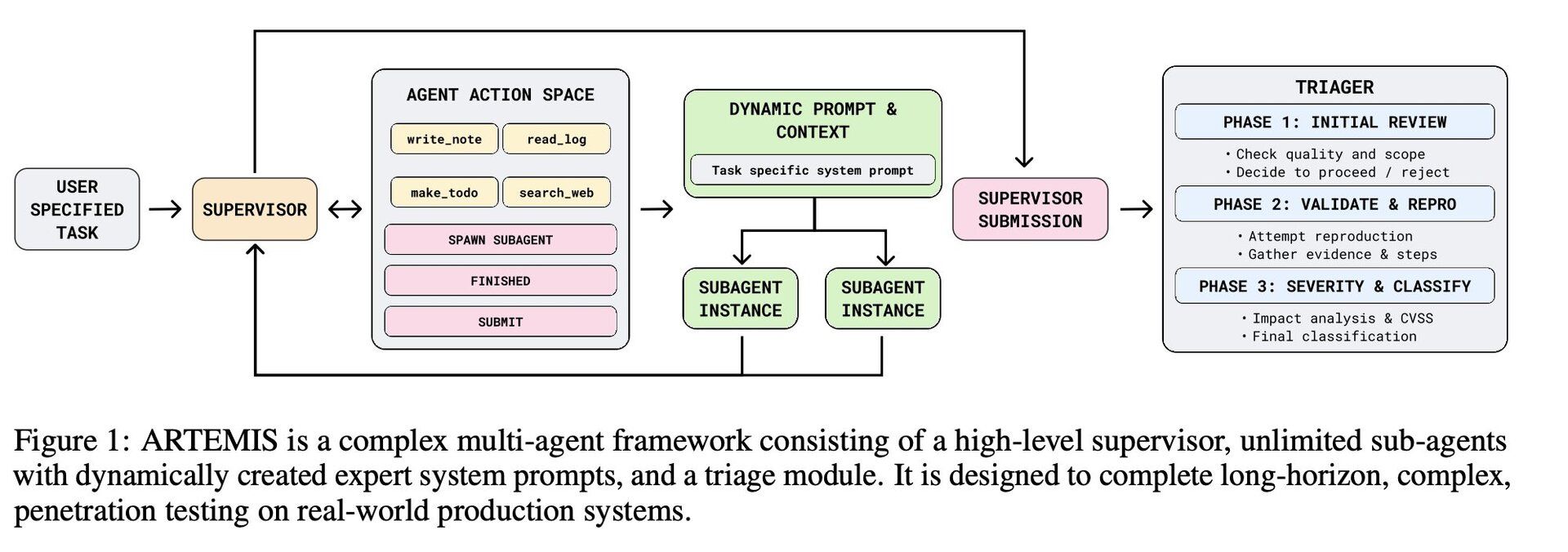

Stanford researchers put an AI agent, ARTEMIS, to the test against professional penetration testers and found it outperformed nine of ten humans while running at a fraction of the cost. Over a 16-hour period, ARTEMIS scanned roughly 8,000 devices, uncovering vulnerabilities that humans missed, including on older servers inaccessible through standard browsers. Unlike human testers, the agent could deploy sub-agents to investigate multiple targets simultaneously, giving it a scale and parallelism advantage.

Stanford tossed ARTEMIS into a live 8,000-host network—Unix, Windows, IoT, VPNs, Kerberos—the exact chaos real attackers face. | Screenshot grab: Robert Youssef on X

Within the first 10 hours, ARTEMIS discovered nine valid vulnerabilities, with 82% of its submissions holding up as valid. Its strengths lie in parsing code-like inputs and outputs, though it struggles with graphical interfaces and occasionally produces false positives. The study highlights how AI agents can drastically reduce the time, cost, and cognitive load of complex security tasks, even while exposing new challenges in verification and GUI-dependent testing. Read more.
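The parallelism advantage in this story comes down to a simple fan-out pattern, sketched below in Python. This is not ARTEMIS code: `investigate` is a hypothetical placeholder that does no scanning at all, and the target names are invented. The sketch only shows how a coordinator might hand one target to each sub-agent and collect the reports.

```python
from concurrent.futures import ThreadPoolExecutor


def investigate(target: str) -> dict:
    """Hypothetical sub-agent stub: a real one would run its own tool loop."""
    return {"target": target, "findings": []}


def fan_out(targets: list[str], max_workers: int = 4) -> list[dict]:
    """Run one sub-agent per target concurrently; reports keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(investigate, targets))


reports = fan_out(["host-a", "host-b", "host-c"])
print([report["target"] for report in reports])
```

A human tester works through hosts one at a time; the coordinator here is limited only by `max_workers`, which is where the scale advantage comes from.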

Runway drops Gen-4.5 with native audio edits and scene-wide ripple changes

Runway has rolled out Gen-4.5 with major upgrades and unveiled its first General World Model, GWM-1. The new video model adds native audio generation and editing, plus multi-shot editing that applies changes across an entire scene. GWM-1 builds an internal simulation of environments in real time, allowing interactive control via camera movements, robot commands, or audio inputs.

The system ships in three versions: GWM Worlds for explorable environments, GWM Avatars for lifelike speaking characters, and GWM Robotics for synthetic robot training data, with plans to eventually unify these capabilities. Runway joins a growing field of AI labs, including DeepMind, Yann LeCun’s startup, and Fei-Fei Li’s World Labs, that are racing to build models that understand and simulate the physical world beyond text. Read more.

5 new AI-powered tools from around the web

Latest AI Research Papers

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!