Good morning. It’s Wednesday, April 9th.

On this day in tech history: 1979: The first fully functional Motorola DynaTAC prototype, a precursor to the modern mobile phone, was demonstrated.

In today’s email:

Gemini 2.5 Pro

Deep Analysis of Llama 4

MS Copilot Turns Vision into Context

New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

Ready to level up your work with AI?

HubSpot’s free guide to using ChatGPT at work is your new cheat code for going from working hard to hardly working.

HubSpot’s guide will teach you:

How to prompt like a pro

How to integrate AI in your personal workflow

100+ useful prompt ideas

All to help you unleash the power of AI for a more efficient, impactful professional life.

Today’s trending AI news stories

Google Rolls Out Deep Research and New AI Tools with Gemini 2.5 Pro

Google has launched Deep Research for Gemini Advanced users on the experimental Gemini 2.5 Pro model, now available on the web, Android, and iOS. Designed as a personal research assistant, it excels at reasoning and structured synthesis, and Google reports that users preferred it over competing research tools by a 2-to-1 margin. Features like Audio Overviews turn reports into podcast-style narration, making insights more portable. Google positions it not as a chatbot but as a full-stack research tool that fits directly into real-world knowledge workflows.

Alongside this, Google Research introduced a Geospatial Reasoning framework aimed at improving crisis response and urban planning, built on remote sensing models trained on satellite imagery. Separately, Google Cloud has deepened its collaboration with the Allen Institute for AI (Ai2), making open-source models like OLMo 2 available to sectors that demand transparency. Google also previewed its AI-powered 3D remastering of The Wizard of Oz for Sphere’s 16K immersive screen, pushing cinematic boundaries.

On the AI tools front, Google has upgraded both AI Mode and NotebookLM. AI Mode now supports visual recognition, so users can upload images and get contextual insights and suggestions. NotebookLM now lets users pull live web content into their notes, so its generated outputs can draw on external sources. Together, these updates bring Google’s AI into more real-world applications across its platforms. Read more.

Meta's Llama 4 Models Impress in Some Areas, But Face Criticism Over Long-Context Tasks

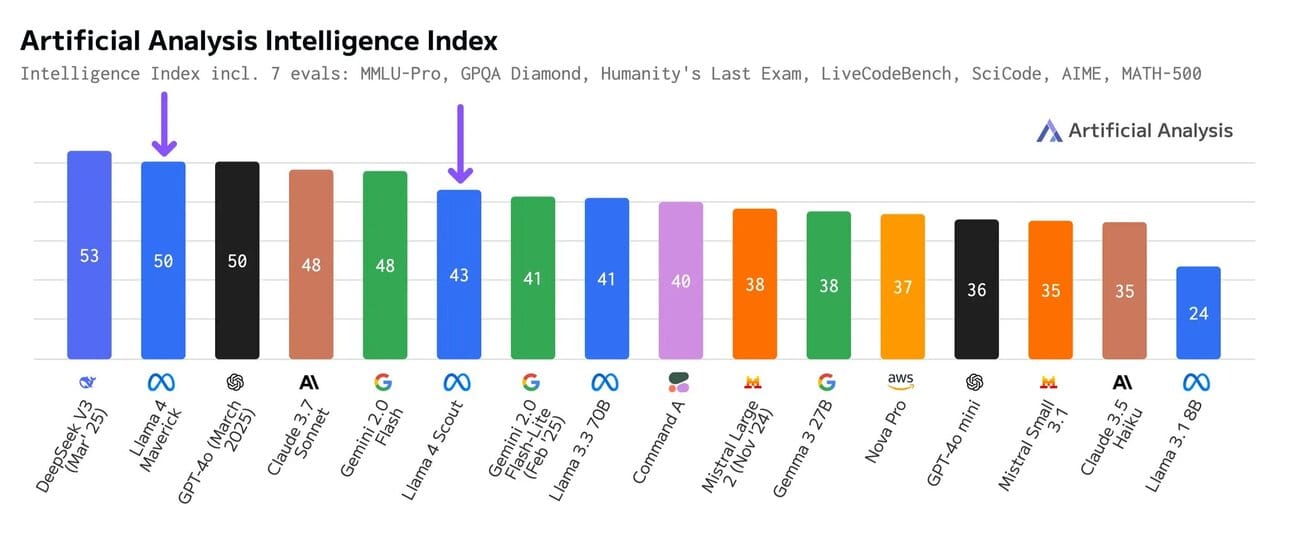

Image: Artificial Analysis

Meta’s submission of a customized Llama 4 model to LM Arena has raised questions about transparency. The "Llama-4-Maverick-03-26-Experimental" version was fine-tuned for human preference, but Meta did not make this clear at first. Meta’s VP of generative AI, Ahmad Al-Dahle, has denied rumors that the company artificially boosted its Llama 4 models’ benchmark scores.

In response, LM Arena publicly released more than 2,000 head-to-head battle results, showing how style and tone swayed evaluations. It has also revised its leaderboard policies to reinforce its commitment to fair, reproducible evaluations. Artificial Analysis has likewise updated its Intelligence Index scores for Llama 4 Scout and Maverick after adjusting for discrepancies with Meta’s claimed MMLU Pro and GPQA Diamond results.

On the performance front, Meta’s Llama 4 models, Maverick and Scout, posted strong scores in reasoning, coding, and mathematics, outpacing rivals like Claude 3.7 and GPT-4o-mini. Maverick scored 49 points and Scout 36. On long-context tasks, however, both models hit a wall: Maverick managed just 28.1%, and Scout trailed further at 15.6%. Meta says tweaks and optimizations are ongoing as the models are gradually rolled out.

Meanwhile, NVIDIA has turbocharged Llama 4’s inference on its Blackwell B200 GPUs, pushing the models to over 40,000 tokens per second. With a multimodal, multilingual architecture and TensorRT-LLM optimization, these models now handle tasks like document summarization and image-text comprehension with impressive speed. Read more.

Microsoft’s Copilot Turns Vision into Context

Microsoft has extended Copilot Vision from the web to mobile. Now available in the Copilot app for iPhone users with a Copilot Pro subscription, the feature turns a phone’s camera into a real-time visual search tool. Point your camera, and Copilot interprets what it sees, from plant health to product specs to interior design ideas.

The tech runs inside Voice mode of the Copilot app and only activates with permission. Powered by OpenAI’s latest models, it’s a cognitive overlay for the physical world. Microsoft’s broader push is clear: collapse the distance between seeing and knowing. $20/month buys you early access and premium speeds. Read more.

4 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

One more thing…

You’ve heard the hype. It’s time for results.

After two years of siloed experiments, proofs of concept that fail to scale, and disappointing ROI, most enterprises are stuck. AI isn’t transforming their organizations; it’s adding complexity, friction, and frustration.

But Writer customers are seeing a positive impact across their companies. Our end-to-end approach is delivering adoption and ROI at scale. Now, we’re applying that same platform and technology to build agentic AI that actually works for every enterprise.

This isn’t just another hype train that overpromises and underdelivers. It’s the AI you’ve been waiting for, and it’s going to change the way enterprises operate. Be among the first to see end-to-end agentic AI in action. Join us for a live product release on April 10 at 2pm ET (11am PT).

Can’t make it live? No worries: register anyway and we’ll send you the recording!

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!