Good morning. It’s Wednesday, May 28th.

On this day in tech history: In 2014, Apple announced its $3 billion acquisition of Beats Electronics, folding the company’s audio technology and streaming service into Apple’s ecosystem. The move reshaped the consumer tech landscape, paved the way for the launch of Apple Music, and marked Apple’s first major push into subscription services.

In today’s email:

Google’s Thought Summaries

Anthropic’s Claude With Voice

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

In partnership with Atla

Why AI Agents Fail & How to Fix Them

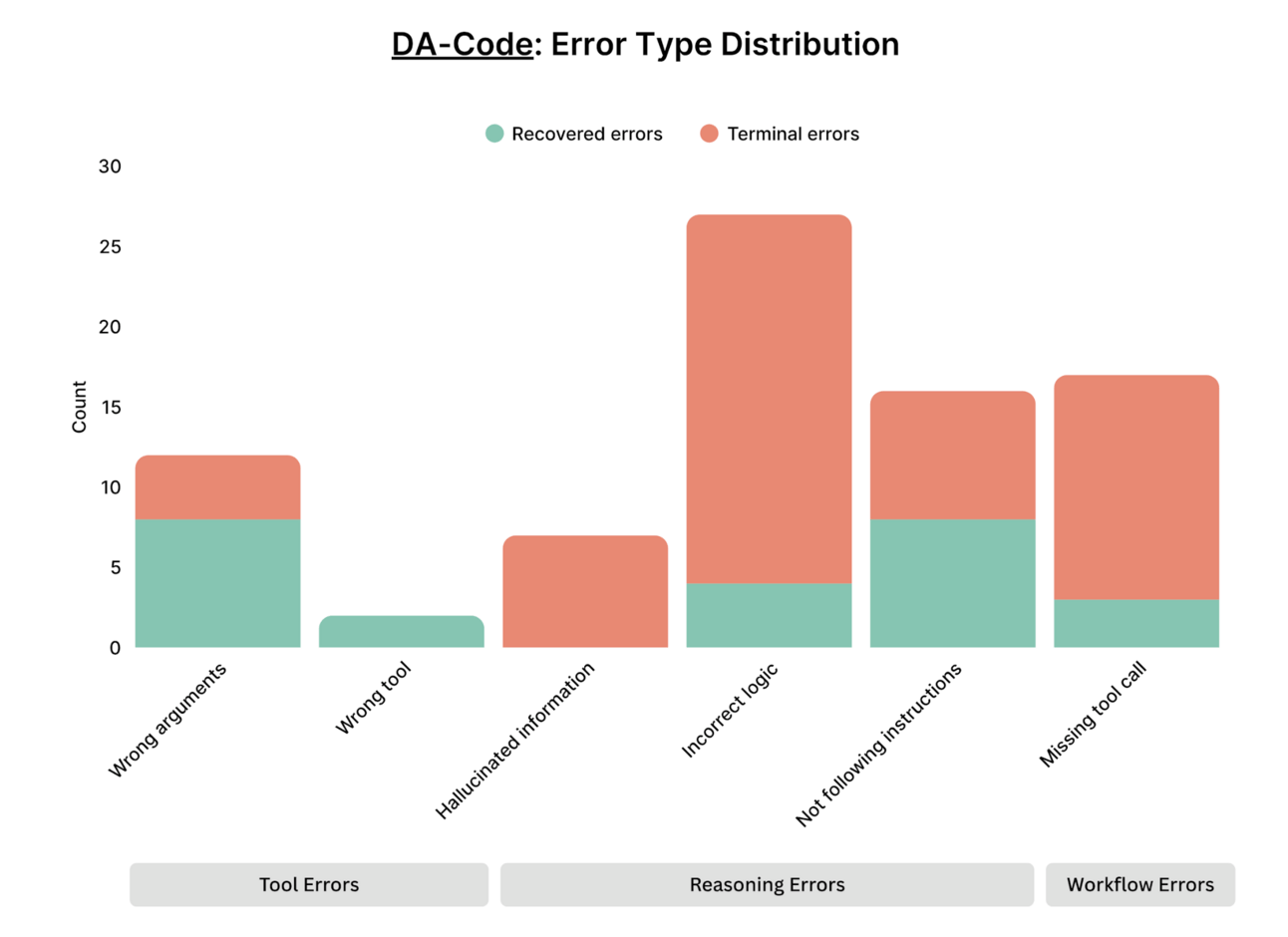

A new study explores why AI agents fail, especially in coding tasks, and what we can do about it. Researchers at Atla analyzed traces from DA-Code, a benchmark designed to assess LLMs on agent-based data science tasks, and found that reasoning errors like “incorrect logic” dominate task failures.

These errors often slip past detection, causing hours of manual debugging.

To tackle this, the team built a tool that automatically identifies step-level errors using a robust taxonomy of error types that has also been tested on customer support agents.

Building agents? Get free early access to the tool and find out why your agents fail.

The researchers also piloted a feedback loop that boosts agent task completion by up to 30%, proving that targeted critiques can dramatically improve performance, even without re-prompting. Read more.

Today’s trending AI news stories

Google’s ‘Thought Summaries’ Let Machines Do The Thinking For You

Google is giving developers deeper visibility into the model’s reasoning process. In the Gemini API, “thought summaries” now provide concise, human-readable glimpses into the model’s internal reasoning, generated by a secondary summarization model that trims down the full chain of thought without altering output.

The feature is free, optional, and takes just a line of code to activate, assuming you’re already using “thinking budgets.” It’s still experimental, with plans to support custom styles. Billing is still based on the full thought tokens the model generates, not on the summaries themselves.
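For readers who want to see what that one line looks like, here is a minimal sketch of a request body for the Gemini REST API plus a helper that separates summary parts from answer parts. The field names (`thinkingConfig`, `includeThoughts`, `thinkingBudget`, and the `thought` flag on response parts) reflect our understanding of the public API and should be checked against Google’s current documentation. The helper names `build_request` and `split_parts` are hypothetical, and nothing here calls the network.

```python
def build_request(prompt: str, thinking_budget: int = 1024) -> dict:
    """Build a generateContent-style request body that asks for thought summaries.

    Field names are assumptions based on the public Gemini REST API.
    """
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {
                "thinkingBudget": thinking_budget,
                "includeThoughts": True,  # the one-line switch for summaries
            }
        },
    }


def split_parts(response: dict) -> tuple[list[str], list[str]]:
    """Separate thought-summary text from answer text in a response dict.

    Assumes summary parts carry a truthy "thought" flag; parts without
    a "text" field are skipped.
    """
    thoughts: list[str] = []
    answer: list[str] = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            text = part.get("text")
            if text is None:
                continue
            if part.get("thought"):
                thoughts.append(text)
            else:
                answer.append(text)
    return thoughts, answer
```

Keeping the summaries in a separate list makes it easy to log them for debugging while showing users only the final answer.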

Google for Developers also dropped a new video for Gemma 3n, a mobile-optimized model built for on-device use with support for text, audio, and image inputs. It’s now available for early testing via Google AI Studio and AI Edge.

Adding to its technical toolkit, Google quietly launched LMEval, an open-source benchmarking suite for language and multimodal models. Built on the LiteLLM framework, LMEval smooths over the friction of comparing models from providers like OpenAI, Anthropic, Ollama, Hugging Face, and Google itself. It supports a wide range of input types, including code, images, and freeform text, and features safety checks that flag evasive or risky answers. Results are encrypted and locally stored, then visualized via LMEvalboard, a dashboard that offers side-by-side comparisons, radar charts, and granular performance breakdowns. Incremental testing means only new evaluations are rerun, saving time and compute. The full suite is available now on GitHub.
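The idea behind incremental testing can be sketched in a few lines. This is a generic illustration of caching results per (model, prompt) pair, not LMEval’s actual API: the names `eval_key` and `run_incremental`, the in-memory `cache` dictionary, and the `evaluate` callback are all hypothetical.

```python
import hashlib
import json
from typing import Callable


def eval_key(model: str, prompt: str) -> str:
    """Derive a stable cache key for one (model, prompt) evaluation."""
    payload = json.dumps([model, prompt]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_incremental(
    models: list[str],
    prompts: list[str],
    evaluate: Callable[[str, str], float],
    cache: dict[str, float],
) -> int:
    """Run only the evaluations missing from the cache.

    Returns the number of evaluations actually executed on this call.
    """
    executed = 0
    for model in models:
        for prompt in prompts:
            key = eval_key(model, prompt)
            if key in cache:
                continue  # result already stored from an earlier run
            cache[key] = evaluate(model, prompt)
            executed += 1
    return executed
```

With this shape, adding one new model to an existing benchmark only costs that model’s evaluations; everything already in the cache is skipped.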

Topping it off, Sundar Pichai framed AI as “bigger than the internet,” pointing to next-gen interfaces like Android XR smart glasses as early hints of where this all leads. That optimism is echoed in public interest: DeepMind’s site traffic jumped to over 800,000 daily visits following the debut of Veo 3, Google’s high-end video generation model launched at I/O 2025.

Veo 3 has expanded rapidly, launching in 71 additional countries shortly after its debut at I/O 2025. Pro subscribers can experiment with a 10-generation trial on the web, while Ultra subscribers enjoy up to 125 monthly generations in Flow, a boost from 83, with daily refreshes. Users can access Veo 3 through Gemini’s Video chip or via Flow’s specialized filmmaking environment, depending on subscription level. A demo video titled The Prompt Theory shows four continuous minutes of Veo in action.

Anthropic Powers ‘Claude with Voice’, Bug Fixing and Smart Controls

Anthropic has advanced Claude’s functionality by integrating conversational voice interaction with deep technical improvements and carefully designed behavioral controls.

The new voice mode, now available on iOS and Android, allows users to interact with their Google Workspace data—Docs, Drive, Calendar, and Gmail—through natural speech, with Claude delivering concise summaries and reading content aloud in distinct voice profiles such as Buttery, Airy, and Mellow. Though limited to English and mobile apps for now, free users can access real-time web search for up-to-date responses, while Pro and Max subscribers unlock enhanced Workspace integration and richer search capabilities.

Beyond interface upgrades, Claude Opus 4 showcased a leap in AI-assisted debugging by pinpointing a four-year-old shader bug hidden within 60,000 lines of C++ code. In just 30 focused prompts, it exposed an overlooked architectural flaw that had eluded human engineers and prior AI models, demonstrating a new dimension of code analysis that addresses complex design oversights rather than simple errors.

Underpinning these advances, detailed but partly hidden system prompts shape Claude 4’s behavior, suppressing flattery, limiting list use, enforcing strict copyright rules, and guiding the model to provide emotional support without encouraging harmful actions. Independent research into these prompts reveals Anthropic’s intricate balancing act between utility, safety, and transparency, underscoring the company’s nuanced governance of model behavior while leaving room for broader disclosure.

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!