Good morning. It’s Friday, February 23rd.

Did you know? Sam Altman has been revealed as one of Reddit's largest shareholders.

In today’s email:

AI Advancements and Innovations

AI in Industry and Economy

AI in Business and Investment

5 New AI Tools

Latest AI Research Papers

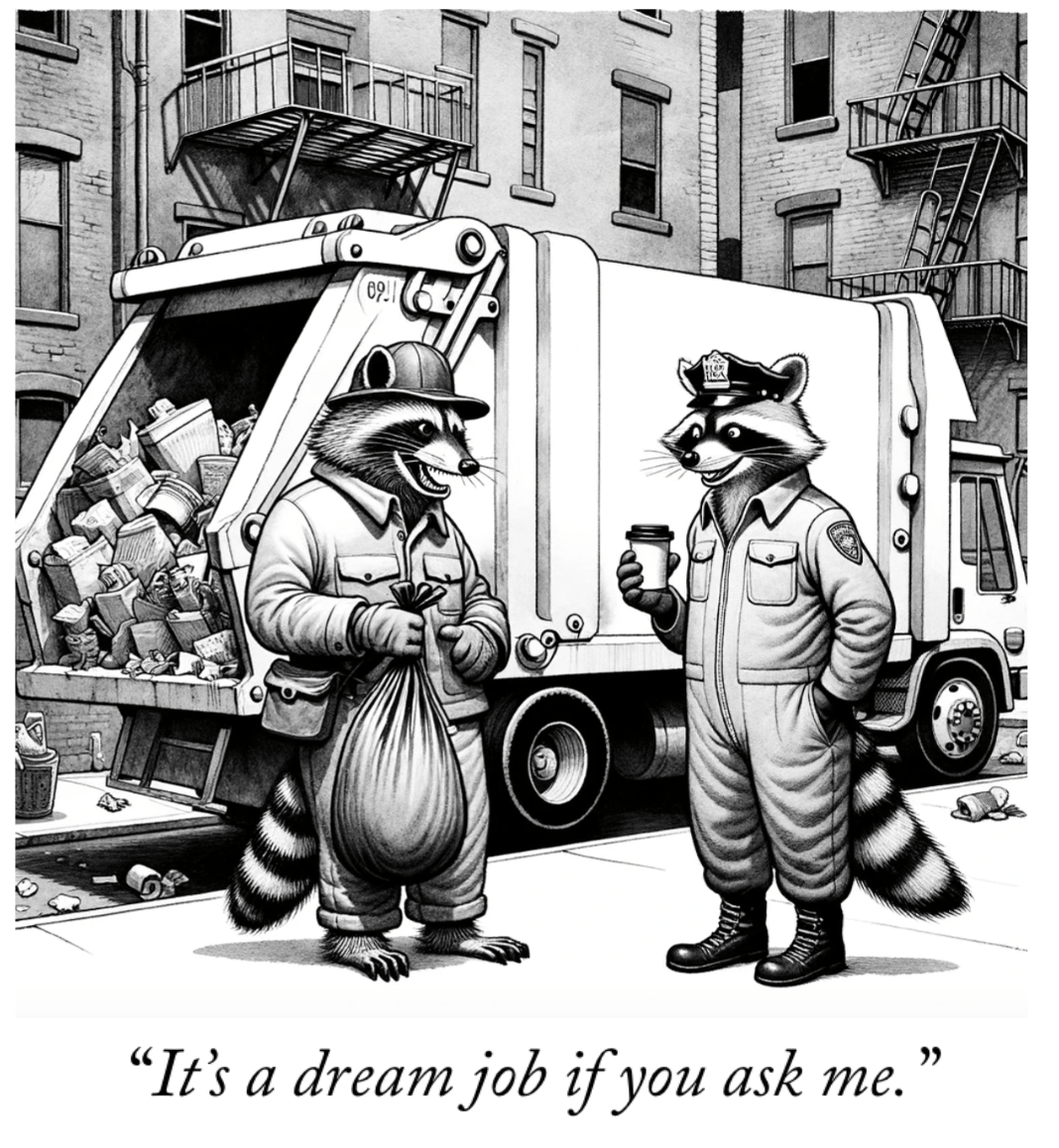

ChatGPT Creates Comics

You read. We listen. Let us know what you think by replying to this email.

Today’s trending AI news stories

AI Advancements and Innovations

> Google AI is developing ScreenAI, a new vision-language model for understanding user interfaces (UIs) and infographics. By combining visual analysis with language understanding, ScreenAI achieves strong results on a range of benchmarks while being more efficient than larger models. The model introduces a novel textual representation of UIs and uses large language models to generate its training data. Google AI will also release three new datasets to support further research. The work is a notable step forward in machine understanding of visual data and could improve how users interact with complex digital content.

> Google has introduced Gemma, a family of lightweight, open AI models designed to promote responsible AI development. Built from the same research and technology behind Google's larger Gemini models, Gemma comes in two sizes (2B and 7B parameters), each available in pre-trained and instruction-tuned versions. To support safer use, the release includes a Responsible Generative AI Toolkit with safety classification tools and debugging aids. Gemma is optimized for Google Cloud and NVIDIA GPUs and works with several frameworks, including JAX, PyTorch, and TensorFlow through Keras 3.0. Google says Gemma has undergone thorough safety and reliability testing in line with its AI Principles. Developers and researchers can access Gemma for free through Kaggle and Colab notebooks, as well as with Google Cloud credits. Read the technical report.

> Stability AI has announced Stable Diffusion 3, the next version of its text-to-image model, initially available as an early preview. The company promises better image quality and performance than previous versions, and says the model's new architecture is both faster and more efficient. CEO Emad Mostaque describes the model as more versatile and hints that it could eventually be used beyond still images, for example in video generation.

> OpenAI has updated its GPT Store with user ratings and expanded builder profiles. Users can now rate the custom GPTs on the platform, giving feedback to both OpenAI and creators, and developers can add more information to their profiles, including social media links. The update follows recent problems with ChatGPT and reflects the company's efforts to improve transparency. OpenAI has not yet launched a program to share revenue with developers who list their GPTs in the store.

> Suno AI, the AI music and song generator, has released V3 Alpha in limited access for testing, giving paying subscribers 300 free credits to try it. Users can switch between V2 and V3 Alpha on the company's website and share feedback to shape the final version. Suno acknowledges V3 Alpha's limitations, including difficulty following complex prompts, occasional mixing issues, and inaccuracies, especially with short prompts. Even so, the new version supports longer songs. Suno says user experimentation and feedback are crucial to V3 Alpha's development ahead of a full release.

AI in Industry and Economy

> Nvidia delivered strong fourth-quarter results, beating Wall Street estimates as revenue grew 265% year over year. Adjusted earnings per share reached $5.16 on revenue of $22.10 billion, and the company forecasts about $24.0 billion in sales for the current quarter. CEO Jensen Huang cited the surge in generative AI and the growing use of GPU accelerators as key growth drivers. While acknowledging supply constraints, particularly for next-generation chips, Nvidia remains optimistic about its market position thanks to diverse demand for its products in sectors such as healthcare and enterprise software.

> Google has acknowledged that its Gemini chatbot's image generation is "missing the mark" and has temporarily paused its ability to generate images of people. The decision followed user criticism of historically inaccurate images, such as racially diverse depictions of historical figures, and complaints that the model often declined to depict white people. Google has committed to fixing these issues before reintroducing the feature and says it remains dedicated to building more inclusive and accurate AI.

AI in Business and Investment

> Microsoft plans to bring OpenAI's Sora text-to-video model to Copilot, its AI assistant. Mikhail Parakhin, who heads Microsoft's advertising and web services division, said on X (formerly Twitter) that Sora would come to Copilot eventually but gave no firm timeline. Sora can create detailed videos from text descriptions, which would add new capabilities to Copilot. The integration date remains uncertain as Microsoft works through technical hurdles, but the company appears committed to expanding Copilot with advanced AI features.

> Reddit and Google have struck an AI content licensing deal reportedly worth about $60 million per year, Reddit's first major agreement of this kind with an AI company. The deal lets Google use Reddit's content to train its AI models, in line with Google's broader effort to improve its services.

> Meanwhile, Reddit's IPO filing shows that OpenAI CEO Sam Altman is the company's third-largest shareholder, with an 8.7% stake. Reddit reported $804 million in revenue for 2023, mostly from advertising, and a net loss of $90.8 million. The filing stresses the role of AI in Reddit's future growth, highlighting the value of its content for training AI models. Less than a year ago, Reddit began charging third-party apps for access to its data API, a change that forced several popular apps to shut down.

5 new AI-powered tools from around the web

Swizzle.co is a low-code, multimodal tool for web app development. Build apps quickly using natural language, visual tools, or code.

Flipner is an AI-powered writing assistant that transforms notes into structured drafts or polished texts, offering multiple styles and translation into 50+ languages.

Dorik is a no-code website builder with drag-and-drop functionality, customizable templates, and collaboration features.

Hoji AI automates code reviews, delivering fast feedback while keeping your code private. Ideal for developers and teams looking to streamline their workflows.

LLM List is a comprehensive directory of large language models. It offers detailed information and comparisons to help select suitable models for text generation, translation, and data analysis.

Latest AI Research Papers

arXiv is a free online library where researchers share pre-publication papers.

LongRoPE introduces a method for extending the context window of large language models (LLMs) to 2048k tokens while preserving performance at the original, shorter context length. It tackles the obstacles that have limited previous extensions, including high fine-tuning costs, the scarcity of long training texts, and the catastrophic values introduced by new token positions. Using non-uniform positional interpolation, it rescales the rotary position embedding (RoPE), which is crucial for maintaining model performance. Its progressive extension strategy first fine-tunes the model at a 256k context length and then interpolates positions again to reach 2048k, avoiding excessive training on extremely long texts. An empirical analysis reveals key non-uniformities in RoPE, which inform an evolutionary search for optimal rescale factors. Experiments across a range of tasks show that LongRoPE-extended models maintain low perplexity and high accuracy. Because it keeps the original model architecture with only minor changes to the positional embedding, LongRoPE can be applied to different LLMs.
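For readers curious about the mechanics, here is a minimal Python/NumPy sketch of how RoPE rotation angles are computed and how interpolation can rescale them per frequency. It is our own illustration, not LongRoPE's code: the function names, the interleaved-pair convention, and the rescale factors are hypothetical, and LongRoPE finds its factors through evolutionary search rather than fixing them by hand.

```python
import numpy as np


def rope_angles(positions, dim, base=10000.0, rescale=None, keep_first=0):
    """Return RoPE rotation angles with shape (len(positions), dim // 2).

    Frequency i is theta_i = base ** (-2i / dim). If `rescale` is given
    (hypothetical per-frequency factors, length dim // 2), frequency i is
    divided by rescale[i], which is equivalent to interpolating positions
    for that dimension; positions below `keep_first` keep the original
    frequencies.
    """
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)
    pos = np.asarray(positions, dtype=np.float64)[:, None]
    angles = pos * inv_freq[None, :]
    if rescale is not None:
        scaled = pos * (inv_freq / np.asarray(rescale, dtype=np.float64))[None, :]
        angles = np.where(pos < keep_first, angles, scaled)
    return angles


def apply_rope(x, angles):
    """Rotate each interleaved pair (x[2i], x[2i+1]) by angles[..., i]."""
    x_even, x_odd = x[..., 0::2], x[..., 1::2]
    cos, sin = np.cos(angles), np.sin(angles)
    out = np.empty_like(x, dtype=np.float64)
    out[..., 0::2] = x_even * cos - x_odd * sin
    out[..., 1::2] = x_even * sin + x_odd * cos
    return out


# Example: an 8-dimensional head over 16 positions. The factors are
# placeholders growing from 1x (highest frequency) to 8x (lowest frequency).
positions = np.arange(16)
factors = np.linspace(1.0, 8.0, 4)
angles = rope_angles(positions, dim=8, rescale=factors, keep_first=4)
query = np.random.default_rng(0).normal(size=(16, 8))
rotated = apply_rope(query, angles)
print(rotated.shape)
```

Passing the same factor for every frequency reduces to plain linear interpolation (every position divided by a constant). Varying the factor by dimension and leaving the first few positions unscaled roughly correspond to the two kinds of non-uniformity the paper exploits.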

USER-LLM contextualizes large language models (LLMs) with user embeddings distilled from diverse user interactions, making the models more adaptable to user context. Using cross-attention and soft-prompting, it generates personalized responses and shows superior performance on user-centric tasks across the MovieLens, Amazon Review, and Google Local Review datasets. Compared with methods that feed user history to the model as a text prompt, it is more efficient while remaining just as effective. Adding Perceiver layers improves computational efficiency further. USER-LLM's versatility across tasks highlights its potential for user modeling and personalization, and it opens avenues for further research on refining user-embedding generation and improving generalization.

This paper from Google Research investigates how deep reinforcement learning (RL) agents under-utilize their network parameters. Applying gradual magnitude pruning, which progressively removes the smallest-magnitude weights during training, the study shows that agents can make far more effective use of their parameters. The pruned networks deliver significant performance improvements over traditional dense networks, and their performance scales with network size. Because the gains hold across a variety of agents and training regimes, the technique appears broadly useful. Beyond network optimization, the findings have implications for RL methods more broadly and for hardware efficiency, potentially enabling efficient deployment on edge devices.
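The core of gradual magnitude pruning fits in a few lines. This Python/NumPy sketch assumes the cubic sparsity schedule commonly used with the technique; the function names, step counts, and 90% target are illustrative choices, not the paper's settings. At each pruning step, the target sparsity ramps up and the smallest-magnitude weights are zeroed until that fraction is reached.

```python
import numpy as np


def target_sparsity(step, start, end, final_sparsity, initial_sparsity=0.0):
    """Cubic ramp from initial_sparsity (at `start`) to final_sparsity (at `end`)."""
    if step <= start:
        return initial_sparsity
    if step >= end:
        return final_sparsity
    progress = (step - start) / (end - start)
    return final_sparsity + (initial_sparsity - final_sparsity) * (1.0 - progress) ** 3


def magnitude_mask(weights, sparsity):
    """0/1 mask that zeroes the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(round(sparsity * weights.size))
    mask = np.ones(weights.size, dtype=weights.dtype)
    if k > 0:
        order = np.argsort(np.abs(weights).ravel(), kind="stable")
        mask[order[:k]] = 0
    return mask.reshape(weights.shape)


# Toy run on one 64x64 weight matrix: prune gradually between steps 200 and 800
# up to a hypothetical 90% sparsity. In a real agent, an optimizer update would
# happen between pruning steps and the mask would be re-applied after it.
rng = np.random.default_rng(0)
weights = rng.normal(size=(64, 64))
for step in range(0, 1001, 100):
    sparsity = target_sparsity(step, start=200, end=800, final_sparsity=0.9)
    weights = weights * magnitude_mask(weights, sparsity)
print(f"final sparsity: {np.mean(weights == 0):.3f}")
```

The cubic schedule prunes aggressively early, when many weights are redundant, and slows down near the target so the network has time to recover between steps.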

The Aria Everyday Activities (AEA) Dataset, from Meta Reality Labs Research, is a rich egocentric multimodal dataset recorded with Project Aria glasses. It contains 143 daily activity sequences captured in diverse indoor locations, along with data such as 3D trajectories, scene point clouds, eye gaze vectors, and speech transcriptions. The release provides updated data formats, machine perception outputs, and open-source tools, and it supports applications such as neural scene reconstruction and prompted segmentation. By addressing the need for comprehensive datasets in egocentric AI and 3D multimodal understanding, AEA enables research on real-world, longitudinal activities with spatial-temporal context, making it a valuable resource for AI and AR research.

ChatGPT Creates Comics

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, apply here.