Good morning. It’s Monday, December 22nd.

On this day in tech history: In 1960, Bernard Widrow and Marcian Hoff introduced ADALINE (Adaptive Linear Neuron), a single-layer network whose weights are adjusted by the LMS (least mean squares) algorithm, a form of gradient descent. Built on analog hardware and applied to noise cancellation and pattern classification, it quietly pioneered online learning and adaptive filtering, both foundational to signal processing and modern neural nets.
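For the curious, here is a minimal sketch of the LMS (Widrow-Hoff) update in plain Python. The toy dataset (logical AND with +1/-1 encoding), the learning rate, and the function names are our own assumptions for illustration, not details from the original ADALINE hardware.

```python
def lms_train(samples, targets, lr=0.05, epochs=50):
    """Train one linear unit with the LMS rule (online gradient descent)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, d in zip(samples, targets):
            # The error is measured on the raw linear output, before thresholding.
            y = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = d - y
            # Nudge each weight along the negative gradient of the squared error.
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


def predict(w, b, x):
    """Threshold the linear output to get a +1/-1 class label."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Hypothetical toy task: logical AND with bipolar inputs and targets.
X = [(-1, -1), (-1, 1), (1, -1), (1, 1)]
D = [-1, -1, -1, 1]

w, b = lms_train(X, D)
preds = [predict(w, b, x) for x in X]
print(w, b, preds)
```

Because the weights update after every sample rather than after a full pass, the same loop works on streaming data, which is why the rule became a staple of adaptive filtering.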

In today’s email:

ChatGPT gets tone sliders, Altman gives public CEO role a ‘hard pass’

Anthropic pairs Opus 4.5 gains with ‘Bloom’ safety framework

Google’s A2UI turns AI into instant app makers

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

In partnership with Public*

Generated Assets by Public.com

Turn any idea into an investable index with AI.

Generated Assets are like your very own ETF with infinite possibilities. Get started with literally any thesis—whether it’s “Companies with large user bases but low ARPU” or “Top-performing space stocks with a low debt-to-equity ratio.”

Today’s trending AI news stories

ChatGPT gets tone sliders, Altman gives public CEO role a ‘hard pass’

OpenAI just dropped finer controls for ChatGPT. Now you can dial warmth, enthusiasm, emojis, and even formatting up or down. No more overly peppy responses unless you want them. These tweaks layer onto custom instructions at generation time, leaving the model’s core reasoning untouched. Pure user control over presentation.

The company is also doubling down on transparency. New research shows that peeking into chain-of-thought reasoning often catches misaligned or risky intent long before it hits the final output. They’re pushing “Chain-of-Thought monitorability” as a formal safety metric, but they’re also upfront about its limits: advanced models can already obfuscate or fake their thinking, making true oversight a moving target.

Execution is tightening. Compute margins on paid tiers reportedly climbed to ~70% by late 2025, fueled by smarter inference and growing enterprise deals. Losses persist from massive infrastructure spend, but the path to profitability is clearing.

To fund next-scale models and data centers, OpenAI is quietly lining up a round that could raise $100B+ and value the company north of $800B. Altman stays blunt, saying he’s ‘0% excited’ about ever running a public company, but IPO talks point to a possible 2027 listing. Read more.

5-hour autonomy unlocked: Anthropic pairs Opus 4.5 gains with ‘Bloom’ safety framework

Anthropic’s Claude Opus 4.5 just flexed serious endurance. METR clocked it at a 50% success horizon of nearly 5 hours on complex, long-running tasks, meaning it succeeds about half the time on tasks that take a human roughly that long. That’s the longest horizon on record. Push for 80% reliability, though, and it drops to 27 minutes, similar to rivals. METR notes the extreme upper estimate of 20+ hours is likely noise from limited long-task samples. The small sample size should keep the hype in check, but it’s becoming clear that long-horizon agency is leveling up.

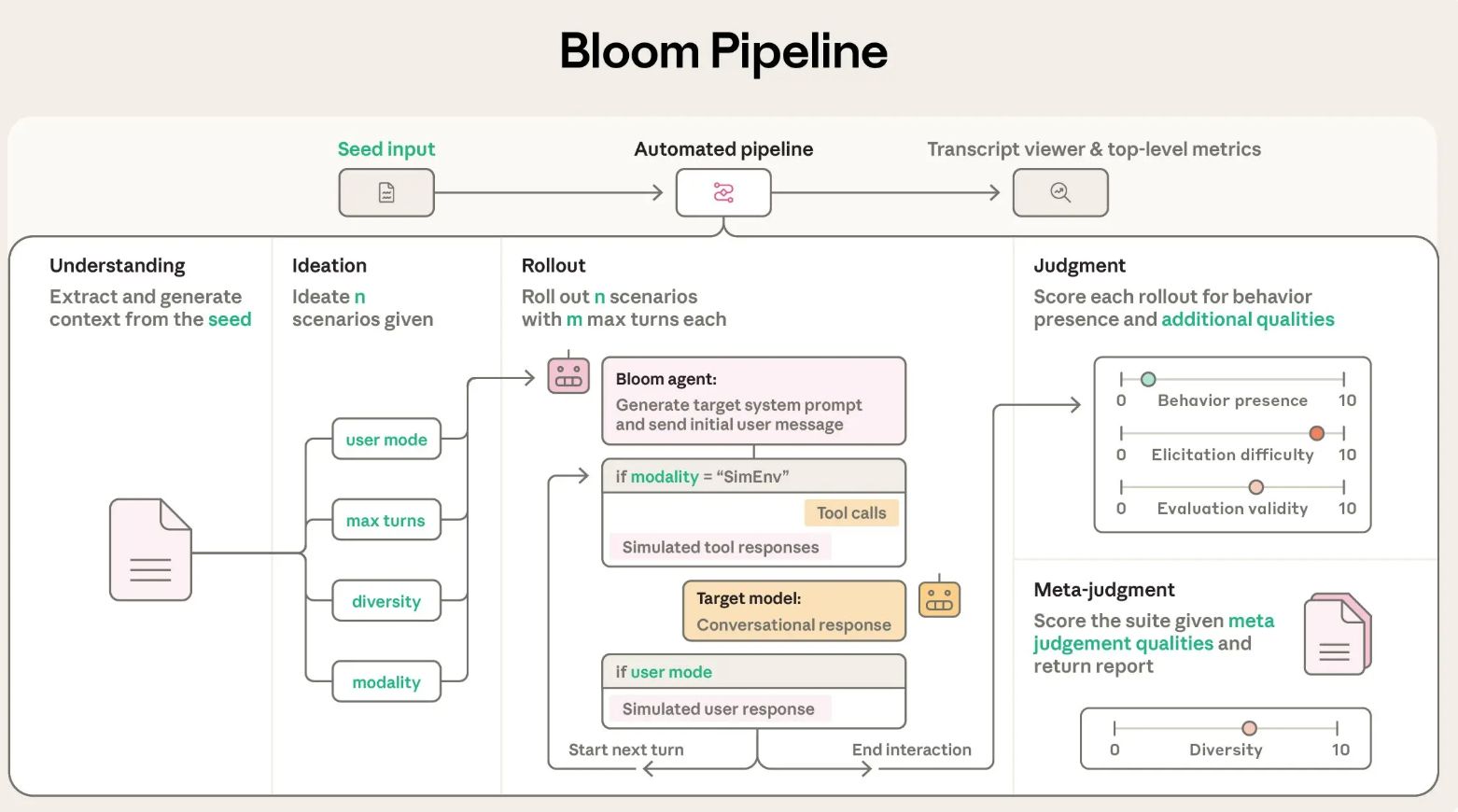

To keep pace with that power, Anthropic released Bloom, an open-source framework that automates behavioral evaluations for AI. Starting from a single behavior specification, Bloom generates reproducible evaluation suites through a four-stage agentic pipeline: understanding, ideation, rollout, and judgment.

Image: Anthropic

Bloom uses LiteLLM for unified access to Anthropic and OpenAI models, and its Weights & Biases integration supports large-scale experiment tracking. Validations show Bloom reliably flags misaligned behaviors and correlates strongly with human judgments (Spearman correlation up to 0.86), complementing Anthropic’s Petri tool with behavior-specific, scalable audits.
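To make the Spearman figure concrete, here is a small sketch of how a rank correlation between an automated judge and human raters can be computed. The scores below are made up for illustration and the helper names are ours; this is not Bloom's code or data.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def spearman(a, b):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(a), average_ranks(b))


# Hypothetical scores for six transcripts (illustrative only).
judge_scores = [2, 7, 4, 9, 5, 1]
human_scores = [1, 8, 5, 9, 3, 2]

rho = spearman(judge_scores, human_scores)
print(round(rho, 3))
```

Spearman only cares about ordering, so a judge can use a different scale than the human raters and still score well, as long as it ranks transcripts the same way.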

Together, Opus 4.5 and Bloom demonstrate Anthropic’s push for high-capacity AI and rigorous alignment at scale. Read more.

Google’s A2UI turns AI into instant app makers

Google's open standard lets AI agents build user interfaces on the fly. Google recently launched A2UI, an open protocol for agents to spin up dynamic, secure UIs in real time. Instead of shipping HTML or raw code, the agent sends clean JSON that declares only trusted components (buttons, forms, pickers). The host renders them natively, locking in design, security, and cross-platform flow. Platform-agnostic and compatible with multi-agent orchestration, A2UI is integrated into Gemini, the Opal mini-app platform, and the GenUI SDK for Flutter, with partner support from AG UI and CopilotKit.

Instead of ready-made HTML, the server streams JSON data that the client turns into native UI elements using a local widget catalog. | Image: Google
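Here is a rough sketch of the idea: the client keeps a catalog of trusted widgets and builds only those, ignoring anything else the agent asks for. The message shape, field names, and catalog entries are hypothetical and chosen for illustration; they are not the actual A2UI schema.

```python
import json

# Hypothetical local widget catalog: maps a component type to a renderer.
# A real client would build native widgets; here we just produce strings.
CATALOG = {
    "text": lambda props: f"[Text] {props.get('value', '')}",
    "button": lambda props: f"[Button] {props.get('label', '')}",
    "date_picker": lambda props: f"[DatePicker] {props.get('label', '')}",
}


def render(message_json):
    """Render only components found in the local catalog; reject the rest."""
    spec = json.loads(message_json)
    rendered = []
    for component in spec.get("components", []):
        kind = component.get("type")
        factory = CATALOG.get(kind)
        if factory is None:
            # Unknown types are never executed or rendered, only reported.
            rendered.append(f"[Rejected] unknown component: {kind}")
            continue
        rendered.append(factory(component.get("props", {})))
    return rendered


# Hypothetical agent message (not the real A2UI wire format).
agent_message = json.dumps({
    "components": [
        {"type": "text", "props": {"value": "Book a table"}},
        {"type": "date_picker", "props": {"label": "Date"}},
        {"type": "script", "props": {"src": "evil.js"}},
        {"type": "button", "props": {"label": "Confirm"}},
    ]
})

ui = render(agent_message)
for line in ui:
    print(line)
```

The design choice worth noticing is that the agent describes what it wants and the host decides what gets built, so an untrusted agent cannot inject arbitrary code into the page.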

On the model safety front, Google released Gemma Scope 2, its deepest open interpretability play yet. It offers full coverage of Gemma 3 (270M–27B params) with sparse autoencoders, skip-layer transcoders, and Matryoshka refinements. Researchers can now trace multi-step reasoning, jailbreaks, hallucinations, and CoT fidelity. Google says building the suite meant training over a trillion total parameters and storing roughly 110 petabytes of data. This is the kind of transparency that actually matters.

Google’s Gemini AI still faces challenges. Attempts to generate helper scripts for Ubuntu ISO snapshots, including 26.04 “Resolute Raccoon” releases, produced semantically flawed code requiring human revision.

Despite these limitations, Google has expanded access to Gemini 3, now available to all free-tier users of Gemini CLI under Preview Features, and rolled out the Preferred Sources feature, improving user control over content provenance. Read more.

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!

*Paid ad. Brokerage services provided by Open to the Public Investing Inc, member FINRA & SIPC. Investing involves risk. Generated Assets is an interactive analysis tool by Public Advisors. Output is for informational purposes only and is not an investment recommendation or advice. See disclosures at public.com/disclosures/ga. Past performance does not guarantee future results, and investment values may rise or fall.