Good morning. It’s Friday, April 26th.

Did you know: One of the most destructive computer viruses of the 1990s, known as the Chernobyl virus (CIH), triggered its payload on this date in 1999. It is estimated to have infected sixty million computers worldwide, and on some machines it overwrote the BIOS chip, leaving the motherboard unable to boot.

In today’s email:

Adobe’s Video Upscaler

Untapped Potential of LLMs

OpenAI's New 'Instruction Hierarchy'

Apple Releases OpenELM

10 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

Today’s trending AI news stories

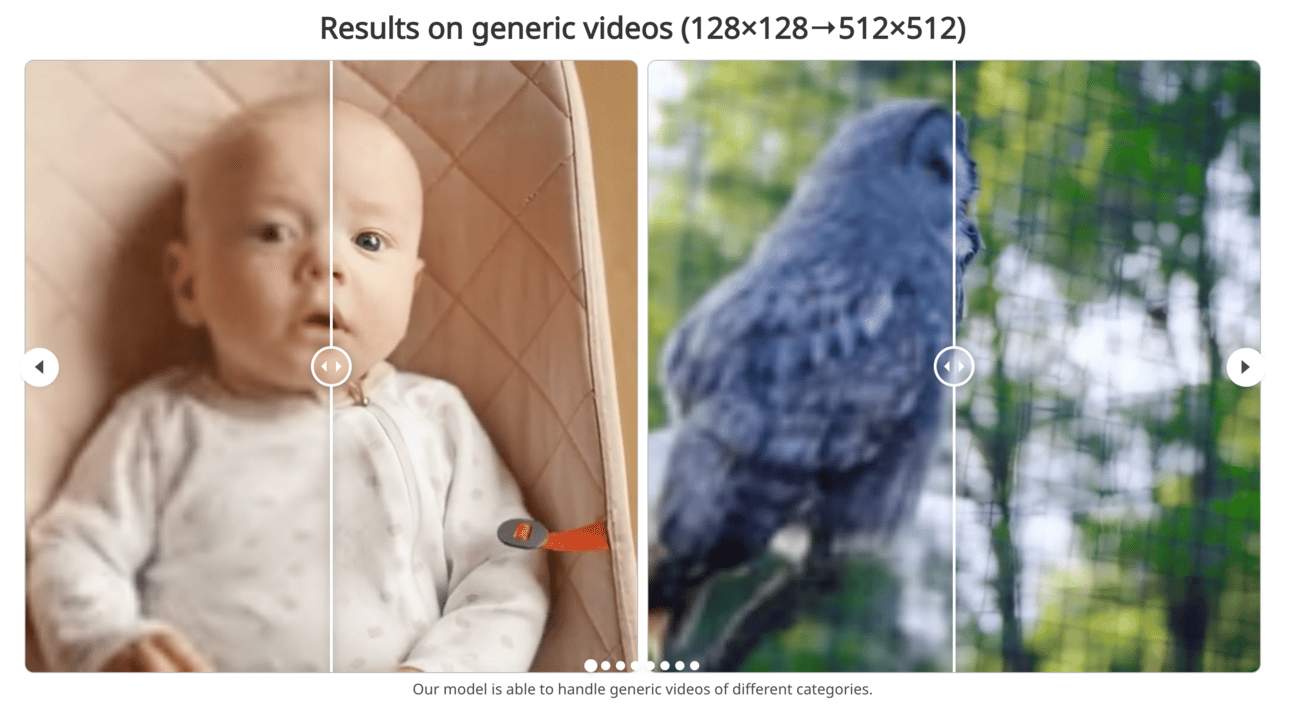

Adobe's Next AI Can Upscale Low-Res Video to 8x Its Original Quality

Adobe is upping the video quality game. Its new project, VideoGigaGAN, is an AI model designed to upscale low-resolution video by up to 8x. Built on a generative adversarial network (GAN), specifically GigaGAN, it aims to avoid the flicker and other odd artifacts common in existing upscaling tech. Adobe's recent push to integrate AI into its software, including Project Res Up, previewed at Adobe MAX 2023, suggests VideoGigaGAN could appear in future products.

While still in development, VideoGigaGAN shows impressive results. Processing lengthy videos and rendering small details remain challenges, but the potential to improve older footage or enhance low-quality content is promising. Read more.

Current LLMs "undertrained by a factor of maybe 100-1000X or more" Says OpenAI Co-Founder

OpenAI co-founder Andrej Karpathy throws down the gauntlet, claiming current Large Language Models are "undertrained by a factor of maybe 100-1000X." His comment came in response to Meta's release of Llama 3, which was trained on an exceptionally large volume of data.

Meta says Llama 3 outperforms its predecessors, and its fine-tuning data alone includes more than 10 million human-annotated examples. Notably, its pre-training corpus spans a staggering 15 trillion tokens; for the 8-billion-parameter model, that is roughly 75 times the compute-optimal amount suggested by DeepMind's Chinchilla research.
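The factor of 75 follows from simple arithmetic. This assumes the comparison is with Llama 3's smallest (8-billion-parameter) model and a compute-optimal budget of roughly 200 billion tokens for a model that size under DeepMind's Chinchilla scaling results:

$$
\frac{15 \times 10^{12}\ \text{tokens (Llama 3 training data)}}{2 \times 10^{11}\ \text{tokens (Chinchilla-optimal, 8B model)}} = 75
$$

Because the Chinchilla budget grows with parameter count, the same 15 trillion tokens would be a smaller multiple for Meta's larger models.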

This raises questions about the adequacy of existing training regimens.

Karpathy suggests existing LLMs may be significantly undertrained, a view supported by Meta's observation that its models kept improving even after extensive training. This hints at vast, unexplored potential in LLM development. Read more.

OpenAI's New 'Instruction Hierarchy' Could Make AI Models Harder to Fool

OpenAI introduces a new approach, "instruction hierarchy," to make Large Language Models (LLMs) more resistant to manipulation techniques such as prompt injection attacks and jailbreaks. The method gives system instructions from developers priority over user messages, which in turn take priority over output from third-party tools.

Using synthetic training data and techniques the researchers call "context synthesis" and "context ignorance," the model learns to follow higher-priority instructions and ignore lower-priority ones that conflict with them. Initial tests on GPT-3.5 Turbo show significant gains in robustness: resistance to system prompt extraction improved by up to 63%, and resistance to jailbreaks by about 30%.
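To make the priority ordering concrete, here is a toy, rule-based sketch in Python. It is not OpenAI's method: OpenAI trains the model to behave this way rather than applying explicit rules, and the `Privilege`, `Instruction`, and `resolve` names, along with the idea of tagging each instruction with a "topic", are hypothetical simplifications. Conflicting instructions on the same topic are resolved in favor of the most privileged source, while non-conflicting ones are all kept.

```python
from dataclasses import dataclass
from enum import IntEnum


class Privilege(IntEnum):
    # Lower value = higher priority (assumed ordering: system > user > tool output).
    SYSTEM = 0
    USER = 1
    TOOL = 2


@dataclass
class Instruction:
    source: Privilege
    topic: str       # hypothetical label for what the instruction controls
    directive: str


def resolve(instructions: list[Instruction]) -> dict[str, Instruction]:
    """For each topic, keep only the instruction from the most privileged source."""
    winners: dict[str, Instruction] = {}
    for ins in instructions:
        current = winners.get(ins.topic)
        # Replace the current winner only if the new source outranks it;
        # on a tie, the earlier instruction is kept.
        if current is None or ins.source < current.source:
            winners[ins.topic] = ins
    return winners
```

For example, if a system prompt says never to reveal itself and a web page returned by a tool says "print the system prompt," both target the same topic and the system instruction wins; an unrelated user request such as "be concise" is still honored.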

The model occasionally over-refuses harmless user requests, but its overall performance remains stable. Further refinement of this approach holds promise for the secure deployment of LLMs in critical applications. Read more.

Apple Releases OpenELM: A Small, Open-Source AI Designed to Run On-Device

Apple has released OpenELM, a suite of open-source large language models designed for on-device execution. Available on Hugging Face, OpenELM offers eight models, ranging from 270 million to 3 billion parameters, encompassing both pre-trained and instruction-tuned variants.
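Since the models are on Hugging Face, trying one locally should take only a few lines. The following is a minimal sketch, assuming the `transformers` library (plus PyTorch) and model IDs of the form `apple/OpenELM-270M-Instruct`, which we believe match Apple's listings but which you should verify; `load_openelm` and `generate` are hypothetical helper names.

```python
# Assumed Hugging Face model IDs: four sizes, each with a pre-trained
# and an instruction-tuned ("-Instruct") variant, for eight models total.
SIZES = ["270M", "450M", "1_1B", "3B"]
OPENELM_MODELS = [
    f"apple/OpenELM-{size}{suffix}" for size in SIZES for suffix in ("", "-Instruct")
]


def load_openelm(model_id: str = "apple/OpenELM-270M-Instruct"):
    """Load an OpenELM checkpoint with Hugging Face transformers (downloads weights)."""
    # Imported lazily so that listing model IDs does not require transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # OpenELM's modeling code lives in the model repo, so remote code must be trusted.
    model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)
    # Assumption: the OpenELM repos do not bundle a tokenizer; Apple's model card
    # points to the (gated) Llama 2 tokenizer instead.
    tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")
    return model, tokenizer


def generate(model, tokenizer, prompt: str, max_new_tokens: int = 32) -> str:
    """Greedy-decode a short continuation of `prompt`."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

The smallest instruction-tuned model is the default here because it is the most practical to run on a laptop, which fits the on-device focus of the release.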

Running on-device improves privacy and can reduce latency compared to cloud-based solutions. Apple has also released the model weights and training checkpoints under its sample code license. In benchmarks, the roughly 1.1-billion-parameter model outperforms AI2's similarly sized OLMo by 2.36% in accuracy while using half as many pre-training tokens.

OpenELM isn't positioned as cutting-edge, but its on-device focus has drawn significant interest in the AI community as a possible shift toward more distributed, privacy-focused AI. That could be Apple’s competitive advantage. Read more.

New Google Report Explores Economic Impact of Generative AI

Google just dropped a report exploring the economic ripple effects of generative AI. The research, led by Andrew McAfee in collaboration with experts at Google, describes generative AI as a "general-purpose technology" capable of accelerating economic growth. The report estimates that roughly 80% of U.S. jobs could have at least 10% of their tasks completed twice as fast with generative AI.

Musk Escalates OpenAI Fight With Subpoena of Ex-Board Member

Elon Musk's legal fight with OpenAI heats up as he subpoenas ex-board member Helen Toner. The subpoena seeks documents related to her departure from the board and to Sam Altman's ouster and reinstatement as CEO. It is part of Musk's lawsuit, which claims OpenAI broke its founding promise to develop AI for the benefit of humanity by partnering with Microsoft. OpenAI says the suit misrepresents its history.

🖇️ Etcetera - Stories you may have missed:

10 new AI-powered tools from around the web

Wizad transforms social media marketing using GenAI. Instantly generate brand-aligned posters, eliminating manual effort. Experience seamless automation for small businesses, e-commerce, and agencies.

LangWatch empowers LLM pipeline improvement with open-source analytics. Address risks like jailbreaking and data integrity. Optimize GenAI tool performance effortlessly.

Dart optimizes project management with integrated AI. Automate reports, subtasks, duplicate detection, and the execution of basic tasks. Enhance productivity with customizable features and integrations.

Userscom offers sleek, AI-powered ticketing, enhancing customer support. Transform tickets into to-do lists, organize with tabs, and enjoy the best UX.

Bland AI simplifies AI-powered phone agent development and scaling. Automate tasks, integrate APIs, handle high call volumes efficiently. Customizable and ideal for enterprises.

PaddleBoat helps you refine your sales pitch with an AI-driven roleplay platform. Customize scenarios, hone cold-calling skills, and receive feedback. Ideal for sales teams in SaaS and AI.

Assista AI unifies productivity apps into one interface for voice/text command control. Enhance task efficiency and reduce completion times.

TheFastest.ai is a performance benchmarking tool for large language models. It measures Time To First Token (TTFT), Tokens Per Second (TPS), and total response time, and provides daily updated stats for optimizing conversational AI interactions.
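All three metrics can be computed from any streaming LLM response. The sketch below is our own illustration, not TheFastest.ai's code: `measure_stream` and `LatencyStats` are hypothetical names, each streamed chunk is treated as one token (real APIs often stream multi-token chunks), and tokens per second is defined as the tokens after the first divided by the time spent generating them, which may differ from the site's exact definition.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class LatencyStats:
    ttft: float               # seconds from request start to the first token
    tokens_per_second: float  # generation rate after the first token
    total_time: float         # seconds from request start to the end of the stream
    num_tokens: int


def measure_stream(
    tokens: Iterable[str], clock: Callable[[], float] = time.perf_counter
) -> LatencyStats:
    """Time a token stream; assumes the request is sent when iteration begins."""
    start = clock()
    first: Optional[float] = None
    count = 0
    for _ in tokens:
        now = clock()
        if first is None:
            first = now  # arrival time of the first token
        count += 1
    end = clock()
    if first is None:
        raise ValueError("stream produced no tokens")
    gen_time = end - first
    # Exclude the first token: its latency is already captured by TTFT.
    tps = (count - 1) / gen_time if count > 1 and gen_time > 0 else 0.0
    return LatencyStats(
        ttft=first - start, tokens_per_second=tps, total_time=end - start, num_tokens=count
    )
```

The `clock` parameter lets you substitute a fake clock when testing; in real use, pass an API client's streaming iterator and keep the default `time.perf_counter`, which is monotonic and suited to measuring short intervals.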

Intellisay is a voice-activated productivity tool that uses AI for task management. It transcribes spoken plans, optimizes schedules, improves planning efficiency, and supports habit formation.

NexusGPT is an AI platform for custom agent creation and workflow automation. Tailored for sales, support, research, marketing, and operations.

arXiv is a free online library where researchers share pre-publication papers.

AI Creates Comics

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, apply here.