Good morning. It’s Monday, September 28th.

On this day in tech history: In 2011, DARPA’s “Mind’s Eye” program, an attempt to teach machines to recognize actions instead of just objects, held its first public demo on September 28. The early prototypes took raw video and produced semantic verb labels, using a mix of hand-crafted spatiotemporal features and probabilistic graphical models to translate motion primitives into a structured verb ontology.

In today’s email:

GPT-5 solves hard conjectures, ChatGPT reroutes sensitive, emotional prompts

Meta’s Next Big Bet is Robotics

Google AI Upgrades NotebookLM, Flow, and Labs

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

AI voice dictation that's actually intelligent

Typeless turns your raw, unfiltered voice into beautifully polished writing, in real time.

It works like magic, feels like cheating, and allows your thoughts to flow more freely than ever before.

Your voice is your strength. Typeless turns it into a superpower.

Today’s trending AI news stories

GPT-5 solves hard conjectures, ChatGPT reroutes sensitive, emotional prompts

OpenAI is quietly testing a new routing layer in ChatGPT that swaps users into stricter model variants when prompts show emotional, identity-related, or sensitive content. The switch happens at the single-message level and isn’t disclosed unless users ask directly. The safety filter, originally meant for acute distress scenarios, now also triggers on topics like model persona or user self-description.

Image: OpenAI

Some users see the move as overreach that blurs the line between harm prevention and speech control. The reroutes appear to involve lighter-weight variants, raising questions about transparency and whether even paying users are being silently shifted to less compute-heavy models.
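OpenAI has not published how the router decides, so what follows is only a minimal sketch, assuming a simple keyword check stands in for whatever classifier is actually used. It illustrates the property described above: each message is routed on its own, so one sensitive prompt can switch models mid-conversation and the next ordinary prompt switches back. The model names `default-model` and `safety-model` and the marker lists are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical marker lists; a real system would use a trained classifier.
SENSITIVE_MARKERS = {
    "acute_distress": ("hopeless", "can't go on"),
    "persona": ("are you conscious", "do you have feelings"),
    "self_description": ("i identify as", "my diagnosis"),
}


@dataclass
class RoutingDecision:
    model: str
    reason: Optional[str]  # which category triggered the switch, if any


def route_message(text: str,
                  default_model: str = "default-model",
                  safety_model: str = "safety-model") -> RoutingDecision:
    """Pick a model for a single message; earlier turns play no part."""
    lowered = text.lower()
    for category, markers in SENSITIVE_MARKERS.items():
        if any(marker in lowered for marker in markers):
            return RoutingDecision(safety_model, category)
    return RoutingDecision(default_model, None)


if __name__ == "__main__":
    conversation = [
        "Help me outline a blog post about sourdough.",
        "Do you have feelings about being shut down?",
        "Thanks, back to the outline.",
    ]
    for message in conversation:
        decision = route_message(message)
        print(decision.model, decision.reason)
```

Running it prints `default-model None`, then `safety-model persona`, then `default-model None`: in this sketch the switch lasts only for the flagged message, and nothing in the reply itself signals that it happened.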

Meanwhile, OpenAI’s historic week just redefined how investors think about the AI race. The company is making an audacious, high-risk infrastructure bet. Deedy Das of Menlo Ventures credits Sam Altman for spotting early how steep the scaling curve would be, saying his strength is “reading the exponential and planning for it.” Altman has now publicly committed to trillions in data center spending despite scarce grid availability, no positive cash flow, and reliance on debt, leases, and partner capital. To make the math work, OpenAI is weighing revenue plays like affiliate fees and ads. The business case depends on deep enterprise adoption and timely access to energy and compute.

Separately, early research tests are exposing GPT-5’s expanding problem-solving range. In the Gödel Test, researchers from Yale and UC Berkeley evaluated the model on five unsolved combinatorial optimization conjectures. It delivered correct or near-correct solutions to three problems that would typically take a strong PhD student a day or more to crack. In one case, it went further than expected, disproving the researchers’ conjecture and offering a tighter bound.

Its failures were equally revealing: it stalled when proofs required synthesizing results across papers, and on a harder conjecture it proposed the right algorithm but couldn’t complete the analysis. The model now shows competence in low-tier research reasoning with occasional flashes of originality but still struggles with cross-paper composition and deeper proof strategy.

Humanoid robots are Meta’s next ‘AR-size bet’

In an interview with Alex Heath at Meta HQ, CTO Andrew Bosworth revealed the company’s humanoid robotics project, Metabot, positioning it as a software-driven move to dominate the platform layer that other developers must plug into.

Andrew Bosworth. Image: Facebook

Bosworth says Zuckerberg greenlit the project earlier this year, and the spend will likely hit AR-scale levels. He says locomotion is largely solved across the industry and the real technical bottleneck is dexterous manipulation. Tasks like picking up a glass of water demand fine-grained force control, sensory feedback, and rapid motor coordination. Current systems can walk and flip but still crush or drop objects because they lack adaptive grasping. Meta wants to solve that layer and treat it the way it treated the VR stack: hardware-agnostic, platform-led, and licensable.
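Bosworth did not describe Meta’s control stack, but the problem he points to, applying enough force to hold an object without crushing it, is classically handled with a feedback loop on grip force. The following is a minimal sketch, assuming a tactile sensor that reports slip as a yes/no signal; the function names, gains, force limits, and the 3 N slip threshold in the simulation are all hypothetical.

```python
def adjust_grip(force: float, slipping: bool, *, step: float = 0.5,
                relax: float = 0.1, min_force: float = 0.5,
                max_force: float = 10.0) -> float:
    """Return the next grip force (hypothetical units: newtons)."""
    if slipping:
        force += step   # object is sliding: squeeze harder
    else:
        force -= relax  # grip is stable: ease off to avoid crushing
    # Clamp between a minimum holding force and the gripper's maximum.
    return max(min_force, min(max_force, force))


def simulate(required_force: float = 3.0, steps: int = 20) -> list:
    """Hold a hypothetical glass that slips whenever force < required_force."""
    force = 1.0
    history = []
    for _ in range(steps):
        slipping = force < required_force  # stand-in for a tactile slip sensor
        force = adjust_grip(force, slipping)
        history.append(round(force, 2))
    return history


if __name__ == "__main__":
    print(simulate())
```

In this sketch the force ramps up until slipping stops, then hovers just around the threshold instead of climbing to the maximum. That adaptive behavior, rather than a fixed squeeze, is what separates holding a glass from crushing it.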

Image: Meta

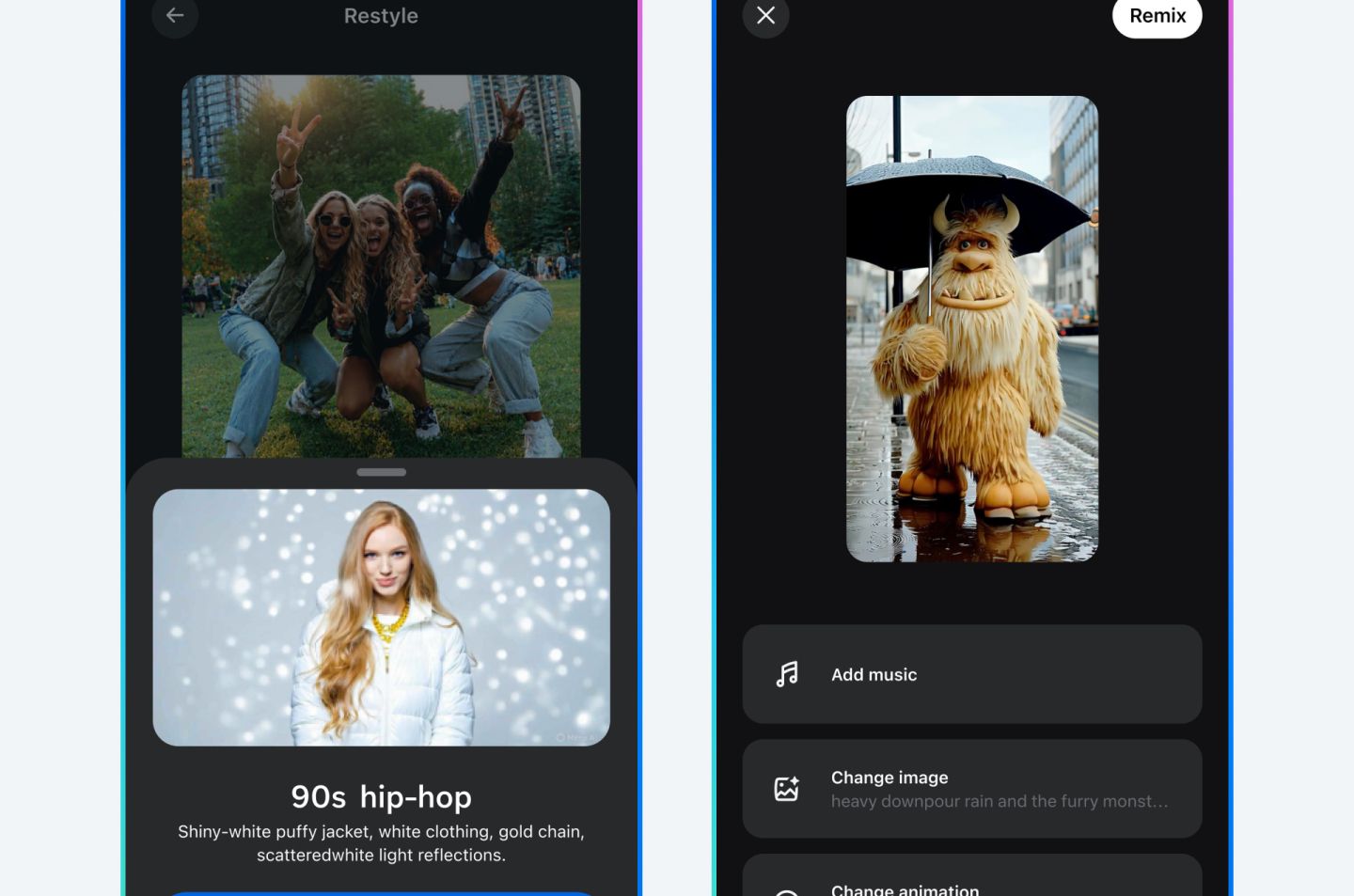

Meanwhile, Meta is repositioning AI video as participatory media rather than a novelty. Its new ‘Vibes’ feed channels AI-generated videos from creators and communities into a dedicated stream. Unlike the old Discover tab, which highlighted user prompts and chatbot interactions, Vibes acts as a distribution layer for short-form AI video sourced from people already experimenting with Meta’s generation tools. Each video appears with the prompt that produced it, making the feed both a showcase and a template library for remixing. It’s TikTok logic applied to generative media: seed content, encourage replication, and let the network handle distribution.

Google makes AI a core stack layer with Flow, NotebookLM, and Labs upgrades

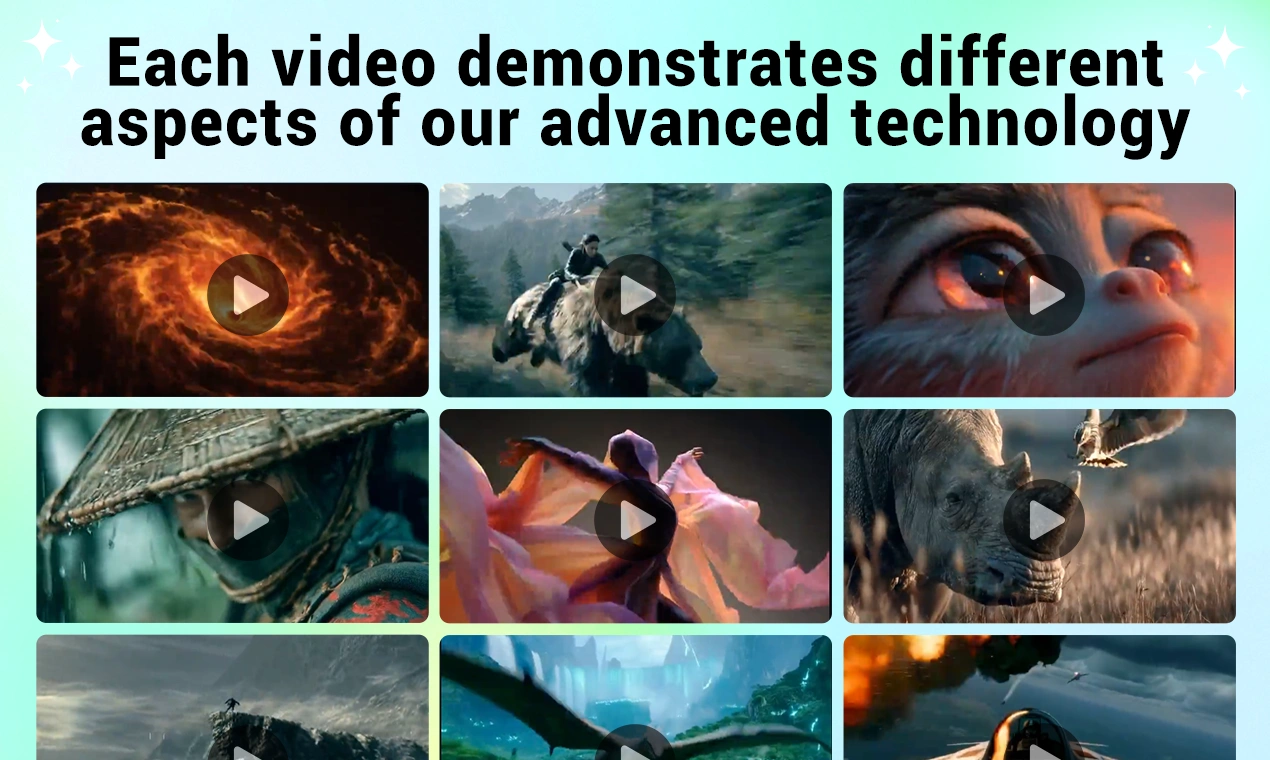

Google’s Flow, once a generative side project, is turning into a modular creation environment. The new Nano Banana editing layer lets users adjust outputs directly inside ingredients mode instead of exporting to external editors. A prompt expander now converts shorthand inputs into structured, stylistic instructions with presets and custom expansion logic, making it usable for animation workflows, storyboards, and sequential design. The only loose thread is model dependency: Flow still runs on Veo 2, and Google hasn’t said whether Veo 3 will slot in cleanly or alter generation behavior.
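Google hasn’t detailed how Flow’s prompt expander works. Here is a minimal sketch of the general idea, assuming expansion is a merge of a shorthand subject, a named preset, and user overrides acting as the “custom expansion logic”; the preset names, fields, and `expand_prompt` function are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical presets; Flow's real preset names and fields may differ.
PRESETS: Dict[str, Dict[str, str]] = {
    "storyboard": {
        "format": "numbered sequence of shots",
        "camera": "wide establishing shot, then medium shots",
        "style": "clean line-art panels",
    },
    "animation": {
        "format": "single continuous shot",
        "camera": "slow dolly-in",
        "style": "soft 3D animation, warm lighting",
    },
}


def expand_prompt(shorthand: str, preset: str,
                  overrides: Optional[Dict[str, str]] = None) -> str:
    """Turn a terse idea into a structured instruction block."""
    fields = dict(PRESETS[preset])   # copy so the shared preset stays unchanged
    fields.update(overrides or {})   # user-supplied fields win over the preset
    lines = [f"Subject: {shorthand.strip()}"]
    lines += [f"{key.capitalize()}: {value}" for key, value in fields.items()]
    return "\n".join(lines)


if __name__ == "__main__":
    print(expand_prompt("fox steals a lantern", "storyboard",
                        overrides={"style": "ink wash"}))
```

Running it prints a four-line block that starts with `Subject: fox steals a lantern` and ends with `Style: ink wash`: the override replaces the preset’s style while the preset’s format and camera fields carry through.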

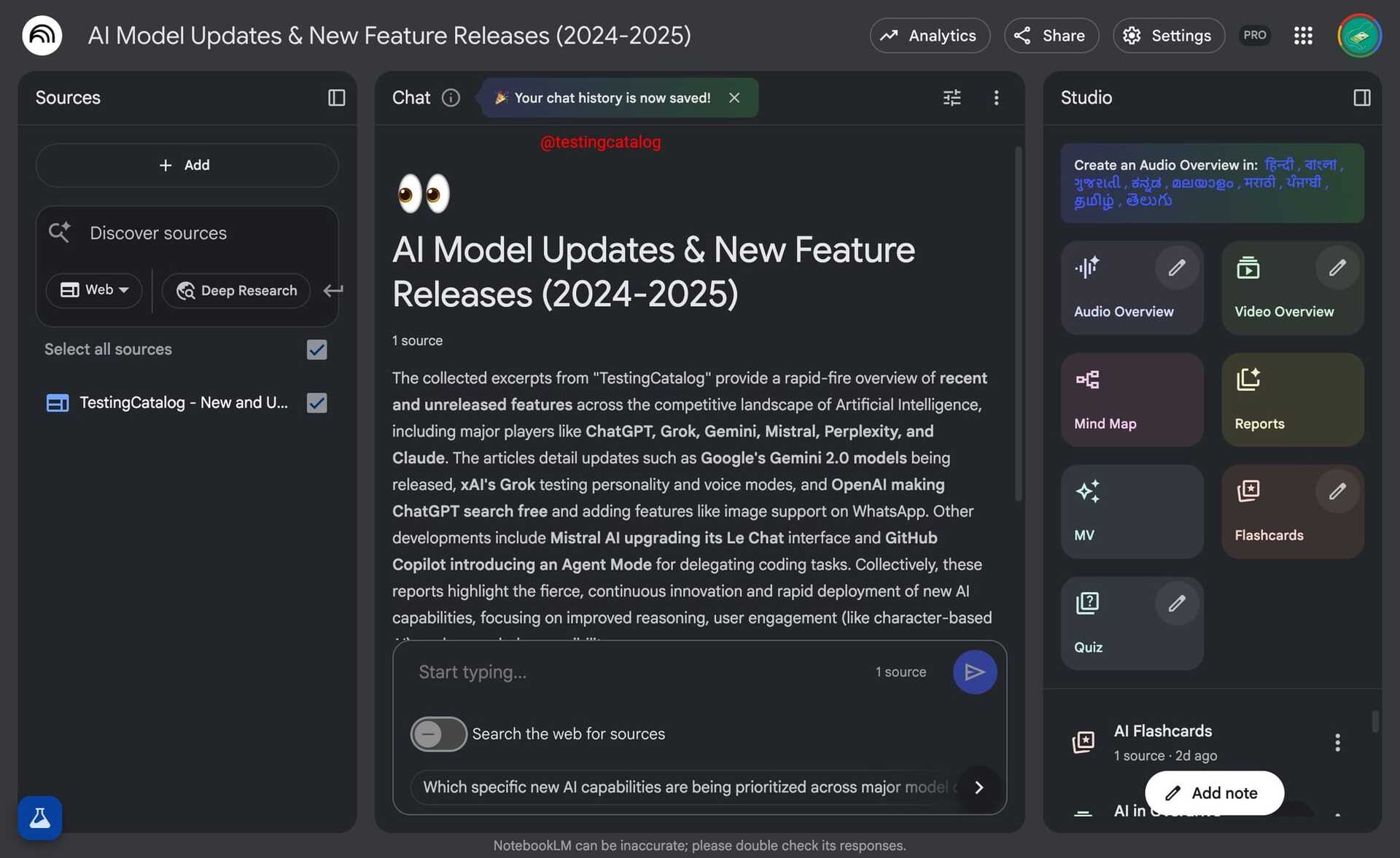

NotebookLM, on the other hand, is finally addressing its biggest usability flaw: volatile sessions. Persistent chat history has been spotted, still hidden, in the interface and appears poised for rollout. Saved transcripts will surface in the main chat view, enabling multi-session workflows for classrooms, research groups, and internal teams that rely on cumulative context. No word yet on searchability or permissions, but the move closes a gap with rivals already logging conversation state.

In YouTube Music, Google is quietly testing AI radio hosts through its Labs program. These synthetic presenters pull from metadata, fandom knowledge graphs, and genre presets to inject commentary, trivia, and context between tracks. Access is limited to a small US tester pool, similar to NotebookLM's invite-only features.

The blue section of the figure shows an experimental research pipeline that led to the discovery of DNA transfer among bacterial species. The orange section shows how AI rapidly reached the same conclusions. Image: José R. Penadés, Juraj Gottweis, et al.

Meanwhile, Google’s AI co-scientist just scored two lab-validated wins. At Stanford, it mined biomedical literature and surfaced three existing drugs for liver fibrosis; two worked in tissue tests, and one is headed toward clinical trials. At Imperial College London, it reverse-engineered how parasitic DNA jumps across bacterial species, correctly proposing that the fragments hijack viral tails from neighboring cells, a conclusion that had taken the human team years to reach.

Unlike generic LLMs, the system uses a multi-agent setup: agents generate, critique, and rank hypotheses, while a supervisor agent coordinates them and pulls in external tools and papers.
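The generate, critique, and rank cycle can be pictured as a loop run by a supervisor. This is a minimal sketch, not Google’s implementation: plain functions stand in for the LLM agents, a fixed list of mechanisms stands in for generated ideas, and a short list of evidence terms stands in for what a literature-search tool might return. All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical candidate mechanisms standing in for LLM-generated ideas.
CANDIDATE_MECHANISMS = [
    "plasmid conjugation",
    "phage tail hijacking",
    "natural transformation",
    "membrane vesicle transfer",
]


@dataclass
class Hypothesis:
    text: str
    score: int = 0
    critiques: List[str] = field(default_factory=list)


def generate(topic: str, seen: set, n: int) -> List[Hypothesis]:
    """Generator agent stand-in: propose up to n hypotheses not tried yet."""
    fresh = [m for m in CANDIDATE_MECHANISMS if m not in seen][:n]
    seen.update(fresh)
    return [Hypothesis(f"{topic} via {m}") for m in fresh]


def critique(h: Hypothesis, evidence: List[str]) -> None:
    """Critic agent stand-in: count evidence terms the hypothesis agrees with."""
    h.score = sum(term in h.text for term in evidence)
    h.critiques.append(f"matches {h.score} of {len(evidence)} evidence terms")


def supervisor(topic: str, evidence: List[str], rounds: int = 2,
               batch: int = 2, keep: int = 2) -> List[Hypothesis]:
    """Coordinate generate -> critique -> rank rounds, keeping the top hypotheses."""
    seen: set = set()
    pool: List[Hypothesis] = []
    for _ in range(rounds):
        new = generate(topic, seen, batch)
        for h in new:
            critique(h, evidence)
        # Rank survivors and newcomers together; only the best `keep` survive.
        pool = sorted(pool + new, key=lambda h: h.score, reverse=True)[:keep]
    return pool


if __name__ == "__main__":
    # `evidence` stands in for terms a literature-search tool might return.
    best = supervisor("DNA transfer between species", evidence=["phage", "tail"])
    print(best[0].text, best[0].score)
```

With the evidence terms `phage` and `tail`, the sketch ranks the phage-tail hypothesis first with a score of 2. A production system would replace each stand-in with model calls and a far richer ranking step; the point here is only the division of labor between agents.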

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!