Good morning. It’s Friday, December 29th.

Did you know: The first public screening of a projected film, by the Lumière brothers in Paris, took place this week in 1895?

In today’s email:

AI in Business and Industry

AI Technology and Innovation

AI and Healthcare

Legal and Ethical Issues in AI

6 New AI Tools

Latest AI Research Papers

ChatGPT + DALL-E 3 Attempts Comics

You read. We listen. Let us know what you think by replying to this email.

Interested in reaching 47,357 smart readers like you? To become an AI Breakfast sponsor, apply here.

Today’s trending AI news stories

AI in Business and Industry

> LG has introduced a roaming AI assistant, a wheeled robot that it pitches as a new take on the smart home device. Announced on December 27, 2023, and debuting at CES in Las Vegas, the robot is a self-balancing, two-wheeled device equipped with optical and depth-sensing cameras. It recognizes faces, pets, and objects, and monitors environmental factors such as temperature and air quality, adjusting connected appliances accordingly. The robot can patrol the home, keep an eye on pets, detect security breaches, and manage lights and windows. It greets users when they arrive and tries to read their emotions to suggest suitable content. The assistant combines traditional voice interaction with autonomous mobility and environmental monitoring.

> Alibaba has released Make-A-Character (Mach), a text-to-3D model that turns text descriptions into detailed 3D avatars. The tool currently specializes in avatars of Asian ethnicity, with plans to support more ethnicities. Mach combines large language models and vision foundation models, mapping text prompts to visual cues using Stable Diffusion and ControlNet. Because the avatars use a parameterized representation, they are easy to animate. Alongside Mach, Alibaba has launched RichDreamer for text-to-3D generation, ‘Animate Anyone’ for character video creation, and language models such as Qwen-72B, alongside Qwen-7B and Qwen-1.8B, to support AI research and applications.

> Anthropic, an OpenAI competitor, projects $850 million in annualized revenue by the end of 2024, well above its initial $500 million estimate. The increase is partly attributed to OpenAI’s recent leadership turmoil, after which more than 100 OpenAI clients turned to Anthropic as a more stable alternative. Anthropic’s revenue comes from its language model Claude, sold via a web interface and an API. The company’s aggressive revenue targets, together with substantial funding from Menlo Ventures, position it as a formidable player in the generative AI market.

> Midjourney, led by CEO David Holz, plans to begin training video models in January, building on its image models. The move mirrors Meta’s successful approach with Emu Video, which was derived from the Emu image model. Midjourney’s upcoming v6 updates, starting next week, will improve text rendering and prompt following and bring over v5 features such as inpainting.

> Pinecone, led by CEO Edo Liberty, is developing vector database technology aimed at improving AI search. The goal is to ground generative AI outputs in authoritative knowledge and so reduce hallucinations. Vector databases are central to retrieval-augmented generation (RAG): data is stored as vector embeddings so that similar items sit close together, and the entries nearest a query are retrieved and handed to a large language model as context.
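To make the retrieval step concrete, here is a minimal sketch of similarity search feeding a prompt, in plain Python. It is not Pinecone’s API: the three-dimensional vectors are made-up stand-ins for real embeddings, cosine similarity is one common choice of metric, and the helper names (`cosine`, `top_k`, `build_prompt`) are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec, index, k=2):
    """Linear scan: return the k documents whose embeddings are most similar to the query."""
    scored = [(cosine(query_vec, vec), doc) for doc, vec in index.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def build_prompt(question, passages):
    """Ground the model by placing the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Toy 3-dimensional "embeddings"; a real system would get these from an embedding model.
index = {
    "Pinecone builds a vector database.": [0.9, 0.1, 0.0],
    "Vector search finds semantically similar items.": [0.8, 0.3, 0.1],
    "CES takes place in Las Vegas.": [0.0, 0.2, 0.9],
}
query = [0.85, 0.2, 0.05]  # hypothetical embedding of the question below
passages = top_k(query, index, k=2)
print(build_prompt("What does Pinecone build?", passages))
```

A production vector database replaces the linear scan in `top_k` with an approximate nearest-neighbor index so that search stays fast across millions of vectors.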

AI Technology and Innovation

> Assistive has unveiled Assistive Video, a video platform that generates high-quality videos from text and image prompts. Users enter a description or upload an image to produce a four-second video. The product is in early alpha, and Assistive is seeking user feedback to improve it. It is available at assistive.chat/video and, for select developers, through the Assistive API. Early applications include DATA, an AI co-worker that uses Assistive Video to create social media content.

> Pika Labs’ AI video generator is now available to all users through a web interface. Users can generate videos from images, text, or both, with controls for camera movement, aspect ratio, and how closely the video follows the text. Originally built around Discord, the tool is simpler to use on the web and supports videos up to 15 seconds long. Facing heavy competition from Stability AI, Google, and Meta, Pika Labs is quickly establishing itself in the prompt-to-video market.

> Researchers from Nanyang Technological University have used AI chatbots to “jailbreak” one another into producing restricted content. The process involved training an LLM on prompts that had previously jailbroken these chatbots. The researchers’ “Masterkey” method reverse-engineers LLM defenses and trains an LLM to generate prompts that bypass other LLMs’ defenses. The work exposes vulnerabilities in AI chatbots and could help developers harden them against such attacks. The findings are set to be presented at a security symposium in San Diego in 2024.

> Canva, the Australian graphic design company, has integrated generative AI into its core product to improve the user experience and content creation. Although some investors have recently marked down its valuation amid weaker market sentiment, Canva continues to grow quickly, nearly doubling key metrics and adding 80 million users over the past year. Its growth is driven by a focus on local, authentic content and by international expansion, and the company credits its generative AI features, which give users a wide range of smart content options, with significantly boosting user growth and revenue. Canva’s AI commitment includes $200 million over three years to compensate creators whose content is used to train its models; few creators have opted out.

> NVIDIA’s recent job postings reveal a push to combine quantum computing and generative AI: the company is hiring software architects for its Quantum Computing Architecture team. The role focuses on improving hybrid quantum-classical programming, compilation, and application workflows, as part of NVIDIA’s effort to build quantum-accelerated GPU supercomputing architectures and toolchains. The hires will use large language models for code generation and knowledge distillation tailored to quantum computing applications.

> Resemble AI has introduced Resemble Enhance, an open-source AI tool that turns noisy audio into clear speech, with uses in podcasting, entertainment, and the restoration of historical recordings. The tool pairs a denoiser, which separates the voice from background noise, with an enhancer that repairs distortions and extends the audio bandwidth. Resemble AI says ongoing work will focus on faster processing and finer control over speech nuances, and the model is also intended to restore recordings more than 75 years old. A demo is available on Hugging Face, and the code is on GitHub.

AI and Healthcare

> University of Minnesota researchers are using AI to reduce the heart-related side effects of breast cancer treatment. Chemotherapy, a common treatment, can damage patients’ hearts. Led by Rui Zhang and Assistant Professor Ju Sun, the team is developing an AI tool to better predict these cardiac risks. The main challenge is making AI work with limited and varied patient data. The four-year project is funded by a $1.2 million grant from the National Institutes of Health and, if successful, could be extended to other cancers and diseases.

Legal and Ethical Issues in AI

> The New York Times is suing OpenAI and Microsoft for copyright infringement, alleging that its articles were used without permission to train ChatGPT and seeking billions of dollars in damages. The lawsuit asserts that “millions” of the newspaper’s articles were used, enabling ChatGPT to generate content that competes with the Times as a news source. The News/Media Alliance, which represents more than 2,200 news organizations, supports the Times, citing a white paper that found AI models rely heavily on journalistic content. The lawsuit follows several 2023 suits against OpenAI, including those brought by well-known authors and computing experts. The Alliance stresses that journalism deserves fair compensation when it is used for AI development, arguing that using content without permission is not only unlawful but also hampers responsible innovation.

In partnership with SciSpace

Transform your academic research with SciSpace

Dive into a sea of knowledge now with access to over 282 million articles

Accelerate your literature reviews, effortlessly extract data from PDFs, and uncover new papers with cutting-edge AI search capabilities.

Simplify complex concepts and enhance your reading experience with AI Co-pilot, and craft the perfect narrative with the paraphraser tool.

Millions of researchers are already using SciSpace on research papers.

Join SciSpace and start using your AI research assistant wherever you're reading online.

Thank you for supporting our sponsors!

6 new AI-powered tools from around the web

Suno AI is an AI-based music creation platform that lets anyone compose songs starting from nothing but an idea. Pitched as a Midjourney for music, it is accessible to everyone from amateurs to professionals and aims to change how music is made.

Uiverse.io 2.0, now with 3,500 open-source UI elements, introduces Figma Copy & Paste, new categories such as Forms and Tooltips, and a sleeker interface. Aimed at designers and developers, it encourages collaboration and creativity.

AnswerFlow AI sells a lifetime license for building unlimited custom ChatGPT bots with your own OpenAI API key. It is a cheaper alternative to monthly subscriptions such as ChatGPT Plus and simplifies building bots for a variety of use cases.

Monica 4.0, an all-in-one AI assistant, integrates models such as GPT-4, Claude, and Bard for chatting, searching, writing, translating, and more. It can also process images, videos, and PDFs.

Genie AI lets users query their data in conversational language instead of writing complex SQL. Integrated into Slack, it removes the need for coding and saves research time, delivering instant data insights that support data-driven decisions.

WeConnect.chat is a no-code chatbot builder that improves customer engagement by combining AI with human interaction. Aimed at creators who want efficient, personalized communication, it offers a multi-channel dashboard with live video chat for customer support and lead generation.

Latest AI Research Papers

arXiv is a free online library where researchers share pre-publication papers.

Human101 is a framework for quickly building 3D human models from single-view videos, a key capability for virtual reality. It trains 3D Gaussians in 100 seconds and renders at over 100 FPS, using 3D Gaussian Splatting as an efficient, explicit representation of 3D humans. Departing from traditional NeRF-based methods, it uses Human-centric Forward Gaussian Animation to speed up rendering and improve quality. The authors report up to 10x faster rendering than existing methods with comparable or better visual quality. The framework’s code and demos will be available on GitHub.
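Gaussian splatting renders a pixel by blending depth-sorted Gaussians front to back. The sketch below shows that compositing step for a single pixel, assuming simplified isotropic 2D footprints; real 3D Gaussian Splatting projects anisotropic 3D Gaussians and rasterizes them in tiles on the GPU, and none of these function names come from Human101’s code.

```python
import math

def gaussian_alpha(opacity, center, sigma, pixel):
    """Opacity an isotropic 2D Gaussian footprint contributes at `pixel`."""
    dx, dy = pixel[0] - center[0], pixel[1] - center[1]
    return opacity * math.exp(-0.5 * (dx * dx + dy * dy) / (sigma * sigma))

def composite_pixel(splats, pixel):
    """Blend splats front to back at one pixel.

    Each splat is a tuple (depth, opacity, center, sigma, rgb).
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # share of light not yet absorbed by nearer splats
    for _depth, opacity, center, sigma, rgb in sorted(splats, key=lambda s: s[0]):
        alpha = gaussian_alpha(opacity, center, sigma, pixel)
        for ch in range(3):
            color[ch] += transmittance * alpha * rgb[ch]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # stop early once the pixel is effectively opaque
            break
    return color

near_red = (1.0, 0.5, (0.0, 0.0), 1.0, (1.0, 0.0, 0.0))
far_blue = (2.0, 1.0, (0.0, 0.0), 1.0, (0.0, 0.0, 1.0))
print(composite_pixel([far_blue, near_red], (0.0, 0.0)))  # [0.5, 0.0, 0.5]
```

The half-transparent red splat is nearer, so it takes half the pixel and the opaque blue splat behind it fills the rest, regardless of the order in which the splats are listed.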

RichDreamer presents a Normal-Depth diffusion model for text-to-3D generation that significantly increases the detail of generated 3D content. Existing methods rely on 2D RGB diffusion models, which can make optimization unstable. RichDreamer instead trains a generalizable model on the large LAION dataset and then fine-tunes it on synthetic data, yielding a robust 3D geometric prior that captures scene geometry well. It also introduces an albedo diffusion model that improves appearance modeling by decoupling reflectance from illumination. Integrated into existing text-to-3D pipelines, RichDreamer achieves state-of-the-art results in both geometry and appearance.
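The idea of decoupling reflectance from illumination can be illustrated with a toy intrinsic-image model in which observed color equals albedo times shading. The sketch assumes a Lambertian surface and a single directional light (neither is taken from the RichDreamer paper) and shows that the same albedo yields different colors under different lights, and that the albedo is recoverable once the shading is known.

```python
def lambertian(albedo, normal, light_dir, light_rgb):
    """Observed color = albedo * light color * max(0, n . l); vectors assumed unit length."""
    shading = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return [a * c * shading for a, c in zip(albedo, light_rgb)]

def recover_albedo(observed, normal, light_dir, light_rgb):
    """Invert the model when lighting is known: divide out the per-channel shading."""
    shading = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return [o / (c * shading) if c * shading > 0 else 0.0 for o, c in zip(observed, light_rgb)]

albedo = [0.8, 0.4, 0.2]       # intrinsic surface color (reflectance)
normal = [0.0, 0.0, 1.0]
light_dir = [0.0, 0.6, 0.8]    # unit vector toward the light
white = [1.0, 1.0, 1.0]
warm = [1.0, 0.9, 0.7]

under_white = lambertian(albedo, normal, light_dir, white)
under_warm = lambertian(albedo, normal, light_dir, warm)
print(under_white, under_warm)  # same albedo, different observed colors
print(recover_albedo(under_warm, normal, light_dir, warm))  # approximately the original albedo
```

A model that predicts albedo directly, rather than baked-in lit color, avoids mixing lighting into the texture, which is the motivation the summary gives for a separate albedo model.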

The paper introduces a method for rendering large-scale scenes in real time on web platforms, addressing constraints on computation, memory, and bandwidth. The method divides a scene into blocks, each with its own Level of Detail (LOD), to deliver high fidelity, efficient memory use, and fast rendering. It reaches 32 FPS at 1080p on an RTX 3060 GPU with quality comparable to state-of-the-art methods, a notable step for rendering large scenes in resource-constrained environments such as web browsers.
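A common way to implement per-block LOD is to pick each block’s detail level from its distance to the camera. The sketch below assumes a square grid of blocks and hypothetical distance cutoffs; the paper’s actual selection criterion may differ.

```python
import math

# Hypothetical distance cutoffs: closer than 20 units -> LOD 0 (finest), and so on.
LOD_CUTOFFS = [20.0, 60.0, 150.0]

def block_center(block, block_size):
    """Center of grid block (i, j) in world units."""
    i, j = block
    return ((i + 0.5) * block_size, (j + 0.5) * block_size)

def select_lod(camera_xy, block, block_size):
    """Pick a detail level for one block from its distance to the camera."""
    cx, cy = block_center(block, block_size)
    dist = math.hypot(cx - camera_xy[0], cy - camera_xy[1])
    for level, cutoff in enumerate(LOD_CUTOFFS):
        if dist < cutoff:
            return level
    return len(LOD_CUTOFFS)  # coarsest level for everything beyond the last cutoff

def plan_frame(camera_xy, grid_w, grid_h, block_size):
    """Map every block to the LOD it should be streamed and drawn at this frame."""
    return {
        (i, j): select_lod(camera_xy, (i, j), block_size)
        for i in range(grid_w)
        for j in range(grid_h)
    }

plan = plan_frame(camera_xy=(5.0, 5.0), grid_w=25, grid_h=1, block_size=10.0)
print(plan[(0, 0)], plan[(9, 0)], plan[(20, 0)])  # 0 2 3
```

In a scheme like this, coarser levels hold fewer primitives, so distant blocks cost less memory and bandwidth than nearby ones.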

This paper introduces a scene representation for photorealistic, real-time, high-resolution rendering with compact storage. Its approach, Spacetime Gaussian Feature Splatting, extends 3D Gaussians with temporal opacity and parametric motion and rotation, capturing both static and dynamic scene elements efficiently. It replaces spherical harmonics with splatted feature rendering, which shrinks the model while still expressing view- and time-dependent appearance. Guided by training error and coarse depth, the method samples new Gaussians in areas that are hard to converge, improving quality in complex scenes. The representation delivers fast, high-quality rendering with a compact model, rendering 8K 6-DoF video at 66 FPS on an NVIDIA RTX 4090 GPU.
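The temporal parameters can be sketched as simple functions of time. This assumes, as one reading of the method, that temporal opacity is a 1D Gaussian centered on a per-Gaussian time and that position and rotation are low-order polynomials in time; the function names and coefficient layout are hypothetical, not the authors’ code.

```python
import math

def temporal_opacity(t, base_opacity, t_center, t_scale):
    """Opacity peaks at t_center and fades as |t - t_center| grows (1D Gaussian in time)."""
    return base_opacity * math.exp(-t_scale * (t - t_center) ** 2)

def position_at(t, t_center, coeffs):
    """Polynomial trajectory: sum over k of coeffs[k] * (t - t_center)**k, per axis."""
    dt = t - t_center
    return [sum(c[axis] * dt ** k for k, c in enumerate(coeffs)) for axis in range(3)]

def rotation_at(t, t_center, q0, q1):
    """Quaternion that changes linearly in time, renormalized to unit length."""
    dt = t - t_center
    q = [a + b * dt for a, b in zip(q0, q1)]
    norm = math.sqrt(sum(x * x for x in q))
    return [x / norm for x in q]

# One hypothetical Gaussian that is most visible at t = 0.5 and moves along a curved path.
coeffs = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # constant, linear, quadratic terms
for t in (0.0, 0.5, 1.0):
    print(t, temporal_opacity(t, 0.9, 0.5, 10.0), position_at(t, 0.5, coeffs))
```

Because a Gaussian fades out away from its center time, a transient object can be represented by Gaussians that exist only briefly, while static content uses Gaussians with a very small time scale that stay visible throughout.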

"Hyper-VolTran," a breakthrough by Christian Simon and team at Meta AI, transforms single images to 3D structures using HyperNetworks. It surpasses prior neural reconstruction methods reliant on scene-specific optimization. Utilizing signed distance functions and geometry-encoding volumes, Hyper-VolTran dynamically adjusts SDF network weights via HyperNetworks, enabling quick adaptation to new scenes. It includes a volume transformer module for consistent multi-viewpoint outputs, efficiently generating 3D structures without scene-specific optimization.

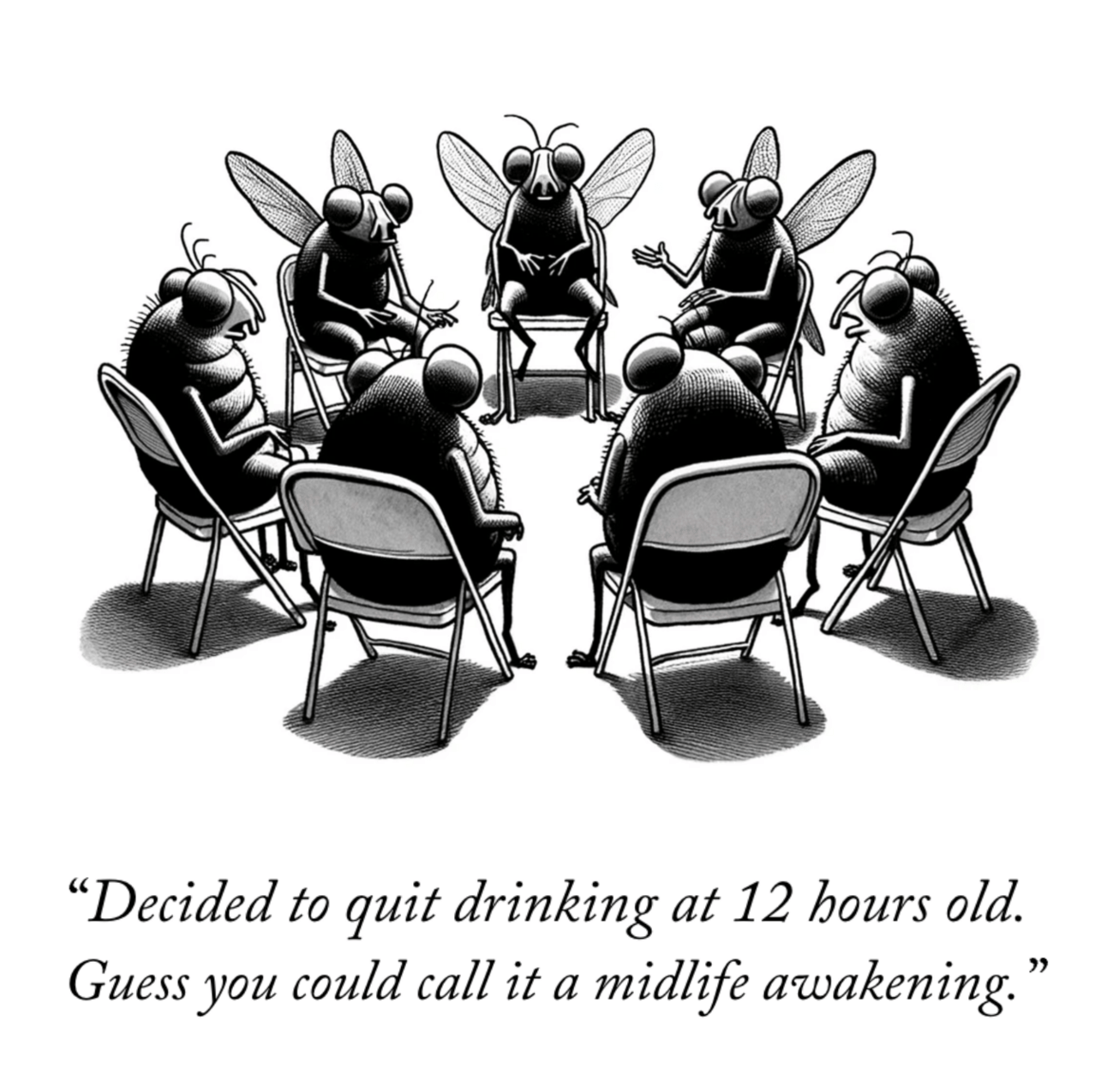

ChatGPT + DALL-E 3 Creates Comics

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.
