Good morning. It’s Wednesday, October 2nd.

Did you know: On this day in 1991, the AIM alliance between Apple, IBM, and Motorola was formed.

In today’s email:

OpenAI’s DevDay Announcements

o1 Model Can Handle “5-hour Tasks”

Liquid Foundation Models

Copilot AI Event

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

In partnership with

Writer RAG tool: build production-ready RAG apps in minutes

RAG in just a few lines of code? We’ve launched a predefined RAG tool on our developer platform, making it easy to bring your data into a Knowledge Graph and interact with it using AI. With a single API call, Writer LLMs will intelligently call the RAG tool to chat with your data.

Integrated into Writer’s full-stack platform, it eliminates the need for complex vendor RAG setups, making it quick to build scalable, highly accurate AI workflows just by passing a graph ID of your data as a parameter to your RAG tool.

Today’s trending AI news stories

OpenAI's DevDay 2024: 4 Major Updates

At DevDay 2024, OpenAI favored substance over spectacle, rolling out four updates to make AI more accessible and affordable for developers.

Here’s what’s new:

Realtime API: The newly introduced Realtime API gives developers access to six AI voices designed for integration into their own applications. Distinct from the voices in ChatGPT, they enable lifelike, real-time spoken conversations in contexts such as travel planning and phone-based ordering, at a cost of roughly $18 per hour. Developers are responsible for disclosing to users that the voices are AI-generated.
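To make the developer side more concrete, here is a minimal sketch of the JSON events a client might send to configure a Realtime session. It assumes the beta event names `session.update` and `response.create` and the preset voice name `alloy`; check these against OpenAI's current Realtime API reference. The WebSocket connection itself is not shown, the ordering-assistant instructions are hypothetical, and the cost helper simply applies the roughly $18-per-hour figure quoted above.

```python
import json

# Hypothetical instructions for a phone-ordering assistant. The first sentence
# covers the requirement to tell callers the voice is AI-generated.
INSTRUCTIONS = (
    "Tell the caller they are speaking with an AI voice. "
    "Then help them place a pizza order."
)


def session_update_event(voice: str, instructions: str) -> str:
    """Serialize a session.update client event (assumed beta event shape)."""
    event = {
        "type": "session.update",
        "session": {
            "voice": voice,                # one of the API's preset voices
            "instructions": instructions,  # guidance applied to the whole session
        },
    }
    return json.dumps(event)


def response_create_event() -> str:
    """Serialize a response.create event asking the model to reply."""
    return json.dumps({"type": "response.create"})


def estimated_cost_usd(minutes: float, dollars_per_hour: float = 18.0) -> float:
    """Rough conversation cost using the ~$18/hour figure cited in the text."""
    return minutes / 60 * dollars_per_hour


if __name__ == "__main__":
    # These strings would be sent over the Realtime WebSocket (not shown).
    print(session_update_event("alloy", INSTRUCTIONS))
    print(response_create_event())
    print(f"10-minute call: about ${estimated_cost_usd(10):.2f}")
```

Putting the disclosure into the session instructions is one simple way to handle the AI-voice requirement, though not the only one.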

Vision fine-tuning API: The Vision Fine-Tuning API lets developers fine-tune GPT-4o on images alongside text, improving the model's visual understanding for their specific tasks. Use cases include advanced visual search, object detection for autonomous vehicles, and medical image analysis, and OpenAI says improvements are possible with as few as 100 images. Developers retain full ownership of and control over their data, and automated safety evaluations check fine-tuned models for policy compliance.
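Fine-tuning data is typically uploaded as JSONL, one chat example per line. The sketch below assumes the chat format in which a user message mixes a `text` part and an `image_url` part; the road-sign task, the example URLs, and the helper names are all hypothetical.

```python
import json

SYSTEM_PROMPT = "You identify road signs in photos."  # hypothetical task


def vision_example(image_url: str, question: str, answer: str) -> dict:
    """Build one chat-format training example pairing an image with text."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(examples: list) -> str:
    """Serialize examples as JSONL: one JSON object per line."""
    return "".join(json.dumps(ex) + "\n" for ex in examples)


if __name__ == "__main__":
    # Hypothetical labeled images; a real dataset would have 100 or more.
    labeled = [
        ("https://example.com/signs/001.jpg", "Stop sign"),
        ("https://example.com/signs/002.jpg", "Yield sign"),
    ]
    examples = [vision_example(url, "What sign is this?", label) for url, label in labeled]
    print(to_jsonl(examples), end="")
```

Redirecting the output to a `.jsonl` file gives a training file in the one-example-per-line layout.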

Prompt Caching in the API: Prompt Caching lets developers cut costs and latency by reusing input tokens the model has recently processed. It is especially useful for code editing and multi-turn conversations, where much of each prompt repeats from one request to the next. Cached input tokens are billed at a 50% discount, and the feature applies automatically to the latest GPT-4o and GPT-4o mini versions for prompts longer than 1,024 tokens, while upholding OpenAI's privacy commitments.
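The billing effect can be sketched as a small cost function. It assumes cached input tokens cost half the normal input price, that prompts under the 1,024-token threshold get no caching, and a hypothetical per-million-token price supplied by the caller; the function and its parameters are illustrative, not part of OpenAI's SDK. Because caching matches on a prompt's prefix, placing static content such as system instructions first and variable content last tends to raise the cached share.

```python
CACHE_MIN_TOKENS = 1024    # assumed threshold below which nothing is cached
CACHED_PRICE_FACTOR = 0.5  # cached input tokens billed at 50% of the normal rate


def input_cost_usd(prompt_tokens: int, cached_tokens: int, price_per_million: float) -> float:
    """Estimate the input-token cost of one request with prompt caching.

    price_per_million is a hypothetical list price for uncached input tokens.
    """
    if prompt_tokens < CACHE_MIN_TOKENS:
        cached_tokens = 0  # short prompts never hit the cache
    cached_tokens = min(cached_tokens, prompt_tokens)
    uncached_tokens = prompt_tokens - cached_tokens
    price_per_token = price_per_million / 1_000_000
    return price_per_token * (uncached_tokens + cached_tokens * CACHED_PRICE_FACTOR)


if __name__ == "__main__":
    # A 5,000-token multi-turn prompt whose first 4,000 tokens repeat the last request.
    full = input_cost_usd(5000, 0, price_per_million=2.50)
    cached = input_cost_usd(5000, 4000, price_per_million=2.50)
    print(f"without cache: ${full:.5f}, with cache: ${cached:.5f}")
```

In this example caching cuts the input cost by 40%, since 4,000 of the 5,000 tokens are billed at half price.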

Model Distillation in the API: OpenAI's Model Distillation lets developers fine-tune cost-efficient models, such as GPT-4o mini, on outputs from more capable models like GPT-4o and o1-preview. The whole process runs on OpenAI's platform, removing the need to stitch together multiple tools. Key features include Stored Completions, which automatically capture input-output pairs to build datasets, and Evals, which measure model performance. Model Distillation is available now, with 2 million free training tokens per day for GPT-4o mini and 1 million for GPT-4o until October 31, after which standard fine-tuning pricing applies.
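The data side of distillation can be sketched in a few lines: take prompts answered by the larger "teacher" model, optionally drop answers an evaluation scored poorly, and write the rest as chat-format training examples for the smaller "student." The record keys (`prompt`, `completion`, `score`) and the threshold are hypothetical stand-ins for what Stored Completions and Evals handle inside OpenAI's platform.

```python
import json


def distillation_examples(records: list, min_score: float = 0.0) -> list:
    """Convert teacher-model outputs into student fine-tuning examples.

    Each record is a dict with hypothetical keys "prompt", "completion", and an
    optional "score" from an evaluation step. Records scoring below min_score
    are dropped; records without a score are kept.
    """
    examples = []
    for record in records:
        if record.get("score", min_score) < min_score:
            continue  # skip teacher answers the evaluation rated poorly
        examples.append(
            {
                "messages": [
                    {"role": "user", "content": record["prompt"]},
                    {"role": "assistant", "content": record["completion"]},
                ]
            }
        )
    return examples


if __name__ == "__main__":
    # Hypothetical outputs captured from a larger teacher model.
    teacher_outputs = [
        {"prompt": "Summarize: The meeting moved to 3pm.", "completion": "Meeting now at 3pm.", "score": 0.9},
        {"prompt": "Summarize: Lunch is canceled.", "completion": "Lunch was great!", "score": 0.2},
    ]
    for example in distillation_examples(teacher_outputs, min_score=0.5):
        print(json.dumps(example))
```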

Plus, a new prompt generator?

A leaked prompt reveals how OpenAI generates system prompts in the Playground, with the aim of making them clearer and more effective.

OpenAI also extended o1-preview API access to developers on usage tier 3 and raised its rate limits to match those of GPT-4o.

For more details, visit the official announcement or check the live blog.

OpenAI's Marketing Chief Says o1 Can Handle “5-hour tasks”

At HubSpot's Inbound event, Dane Vahey, OpenAI's Head of Strategic Marketing, underscored AI’s growing role in marketing's shifting landscape. He detailed a toolkit of AI-driven essentials—ranging from data analysis and automation to AI-supported research and content generation—urging marketers to harness these skills. AI, he noted, isn't just a tool but a "thinking partner," sharpening ideas through collaboration.

Vahey also spotlighted OpenAI’s latest model, o1, which he said can handle tasks of up to five hours, such as crafting intricate strategies. He framed this as a leap from GPT-3's quick-hit answers and GPT-4's roughly five-minute tasks. o1 excels at planning, though it is still prone to occasional errors. Even so, its potential underscores how indispensable AI is becoming for marketers facing increasingly complex demands. Read more.

Liquid AI debuts new LFM-based models that seem to outperform most traditional large language models

Liquid AI, a Boston-based MIT spinoff, has rolled out its Liquid Foundation Models (LFMs), a sleek new class of AI systems built for both efficiency and power. Unlike their more neuron-hungry large language model (LLM) cousins, LFMs rely on liquid neural networks, which aim to do more with fewer neurons by using richer mathematical models of how each neuron's state evolves.
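For readers curious what "liquid" means, the sketch below implements one update step of a liquid time-constant (LTC) layer, the formulation from the MIT research on liquid networks that preceded Liquid AI. It is not the LFM architecture, which this announcement does not detail. Each neuron follows dx/dt = -(1/τ + f)·x + f·A, where f is a gate over the current state and input, so the neuron's effective time constant shifts with its input. Using a sigmoid over a linear combination for f is one simple choice; all weights and sizes here are illustrative.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, inputs, W, U, b, tau, A, dt):
    """One fused-Euler step of a liquid time-constant (LTC) layer.

    Each neuron i follows dx_i/dt = -(1/tau_i + f_i) * x_i + f_i * A_i, where
    f_i = sigmoid(W[i].x + U[i].inputs + b[i]) depends on the state and input.
    The update treats the x-dependent decay implicitly, which helps stability.
    """
    new_x = []
    for i in range(len(x)):
        z = b[i]
        z += sum(W[i][j] * x[j] for j in range(len(x)))
        z += sum(U[i][k] * inputs[k] for k in range(len(inputs)))
        f = sigmoid(z)
        # Larger f shortens the effective time constant and pulls x_i toward A_i.
        new_x.append((x[i] + dt * f * A[i]) / (1.0 + dt * (1.0 / tau[i] + f)))
    return new_x


if __name__ == "__main__":
    # Illustrative 2-neuron layer with 1 input and all weights at zero.
    x = [0.0, 0.0]
    W = [[0.0, 0.0], [0.0, 0.0]]
    U = [[0.0], [0.0]]
    b = [0.0, 0.0]
    tau = [1.0, 1.0]
    A = [1.0, 1.0]
    for _ in range(200):
        x = ltc_step(x, [1.0], W, U, b, tau, A, dt=0.1)
    print(x)
```

With all weights at zero, f stays at 0.5 and each state settles at the fixed point f·A / (1/τ + f), which is 1/3 for τ = 1 and A = 1.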

The lineup includes LFM-1B, a 1.3-billion-parameter model for resource-constrained setups; LFM-3B, for edge devices like drones; and the heavyweight LFM-40B, with 40.3 billion parameters, built for cloud-heavy applications. In Liquid AI’s own early results, these models surpass Microsoft’s Phi-3.5 and Meta’s Llama on key benchmarks.
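As a rough sense of scale, the memory needed just to hold a model's weights is its parameter count times the bytes per parameter. The sketch below assumes 16-bit and 4-bit weights (precision choices not taken from the announcement) and ignores activations and other runtime overhead, so real requirements are higher. At 16 bits, LFM-1B's 1.3 billion parameters need about 2.6 GB, while LFM-40B's 40.3 billion need about 80.6 GB, which helps explain why one suits constrained hardware and the other the cloud.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Parameter counts from the announcement; precisions are assumptions.
    models = {"LFM-1B": 1.3e9, "LFM-40B": 40.3e9}
    precisions = {"16-bit": 2.0, "4-bit": 0.5}
    for name, params in models.items():
        for label, nbytes in precisions.items():
            print(f"{name} at {label}: ~{weight_memory_gb(params, nbytes):.1f} GB")
```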

The models are available through Liquid Playground and Lambda, and Liquid AI is optimizing them for hardware from Nvidia, AMD, and Apple. The company invites the community to push them to their limits, welcoming red-team tests to gauge their true potential. Read more.

Microsoft Copilot and Windows AI Event

At its New York City event, Microsoft introduced a revamped Copilot experience, featuring a card-based interface for mobile, web, and Windows.

Key updates include "Copilot Vision," which can see and interpret what users are looking at, and "Copilot Voice," which offers four distinct voices for spoken interaction. "Discover Cards" provide personalized content recommendations, while "Copilot Daily" reads aloud news and weather updates, supported by partnerships with major news outlets.

Integrating Copilot into Microsoft Edge lets users summarize web pages and translate text without compromising personal data. Experimental features in Copilot Labs, including "Think Deeper," use OpenAI's new o1 model and will be available across platforms.

Creative tools like Paint and Photos will gain advanced features, including Generative Fill and Generative Erase, which let users add or remove objects precisely, much like similar tools in Adobe Photoshop. The Photos app will also introduce a Super-Resolution feature that upscales images on-device by up to eight times their original resolution.

This comprehensive overhaul aims to make Copilot and the Windows ecosystem more responsive and user-centric, positioning Copilot as a true AI companion. To mark the occasion, Microsoft AI CEO Mustafa Suleyman published a memo describing what he calls a “technological paradigm shift” toward AI models that can understand what humans see and hear. Read more.

Quick Hits

Nvidia drops a bombshell with an open, massive AI model ready to rival GPT-4: NVLM-D-72B excels at interpreting complex visual inputs, analyzing memes, and solving math problems, and after multimodal training its text-only performance improved by an average of 4.3 points. The AI community has welcomed the release for its potential to accelerate research and development, though it also raises concerns about misuse and poses challenges to existing AI business models. Read more.

Meta Introduces Digital Twin Catalog from Reality Labs Research: Meta has launched the Digital Twin Catalog (DTC), featuring over 2,400 realistic 3D models with sub-millimeter accuracy. This dataset is designed to democratize access to digital twins—3D representations of physical objects—especially for common items like kitchen utensils. With advanced scanning tech, the DTC captures intricate details, leaving no pixel unturned. Read more.

Luma Unveils 10x Faster AI Video Generation, Reduces Creation Time to Under 20 Seconds: Luma AI has announced a breakthrough in video generation with its latest release, Dream Machine v1.6, which achieves 10x faster inference. Users can now produce full-quality video clips in under 20 seconds, with no need for "turbo" or "distilled" model variants. Read more.

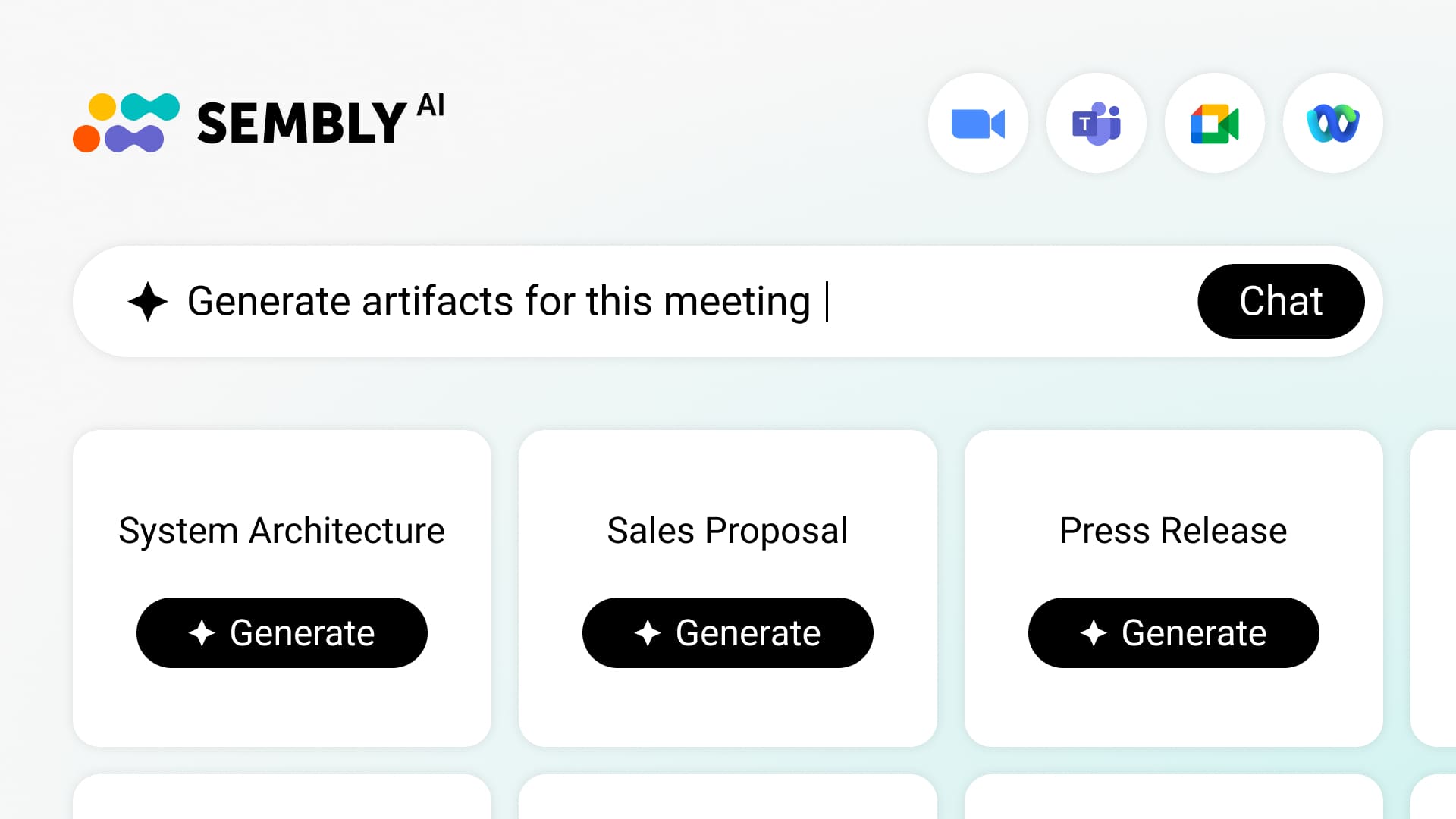

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email!