Good morning. It’s Friday, August 15th.

On this day in tech history: In 2000, MIT’s Cynthia Breazeal introduced Kismet, an expressive humanoid robot capable of recognizing and mimicking emotional cues, an early, influential example of social robotics and affective AI. It fused real-time gaze tracking, prosody-aware speech parsing, and an ethology-inspired behavior engine to produce surprisingly lifelike mood shifts.

In today’s email:

Q&A

OpenAI Invests in Finance Agents

Google Releases Mini-Model

Claude's Learning Mode

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

AI Breakfast Q&A

Every edition, we’ll answer real questions from AI Breakfast readers—practical, weird, and everything in between. Got something you’ve been wondering about AI? Looking for a specific tool? Hit “reply” to this email and ask! We may feature it in the next edition!

Pritty A: I head the risk management function and want to build workflows like compiling KRIs from different departments without any IT background. What’s the easiest tool? To do this without code, start with Airtable or Notion. Both let you collect KRIs via simple forms, store them in a central table, and create dashboards. Pair them with Zapier or Make to automatically pull in data from emails, spreadsheets, or forms so your reports build themselves. If you prefer a risk-specific platform, LogicGate Risk Cloud and Resolver are excellent (though pricier) options.

Ernie H: What is a "token"? What does it refer to in the world of AI? A token is just a small piece of text the model reads or writes. It might be a whole word, part of a word, or even punctuation. Models process text as a series of these tokens, and a limit like the “200k tokens” of Claude Sonnet 4 is the total number of tokens the model can handle at once, counting both your input and its reply.
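
For readers who like to see it in code, here is a toy illustration of the idea. It is only a sketch: the `toy_tokenize` and `fits_in_context` helpers are made up for this example, and splitting on words and punctuation is an assumption that stands in for the learned subword vocabularies real models use, so actual token counts will differ.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation pieces (a stand-in for a real tokenizer)."""
    # \w+ grabs runs of letters/digits; [^\w\s] grabs each punctuation mark on its own.
    return re.findall(r"\w+|[^\w\s]", text)


def fits_in_context(text: str, limit: int = 200_000) -> bool:
    """Check whether the toy token count stays within a context limit."""
    return len(toy_tokenize(text)) <= limit


tokens = toy_tokenize("What is a token?")
print(tokens)       # ['What', 'is', 'a', 'token', '?']
print(len(tokens))  # 5
```

A real tokenizer would often break a longer word into several pieces, which is why token counts usually run higher than word counts.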

A.S. Ganesh: When I come across a job posting on LinkedIn or a company's careers page, I want AI to customize my resume to the job description and apply on my behalf. Can you explain how I can achieve this? You can do this by combining a few automation tools. Use Zapier or Make to capture the job description from LinkedIn or a company’s careers page. Then feed that text, along with your current resume, into whatever AI model you like (ChatGPT, Grok, etc.) and ask it to rewrite your resume so it highlights your most relevant skills, uses keywords from the job description, and matches the role’s tone. Once the resume is customized, automation services such as LoopCV, LazyApply, or Simplify.jobs can upload it and auto-fill applications. Automation tools like UiPath or Robocorp can also handle LinkedIn submissions specifically. Good luck!

Reply to this email with your question and it may get featured!

Today’s trending AI news stories

OpenAI juggles cost-cuts, new monetization plays, and $14M bet on finance agents

OpenAI’s Startup Fund just injected $14M into Endex, a Thiel Fellow–founded startup integrating an AI agent directly into Excel. Powered by OpenAI’s reasoning stack, Endex can grind through multi-hour financial workflows: building cash flow models, parsing SEC filings, converting PDFs to structured tables, all GDPR- and SOC 2–compliant. It’s aimed squarely at finance teams that need speed without losing audit trails, potentially stepping on Microsoft’s turf.

Meanwhile, The Register calls GPT-5 “a cost cutting exercise,” saying the model is tuned for efficiency over brute force. Instead of one giant model, it routes simple queries to a “tiny” LLM and complex ones to a heavyweight core. MXFP4 quantization slashes compute and memory by up to 75%, and reasoning is auto-throttled to cut token spend. Gains are modest aside from an 80% drop in hallucinations, though GPT-5 showcased efficiency in style, completing Pokémon Red in just 6,470 steps versus o3’s 18,184.
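
Where might a figure like “up to 75%” come from? A rough back-of-the-envelope sketch, under assumptions that are ours and not The Register’s: the baseline stores each weight in 16 bits, and MXFP4 stores 4-bit values that share one 8-bit scale per block of 32. The helper names are hypothetical.

```python
def bits_per_weight(element_bits: int, scale_bits: int, block_size: int) -> float:
    """Average storage per weight when a block of weights shares one scale factor."""
    return element_bits + scale_bits / block_size


def memory_saving(baseline_bits: float, quantized_bits: float) -> float:
    """Fraction of memory saved relative to the baseline format."""
    return 1 - quantized_bits / baseline_bits


# Assumed layout: 4-bit elements, one shared 8-bit scale per 32 elements.
mxfp4_bits = bits_per_weight(element_bits=4, scale_bits=8, block_size=32)

print(mxfp4_bits)                     # 4.25
print(memory_saving(16, 4))           # 0.75 (ignoring the shared scales)
print(memory_saving(16, mxfp4_bits))  # 0.734375 (counting the shared scales)
```

On those assumptions the ceiling is 75%, and the shared scales pull the real saving slightly below it, which fits the “up to” wording.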

OpenAI remains cautious on monetization strategy. ChatGPT head Nick Turley says advertising is “not ruled out” but would require careful integration, while CEO Sam Altman frames it as a “last resort.” OpenAI is also piloting “Commerce in ChatGPT,” enabling purchases directly via chatbot recommendations without allowing affiliate revenue to skew results. Read more.

Google releases pint-size open-weight AI model, adds personalization and memory to Gemini

Google released Gemma 3 270M, an ultra-compact open model with just 270 million parameters, small enough for efficient on-device inference yet scoring 51.2% on the IFEval benchmark, outperforming several larger lightweight models. A Pixel 9 Pro test showed 25 multi-turn conversations consumed only 0.75% battery, making it a candidate for privacy-preserving, offline AI. Weights are freely available on Hugging Face, Kaggle, and Vertex AI, with licensing terms blocking harmful uses.

Gemini, on the other hand, gains new personalization features, including a default memory mode that stores prior conversation context, “Temporary Chats” for privacy, and a “Keep Activity” setting, arriving September 2, that lets users opt out of having chats, uploads, and other data sampled for model training.

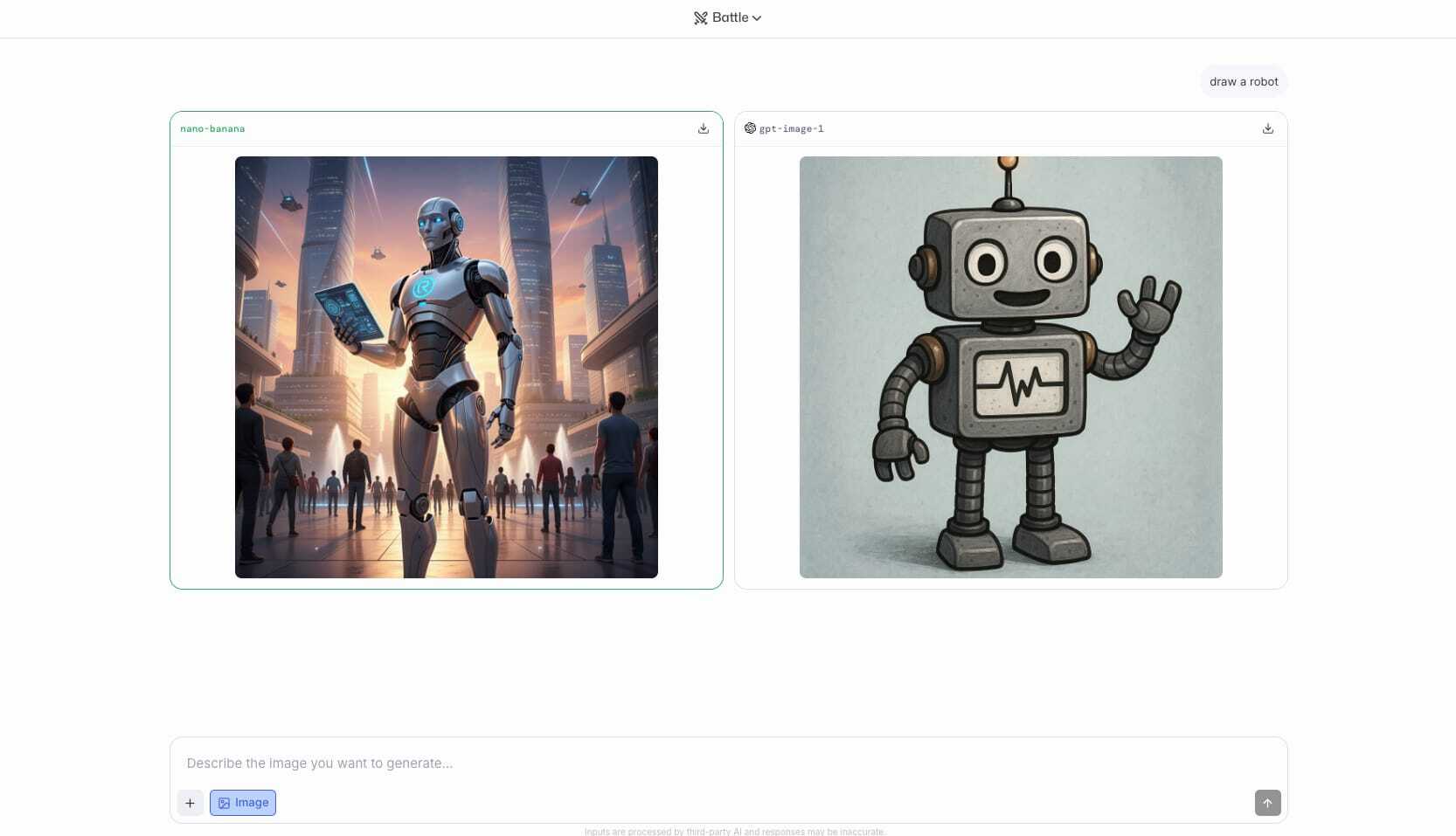

left: nano-banana, right: gpt-image-1 | Image: Testing Catalog

Ahead of the August 20 Pixel event, Google is also preparing a major Gemini image generation upgrade, likely debuting as “GEMPIX.” Leaks also reveal “Nano Banana,” a lightweight image model found in LM Arena, suggesting on-device optimization. Another discovery, “Magic View” in NotebookLM, shows a pixel-themed animation, hinting at branding synergy.

Consumer-facing tools are also expanding with Flight Deals, now in beta in the US, Canada, and India, which parses natural-language trip requests to surface unconventional but cost-effective routes, competing with Skyscanner-style aggregators. Read more.

Anthropic brings Claude's learning mode to regular users and devs

Claude’s new Learning Mode now guides users with a Socratic, hands-on approach. In Claude Code, Explanatory mode breaks down reasoning, trade-offs, and decisions, while Learning mode pauses for developers to complete #TODO code segments. With a 1M-token context window, Claude can keep far more of a complex project in view at once, supporting iterative problem-solving and collaborative coding.

Anthropic also recently acqui-hired Humanloop’s co-founders and core engineering team, gaining expertise in LLM prompt management, evaluation, and observability for enterprise clients. Though the IP itself wasn’t acquired, the team equips Anthropic to enforce safety, bias mitigation, and compliance at scale.

Combined with extended context windows and agentic capabilities, this gives enterprises fine-grained control over AI behavior, performance, and monitoring. Developers can now create custom learning and evaluation pipelines, merging interactive guidance with enterprise-grade control, letting users and businesses co-evolve with the model. Read more.

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!