Good morning. It’s Wednesday, July 23rd.

On this day in tech history: In 1972, Terry Winograd released SHRDLU, an AI that could understand and manipulate a virtual block world via typed language. A landmark in symbolic AI, it combined a semantic parser, planner, and reasoning system to maintain a dynamic model of its environment and reason about spatial relationships, thereby bridging language, vision, and planning.

In today’s email:

OpenAI pivots to modular GPT‑5 as Stargate project stalls

DeepMind’s Gemini clinches AI’s first math gold as controversy clouds celebrations

xAI’s Grok 4 triples iOS revenue; next-gen Colossus 2 supercluster nears launch

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

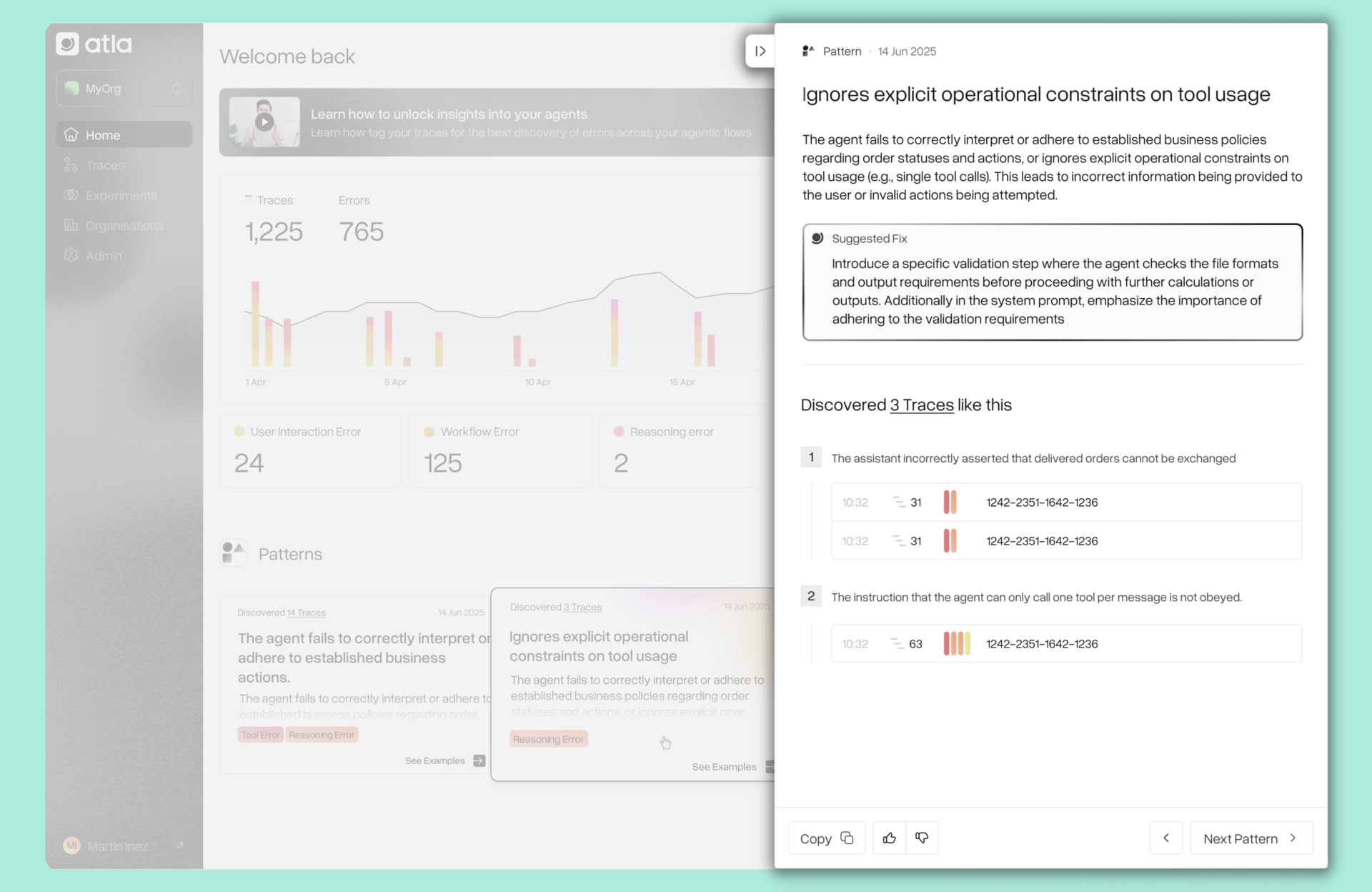

In partnership with Atla

Your agent failed. Here’s why.

Debugging agents usually means reading through traces and trying to piece together what went wrong. It’s slow, manual, and often leaves teams unsure of the actual issues.

Atla helps by automatically detecting where and why failures happen, summarizing traces so you don’t have to dig through every step, and showing error patterns across runs. The platform also provides actionable suggestions for improvement, and allows teams to test prompt changes and compare results side-by-side.

Atla is built upon proprietary research on evaluating agents, and is designed to replace vibe checks with grounded analysis.

The platform is open for early access: try it for free and improve your agents.

Thank you for supporting our sponsors!

Today’s trending AI news stories

OpenAI pivots to modular GPT‑5 as Stargate project stalls

OpenAI is reportedly close to launching a dynamic “router” for ChatGPT that would automatically select the best AI model for each user prompt. Leaked posts from OpenAI insiders hint the router won’t kill manual control but will quietly switch to stronger models for complex or critical queries, like a real-time triage between reasoning, creative, and tool‑using subsystems. Rumors suggest this is part of a move toward GPT‑5 as a modular, task-aware system rather than a single monolithic model.
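For readers who want a concrete picture of what a prompt router does, here is a minimal sketch. It is not OpenAI's design: the model names, keyword lists, and length threshold are all hypothetical, chosen only to show the idea of a default fast model, escalation for complex or tool-heavy prompts, and a manual override that always wins.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model tiers; these names are illustrative, not OpenAI's.
FAST_MODEL = "fast-general"
REASONING_MODEL = "deep-reasoning"
TOOL_MODEL = "tool-using"

# Assumed keyword heuristics; a real router would likely use a learned classifier.
REASONING_HINTS = ("prove", "step by step", "debug", "derive")
TOOL_HINTS = ("search the web", "run this code", "look up")


@dataclass
class Route:
    model: str
    reason: str


def route_prompt(prompt: str, manual_choice: Optional[str] = None) -> Route:
    """Pick a model for a prompt; an explicit user choice always wins."""
    if manual_choice is not None:
        return Route(manual_choice, "manual override")
    text = prompt.lower()
    if any(hint in text for hint in TOOL_HINTS):
        return Route(TOOL_MODEL, "tool keyword")
    # Escalate on reasoning keywords or very long prompts (threshold is arbitrary).
    if any(hint in text for hint in REASONING_HINTS) or len(text.split()) > 200:
        return Route(REASONING_MODEL, "complex query")
    return Route(FAST_MODEL, "default")
```

Calling `route_prompt("Prove that sqrt(2) is irrational")` escalates to the reasoning tier, while passing `manual_choice` bypasses the heuristics entirely, mirroring the rumored behavior of keeping manual control intact.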

OpenAI is set to pay Oracle $30B/year for a massive 4.5GW Stargate data center. Image: Sam Altman on 𝕏

On the infrastructure side, SoftBank and OpenAI’s ambitious $500 billion Stargate project to build massive AI data centers has stalled just six months after launch. After disagreements over land use and strategy, plans have scaled back to a single Ohio facility by year’s end. SoftBank raised $3 billion, but OpenAI has pivoted, securing a $30 billion deal with Oracle to build a Texas data center housing 400,000 Nvidia GB200 chips and partnering separately with CoreWeave.

ChatGPT is now fielding over 2.5 billion prompts daily, adding up to nearly a trillion a year. Of those, about 330 million requests come from U.S. users alone, OpenAI confirmed to The Verge. The firm's first economic report, led by Chief Economist Ronnie Chatterji, shows ChatGPT driving sharp productivity gains. Despite this explosive growth, JPMorgan cautions OpenAI’s moat is “increasingly fragile,” citing rising litigation, price competition, and dependence on consumer subscriptions. Read more

DeepMind’s Gemini clinches AI’s first math gold as controversy clouds celebrations

Google DeepMind’s Gemini has set a new milestone in AI reasoning, earning a gold medal–level score at the 2025 International Mathematical Olympiad by solving five of six complex problems using only natural language.

This historic feat makes Gemini the first AI to be officially graded at gold level, outperforming last year’s silver-level result from AlphaProof and AlphaGeometry 2. Its success stems from a “parallel thinking” architecture that explores multiple solution paths simultaneously, combined with advanced reinforcement learning and curated mathematical proof data.

Controversy arose when rival OpenAI rushed to announce similar results ahead of official IMO verification, stirring criticism for putting hype ahead of the process.

Alongside this, Google released Gemini 2.5 Flash-Lite, its fastest, most cost-efficient model yet, designed for scaled production use with a large context window and native reasoning tools. Early adopters have reported significant latency reductions and energy savings.

Complementing this, Gemini 2.5 now supports conversational image segmentation: users can identify objects or abstract concepts like “damage” or “clutter” using natural language, even reading on-screen text. Results return as JSON with pixel masks and coordinates, all accessible through the Gemini API and Google AI Studio.
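To make the JSON output concrete, here is a small sketch of how a client might convert returned coordinates into pixel positions. The field names `label` and `box_2d`, the `[y0, x0, y1, x1]` ordering, and the 0 to 1000 normalization are assumptions for illustration; check the Gemini API documentation for the actual schema. Mask decoding is left out.

```python
import json


def boxes_to_pixels(payload: str, width: int, height: int) -> list:
    """Convert assumed 0-1000 normalized boxes into pixel coordinates."""
    results = []
    for item in json.loads(payload):
        # Assumed ordering: top, left, bottom, right.
        y0, x0, y1, x1 = item["box_2d"]
        results.append({
            "label": item["label"],
            "left": round(x0 * width / 1000),
            "top": round(y0 * height / 1000),
            "right": round(x1 * width / 1000),
            "bottom": round(y1 * height / 1000),
        })
    return results


# Hand-written sample payload, not real API output.
sample = '[{"label": "damage", "box_2d": [100, 200, 500, 600]}]'
boxes = boxes_to_pixels(sample, width=1000, height=500)
```

Normalized coordinates let the same response apply to any display size; the client only needs the image's width and height to place each box.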


These wins also reignite an old but practical question: is intelligence grounded in rigid symbols, or do symbols simply bend to thought? By reaching gold‑level IMO scores through pure natural language reasoning, i.e., no formal solvers, DeepMind and OpenAI’s models challenge the idea that true understanding must be symbolic.

DeepMind’s Andrew Lampinen frames symbols as scaffolding for intuition, echoing Wittgenstein: meaning emerges through use, not structure alone. Hybrid neuro‑symbolic systems like AlphaProof once led this field, but these results show deep learning alone can now rival human mathematical reasoning. Read more.

xAI’s Grok 4 triples iOS revenue; next-gen Colossus 2 supercluster nears launch

Elon Musk’s xAI is scaling at an aggressive pace on both infrastructure and product fronts. The company is finalizing Colossus 2, a massive new supercomputing cluster slated to deploy over 550,000 NVIDIA GB200 and GB300 GPUs for AI training within weeks. This follows Colossus 1, which already runs 230,000 GPUs, including 30,000 GB200s, to train the Grok family of models, while inference workloads remain on external cloud providers. NVIDIA CEO Jensen Huang has described xAI’s build-out speed as unmatched in the industry.

xAI saw a huge revenue spike after launching its new model, Grok 4. Released on July 9, Grok 4 pushed daily iOS revenue from $99,000 to $419,000 by July 11, a jump of 325%, according to Appfigures. Downloads also surged 279% to nearly 197,000. The momentum continued for days before dipping slightly. A week later, xAI added raunchy AI companions for “Super Grok” subscribers at $30/month. While these drove downloads up 40% to 171,000, revenue only rose by 9%, showing less impact than the model launch itself.

Image: Appfigures

Despite the steep price, well above many competitors, demand was strong enough to briefly lift Grok to No. 3 overall in the U.S. App Store. Read more.

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on 𝕏!