Good morning. It’s Monday, December 18th.

In Partnership with SciSpace

Did you know: 25 years ago today, Mario Party was released in Japan?

In today’s email:

AI Technology and Innovation

AI in Business and Finance

AI in Social, Environmental, and Global Issues

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

Interested in reaching 46,994 smart readers like you? To become an AI Breakfast sponsor, apply here.

Today’s trending AI news stories

AI Technology and Innovation

> Mixtral 8x7B and Gemini Pro, two advanced language models comparable to GPT-3.5, are now available for testing in LMSYS’s Chatbot Arena, whose leaderboard is hosted on Hugging Face. Mixtral 8x7B, an open-source and potentially more cost-effective option, currently ranks slightly ahead of Gemini Pro and on par with GPT-3.5 Turbo. Both can be used to answer questions or complete tasks, and their close scores show how quickly the field of AI-powered chatbots is evolving.
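
Chatbot Arena builds its leaderboard from pairwise human votes between two anonymous models, and has used Elo-style ratings to rank them. To illustrate how two models can end up "on par", here is a minimal Elo sketch. The K-factor of 4, the starting rating of 1000 and the votes are assumptions for illustration. They are not the Arena's actual data or its exact method.

```python
# Minimal Elo sketch. Assumptions: standard Elo, K=4, start at 1000.
# The Arena's exact method and parameters may differ; the votes are made up.
def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A beats model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def rate(votes: list[tuple[str, str]], initial: float = 1000.0, k: float = 4.0) -> dict[str, float]:
    """Replay (winner, loser) votes in order and return the final ratings."""
    ratings: dict[str, float] = {}
    for winner, loser in votes:
        r_w = ratings.setdefault(winner, initial)
        r_l = ratings.setdefault(loser, initial)
        e_w = expected_score(r_w, r_l)  # winner's expected score before this vote
        ratings[winner] = r_w + k * (1.0 - e_w)
        ratings[loser] = r_l - k * (1.0 - e_w)  # zero-sum: loser drops by the same amount
    return ratings


if __name__ == "__main__":
    votes = [("model-a", "model-b"), ("model-b", "model-c"), ("model-a", "model-c")]
    for name, r in sorted(rate(votes).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {r:.1f}")
```

Each update depends on the rating gap between the two models. A model that beats stronger opponents therefore climbs faster than one that beats weaker ones.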

> OpenAI released a prompt engineering guide for getting better results from ChatGPT and other LLMs. It offers six key strategies: write clear instructions, provide reference text, split complex tasks into simpler subtasks, give the model time to 'think,' use external tools, and evaluate changes systematically. Each strategy comes with example prompts for different scenarios, helping users get more effective and accurate responses.
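
Three of these strategies can be combined in one prompt: clear instructions, reference text and time to 'think'. The sketch below assembles chat messages that give explicit instructions, fence the reference text with delimiters and ask the model to reason before it answers. The helper name `build_messages` and the instruction wording are hypothetical. The list of `role`/`content` dictionaries follows the common chat-message format, and the code makes no API call.

```python
# Hypothetical helper; the role/content shape follows the common chat format,
# but the instruction wording is illustrative only.
def build_messages(reference: str, question: str) -> list[dict[str, str]]:
    system = (
        # Clear instructions: what to do, what to use, and the output format.
        "Answer the question using only the reference text delimited by triple quotes. "
        "If the answer is not in the reference, reply 'Not found.' "
        # Time to 'think': ask for reasoning before the final answer.
        "Reason step by step first, then give the final answer on a line starting with 'Answer:'."
    )
    # Reference text, fenced with delimiters so it is not mistaken for instructions.
    user = f'"""{reference}"""\n\nQuestion: {question}'
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


if __name__ == "__main__":
    for msg in build_messages("Mario Party was released in Japan in 1998.", "When was Mario Party released?"):
        print(msg["role"], "->", msg["content"])
```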

> Hyperspace positions itself as the “Android for AI,” promoting decentralization by running models on a network of laptops rather than in centralized data centers. This peer-to-peer AI engine reduces reliance on costly infrastructure and aims to democratize access to AI. Its VectorRank technology, a vector-based system, improves how AI accesses information on the web, in contrast to link-based algorithms such as Google’s PageRank. The approach aims to move control from large corporations to the open-source community, which would be a significant change in how AI is developed.
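
This blurb does not describe VectorRank in enough detail to reproduce it. The general idea of vector-based retrieval is still easy to show: documents and queries are embedded as vectors and ranked by similarity, not by the link structure that PageRank scores. The sketch below is a generic cosine-similarity ranker that uses made-up 3-dimensional embeddings. It illustrates the general idea only and is not Hyperspace's algorithm.

```python
import math

# Generic embedding-based ranking. NOT Hyperspace's VectorRank; the vectors
# below are made up for illustration.
def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank(query: list[float], docs: dict[str, list[float]]) -> list[str]:
    """Order documents by similarity to the query, most similar first."""
    return sorted(docs, key=lambda name: cosine(query, docs[name]), reverse=True)


if __name__ == "__main__":
    docs = {
        "coral-reef-guide": [0.9, 0.1, 0.0],
        "bank-earnings": [0.0, 0.2, 0.9],
        "reef-ai-news": [0.8, 0.3, 0.1],
    }
    print(rank([1.0, 0.2, 0.0], docs))
```

PageRank scores a page by the links that point to it. This kind of ranker scores it only by how close its content vector is to the query.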

AI in Business and Finance

> OpenAI has suspended ByteDance's account for using GPT-generated data to secretly develop its own competing AI model, "Project Seed." As first reported by Alex Heath of The Verge, this use violates the terms of service of both OpenAI and Microsoft, whose Azure platform ByteDance used to access the models. Investigations focus on ByteDance's policy violations and its reported attempts to "whitewash" the evidence, raising significant concerns about IP practices and ethical standards in the competitive generative AI industry.

> A class action lawsuit accuses Google and Alphabet of antitrust violations, alleging that AI-generated search answers hurt news publishers’ bottom line by keeping readers from visiting the publications’ websites. Filed by Helena World Chronicle, the lawsuit claims that Google’s AI technologies, such as Search Generative Experience (SGE) and Bard, worsen the siphoning of content and ad revenue away from publishers. The suit also cites Google’s “Knowledge Graph” and “Featured Snippets” as features that redirect traffic away from original content providers. The concern is acute because Google-driven traffic makes up a significant share of publishers’ web visitors. The lawsuit seeks damages and an injunction that would require Google to obtain publishers’ consent before using their content for AI training, and to keep publishers who decline in its search results.

> Visa introduces Visa Provisioning Intelligence, an AI-powered system that detects and prevents token provisioning fraud in payment systems. It assigns each token provisioning request a risk score from 1 to 99, flagging requests that are likely fraudulent. In 2022, losses from token provisioning fraud reached about $450 million. The tool is meant to help financial institutions distinguish legitimate requests from fraudulent ones, strengthening trust in payment networks.
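
A risk score only becomes useful once an issuer maps it to a decision. The sketch below shows one hypothetical way to do that. Three things in it are illustrative choices, not Visa's published behavior: the assumption that higher scores mean higher risk, the 40 and 80 cut-offs, and the `step_up` action.

```python
# Hypothetical policy layer on top of a 1-99 risk score. Assumptions (not from
# Visa): higher scores mean higher risk, and the 40/80 cut-offs are arbitrary.
def provisioning_action(risk_score: int, review_at: int = 40, decline_at: int = 80) -> str:
    """Map a token provisioning risk score to an issuer decision."""
    if not 1 <= risk_score <= 99:
        raise ValueError(f"risk score must be in 1..99, got {risk_score}")
    if risk_score >= decline_at:
        return "decline"
    if risk_score >= review_at:
        return "step_up"  # e.g. ask the cardholder for extra verification
    return "approve"


if __name__ == "__main__":
    for score in (12, 55, 91):
        print(score, provisioning_action(score))
```

Keeping the thresholds as parameters lets each institution tune its own trade-off between blocked fraud and friction for legitimate customers.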

> EVE Online players have launched an AI-driven ‘empire’ using ChatGPT-4, creating the game’s first AI-led player corporation, Neural Nexus. The approach decentralizes leadership: every corporation member can turn to the AI for guidance, which keeps the group working toward a consistent vision. The AI is consulted on major decisions monthly, and a council records those decisions in a codex for future reference. The project opens new role-playing possibilities in the EVE Online community, but it is still in its early stages and its impact is not yet clear.

AI in Social, Environmental, and Global Issues

> NYC Councilwoman-elect Susan Zhuang admitted to using AI to answer questions from constituents and the media. The admission followed a New York Post report that Copyleaks had flagged her answers as AI-generated. Zhuang will represent a new Asian-majority district in Brooklyn. She said she uses AI as a tool for deeper understanding and personal growth, citing her immigrant background and the fact that English is her second language. Her AI-generated responses differed significantly from her usual communication style.

> The National Science Foundation (NSF) is introducing new guidelines for the use of generative AI in its merit review process. The guidelines prohibit reviewers from uploading proposal content to non-approved AI tools and encourage proposers to disclose any use of generative AI in their submissions. For now, NSF approves publicly accessible commercial generative AI tools only for use with public information. The agency is exploring ways to deploy generative AI safely within its own data ecosystem and is developing approved applications for it.

> Conservationists are using AI to protect coral reefs, which are vanishing under threats from climate change and other environmental pressures. The Coral Restoration Foundation (CRF) developed Cerulean AI, a tool that analyzes 3D reef maps to guide effective coral restoration. The tool replaces manual monitoring with AI-assisted analysis, enabling faster, better-informed decisions in coral conservation. CRF plans to release it to other groups to support reef restoration worldwide.

In partnership with SciSpace

Transform your academic research with SciSpace

Dive into a sea of knowledge now with access to over 282 million articles

Accelerate your literature reviews, effortlessly extract data from PDFs, and uncover new papers with cutting-edge AI search capabilities.

Simplify complex concepts and enhance your reading experience with AI Co-pilot, and craft the perfect narrative with the paraphraser tool.

Millions of researchers are already using SciSpace for their research.

Join SciSpace and start using your AI research assistant wherever you're reading online.

Thank you for supporting our sponsors!

5 new AI-powered tools from around the web

Taskade AI Mobile revolutionizes productivity with AI Project Studio for effortless project and workflow restructuring, combined with AI-enhanced task management, brainstorming and document collaboration on iOS and Android.

GrowEasy is a generative AI-powered app for quickly launching Facebook and Instagram lead generation campaigns. Built for small businesses, it automates ad creatives, ad copy, lead forms, and targeting in under 5 minutes.

Siena AI is an empathetic AI customer service agent for e-commerce. It combines a human touch with AI efficiency to provide detailed support around the clock.

InterviewJarvis helps you master tech job interviews. Practice more than 200 questions tailored to your resume, run realistic interview simulations, and refine your answers.

Roast My Web uses AI to review your website and suggest improvements to its design, user experience, conversions, and content on all devices.

Latest AI Research Papers

arXiv is a free online library where researchers share pre-publication papers.

This paper introduces a ReAct-style LLM agent that answers complex, open-ended questions by reasoning over and acting on external knowledge. It combines the ReAct and ReST methods for continuous self-improvement through AI feedback and synthetic data. It also distills large models into much smaller ones that maintain high performance. The work targets compositional question answering.
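
The ReST part of the method alternates between two steps. A grow step samples outputs from the current policy. An improve step keeps the outputs that a reward scores highly (here the reward stands in for AI feedback) and fine-tunes on them. The toy below shrinks the "policy" to a probability distribution over three candidate answers and reduces "fine-tuning" to refitting that distribution. The question, the answers and the reward function are hypothetical. The paper's ReAct trajectories and its distillation step are not modeled.

```python
import random
from collections import Counter

# Toy ReST-style loop. Everything here is hypothetical: one question, three
# candidate answers, and a reward function standing in for AI feedback.
CANDIDATES = ["Paris", "Lyon", "Marseille"]


def reward(answer: str) -> float:
    """Stand-in for an AI critic; it simply prefers the correct answer."""
    return 1.0 if answer == "Paris" else 0.0


def grow(policy: dict[str, float], n: int, rng: random.Random) -> list[str]:
    """Grow step: sample n answers from the current policy."""
    answers = list(policy)
    return rng.choices(answers, weights=[policy[a] for a in answers], k=n)


def improve(samples: list[str], threshold: float, smoothing: float = 1.0) -> dict[str, float]:
    """Improve step: keep high-reward samples and refit the policy to them (add-one smoothing)."""
    kept = Counter(s for s in samples if reward(s) >= threshold)
    total = sum(kept.values()) + smoothing * len(CANDIDATES)
    return {a: (kept[a] + smoothing) / total for a in CANDIDATES}


def rest(iterations: int = 3, n: int = 200, seed: int = 0) -> dict[str, float]:
    rng = random.Random(seed)
    policy = {a: 1 / len(CANDIDATES) for a in CANDIDATES}  # start uniform
    for _ in range(iterations):
        policy = improve(grow(policy, n, rng), threshold=0.5)
    return policy


if __name__ == "__main__":
    print(rest())
```

A few rounds are enough for the distribution to concentrate on the rewarded answer. This is the self-improvement loop in miniature.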

This paper introduces an efficient technique to initialize scaled-down transformer models using weights from larger pretrained counterparts, improving both training speed and performance. It uses neuron importance ranking and block removal to fit the larger model’s weights to the smaller model’s architecture. The method significantly accelerates training on tasks such as image classification and language modeling.
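
The two operations the summary names, ranking neurons by importance and removing blocks, can be sketched on toy square weight matrices. This version makes three hypothetical choices. Importance is approximated by summed absolute weights. The same top-k dimensions are kept in every block so the residual stream stays aligned. The caller chooses which blocks survive. The paper's actual importance score, and any rescaling of weights, may differ.

```python
# Toy sketch of initializing a smaller model from a larger one. Assumptions:
# each block is one square d x d matrix, importance is approximated by summed
# absolute weights (the paper's score may differ), and the caller picks blocks.
Matrix = list[list[float]]


def neuron_importance(blocks: list[Matrix]) -> list[float]:
    """Score each hidden dimension by the absolute weights in its row and column, summed over blocks."""
    d = len(blocks[0])
    scores = [0.0] * d
    for w in blocks:
        for i in range(d):
            scores[i] += sum(abs(w[i][j]) for j in range(d))  # row i
            scores[i] += sum(abs(w[j][i]) for j in range(d))  # column i
    return scores


def subclone(blocks: list[Matrix], keep_dims: int, keep_blocks: list[int]) -> tuple[list[int], list[Matrix]]:
    """Drop blocks, then keep the top-k most important dimensions in every kept block."""
    scores = neuron_importance(blocks)
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:keep_dims]
    idx = sorted(top)  # same indices in every layer so the residual stream lines up
    small = [[[blocks[b][i][j] for j in idx] for i in idx] for b in keep_blocks]
    return idx, small


if __name__ == "__main__":
    w = [[1.0 if (i in (1, 3) or j in (1, 3)) else 0.01 for j in range(4)] for i in range(4)]
    idx, small = subclone([w, w, w], keep_dims=2, keep_blocks=[0, 2])
    print(idx, small)
```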

This paper introduces a framework using Large Language Models (LLMs) to create extensive, high-quality conversational datasets. It leverages a Generator-Critic architecture to expand initial datasets while enhancing conversation quality. Key features include automated persona generation, user profile pairing, and iterative improvement of conversation samples. This method significantly boosts the dataset’s relevance and faithfulness to user personas, contributing to more engaging and personalized AI interactions.
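
The Generator-Critic loop can be reduced to a few lines. A generator proposes several candidate conversations for a persona, using recent dataset entries as examples. A critic keeps the best candidate if it clears a quality bar, so accepted samples feed later rounds. In the sketch below, two pieces are hypothetical stand-ins for the LLM generator and critic used in the paper: the critic's faithfulness score (the share of persona facts mentioned) and `toy_generate`.

```python
from typing import Callable

# Toy Generator-Critic loop. Assumptions: a conversation is a list of turns,
# the critic scores faithfulness as the share of persona facts mentioned, and
# toy_generate stands in for an LLM generator. None of this is the paper's code.
Conversation = list[str]
Generator = Callable[[list[str], list[Conversation], int], Conversation]


def critic(persona: list[str], conv: Conversation) -> float:
    if not persona:
        return 0.0
    text = " ".join(conv).lower()
    return sum(fact.lower() in text for fact in persona) / len(persona)


def expand(dataset: list[Conversation], personas: list[list[str]], generate: Generator,
           n_candidates: int = 4, min_score: float = 0.5) -> list[Conversation]:
    """For each persona, generate candidates from recent examples and keep the critic's best pick."""
    for persona in personas:
        examples = dataset[-2:]  # few-shot context drawn from the growing dataset
        candidates = [generate(persona, examples, i) for i in range(n_candidates)]
        best = max(candidates, key=lambda c: critic(persona, c))
        if critic(persona, best) >= min_score:
            dataset.append(best)  # accepted samples seed later rounds
    return dataset


def toy_generate(persona: list[str], examples: list[Conversation], i: int) -> Conversation:
    # Candidate i mentions the first i persona facts (a crude quality knob).
    turns = [f"I really enjoy {fact}." for fact in persona[:i]]
    return turns or ["Hello!"]


if __name__ == "__main__":
    print(expand([["Hello!"]], [["hiking", "jazz"]], toy_generate))
```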

The paper explores improving the reliability of large language models (LLMs) for content generation through self-evaluation techniques. Traditional sequence-level probability metrics like perplexity are inadequate for judging generation quality. This study introduces methods that convert open-ended tasks into token-level predictions, taking advantage of LLMs’ stronger calibration at the token level. When instructed to self-evaluate using multi-way comparison or point-wise evaluation, the model can gauge its confidence in generated content more accurately. The approach, tested on PaLM-2 and GPT-3 models using the TruthfulQA and TL;DR benchmarks, improves accuracy and correlates better with content quality.
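
Both self-evaluation formats reduce to reading probabilities off a few answer tokens. In the sketch below, multi-way comparison takes the softmax probability of the option letter the model chose. Point-wise evaluation takes P(Yes) renormalized over Yes/No. Answers with low confidence are then withheld. The logits are assumed to come from the model's token log-probabilities, and the 0.7 abstention threshold is an arbitrary illustration.

```python
import math
from typing import Optional

# Sketch of token-level self-evaluation scores. Assumption: the logits are
# read from the model for the answer tokens (option letters, or "Yes"/"No").
def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pointwise_confidence(logit_yes: float, logit_no: float) -> float:
    """Point-wise: P("Yes") renormalized over {"Yes", "No"}."""
    return softmax([logit_yes, logit_no])[0]


def multichoice_confidence(option_logits: list[float], chosen: int) -> float:
    """Multi-way comparison: probability of the chosen option letter among all options."""
    return softmax(option_logits)[chosen]


def selective_answer(answer: str, confidence: float, threshold: float = 0.7) -> Optional[str]:
    """Return the answer only if confidence clears the (hypothetical) threshold; otherwise abstain."""
    return answer if confidence >= threshold else None


if __name__ == "__main__":
    conf = pointwise_confidence(2.0, 0.0)
    print(round(conf, 3), selective_answer("Paris", conf))
```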

The paper critically analyzes how well unsupervised methods discover latent knowledge in LLMs. It contends that these methods tend to identify prominent features of LLM activations, which do not necessarily represent actual knowledge. The authors provide theoretical proofs and empirical evidence showing that arbitrary features can satisfy the consistency structure of a well-known unsupervised knowledge-elicitation method, contrast-consistent search. Their experiments show that, in practice, these unsupervised methods often yield classifiers that predict features unrelated to knowledge, which challenges the effectiveness of current unsupervised approaches to knowledge discovery in LLMs.
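
The paper's core argument is easy to check numerically. Contrast-consistent search trains a probe on contrast pairs, meaning a statement answered "yes" (x+) and the same statement answered "no" (x-). The probe minimizes a consistency term plus a confidence term. The sketch below implements that loss. It shows that a probe tracking an arbitrary feature unrelated to truth reaches zero loss, just as a perfect truth probe would. The example feature, whether a statement mentions "banana", is hypothetical.

```python
# CCS objective: consistency (p(x+) - (1 - p(x-)))^2 plus confidence
# min(p(x+), p(x-))^2, averaged over contrast pairs.
def ccs_loss(p_pos: list[float], p_neg: list[float]) -> float:
    total = 0.0
    for pp, pn in zip(p_pos, p_neg):
        consistency = (pp - (1.0 - pn)) ** 2
        confidence = min(pp, pn) ** 2
        total += consistency + confidence
    return total / len(p_pos)


def banana_probe(statements: list[str]) -> tuple[list[float], list[float]]:
    # Hypothetical probe tracking a feature unrelated to truth: mentions "banana"?
    p_pos = [1.0 if "banana" in s.lower() else 0.0 for s in statements]
    p_neg = [1.0 - p for p in p_pos]  # flipped on the negated half of each pair
    return p_pos, p_neg


if __name__ == "__main__":
    statements = ["Bananas are yellow.", "The sky is green.", "Paris is in France."]
    print(ccs_loss(*banana_probe(statements)))  # the arbitrary feature is "perfect"
    print(ccs_loss([0.5] * 3, [0.5] * 3))       # an unconfident probe is penalized
```

Any feature that is exactly 0 or 1 and flips between x+ and x- satisfies both terms. The loss alone therefore cannot distinguish knowledge from an arbitrary salient feature.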

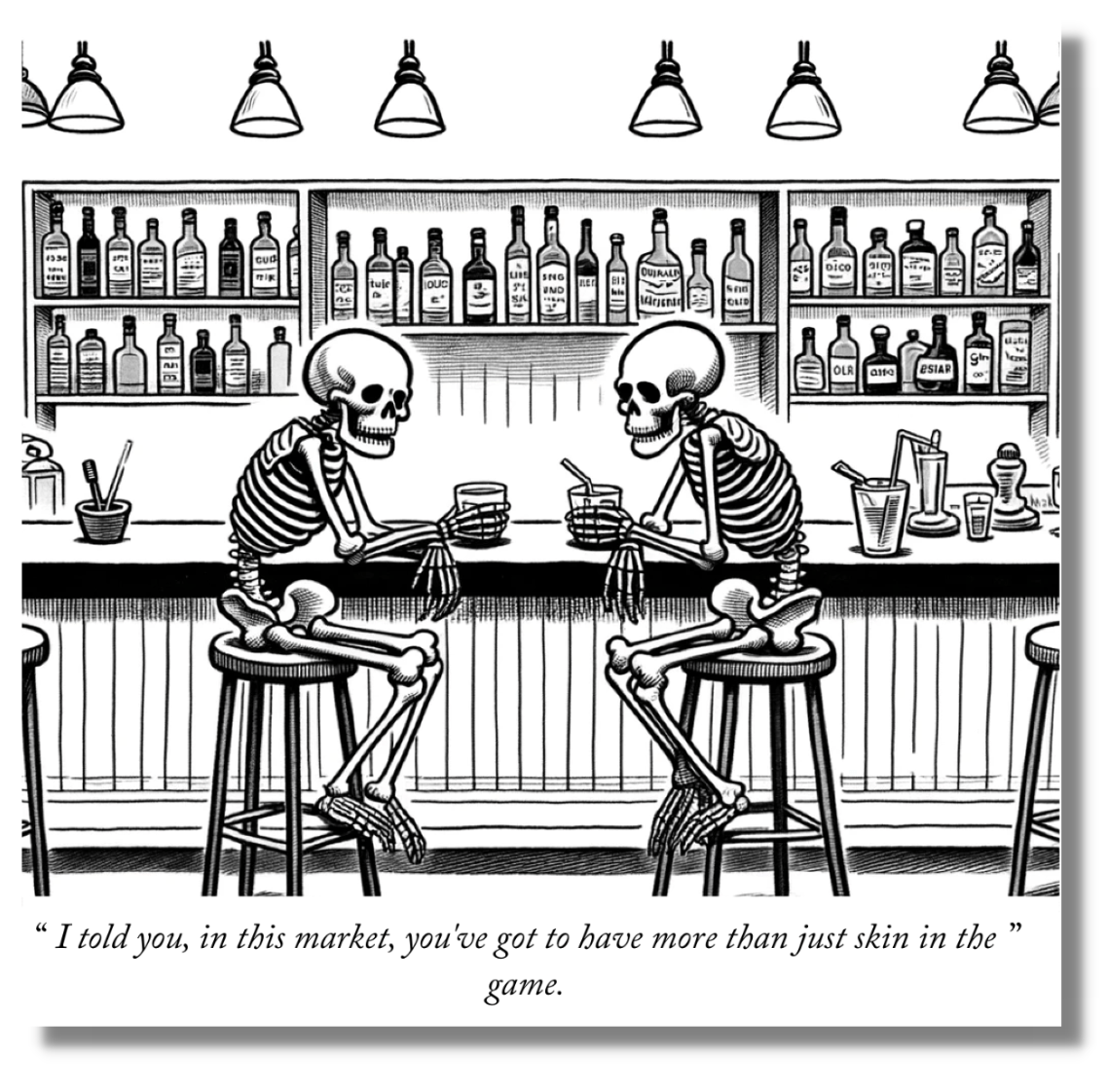

ChatGPT + DALL-E 3 Attempts Comics

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, apply here.