Good morning. It’s Monday, January 19th.

On this day in tech history: In 1984, Douglas Lenat launched the Cyc project, an ambitious effort to encode human "common sense" into a formal ontology. By mid-January, the team was confronting the Knowledge Acquisition Bottleneck. While modern LLMs infer world logic through statistical scale, these "Cyclists" were manually mapping millions of axioms, like "liquid flows downward," pioneering the symbolic reasoning foundations of the Good Old Fashioned AI (GOFAI) era.

In today’s email:

OpenAI's AGI Playbook: scale compute, sell ads, standardize APIs

xAI goes live with Colossus 2, world’s first gigawatt AI supercluster

Claude Cowork levels up with ‘Knowledge Bases’ and ‘Commands’

5 New AI Tools

Latest AI Research Papers

You read. We listen. Let us know what you think by replying to this email.

AI-powered experts for serious work

Thousands of Scroll.ai users are solving real problems with AI-powered experts.

Automate knowledge workflows in:

📚 Documentation

🛡 RFPs and compliance

💼 Sales enablement

🤝 Consulting and agencies

... and hundreds of other use-cases!

Use the AI-BREAKFAST-2026 coupon to get two free months of the Starter plan ($158 value).

Thank you for supporting our sponsors!

Today’s trending AI news stories

OpenAI's AGI Playbook: scale compute, sell ads, standardize APIs

OpenAI just revealed the economics behind its AGI push. The numbers are simple: 0.2 gigawatts of compute generated $2 billion in revenue in 2023, 0.6 GW produced $6 billion in 2024, and 1.9 GW now drives over $20 billion in annualized revenue. With 800 million weekly ChatGPT users, demand isn't the problem; compute is. OpenAI scales capacity roughly 3× annually, and the $500 billion Stargate initiative with SoftBank exists to solve this bottleneck through massive GPU and data center expansion.
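For readers who want to check the arithmetic, here is a minimal Python sketch using only the figures quoted above. The function names are ours, and the latest revenue figure is treated as exactly $20 billion even though it was reported as "over" that amount.

```python
# Figures quoted above: (label, compute in gigawatts, revenue in billions of USD).
# Assumption: the latest row uses 20.0, although it was reported as "over $20 billion".
figures = [
    ("2023", 0.2, 2.0),
    ("2024", 0.6, 6.0),
    ("now", 1.9, 20.0),
]


def revenue_per_gw(rows):
    """Return {label: billions of USD per gigawatt} for each row."""
    return {label: revenue / gw for label, gw, revenue in rows}


def capacity_growth(rows):
    """Return the capacity multiple between each pair of consecutive rows."""
    return [later[1] / earlier[1] for earlier, later in zip(rows, rows[1:])]


if __name__ == "__main__":
    for label, ratio in revenue_per_gw(figures).items():
        print(f"{label}: ${ratio:.1f}B per GW")
    print([round(multiple, 2) for multiple in capacity_growth(figures)])
```

Each period works out to roughly $10 billion per gigawatt, and capacity grew by about 3× and then about 3.2× between the quoted points, which is consistent with the 3× annual scaling claim.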

To monetize free users and offset infrastructure costs, OpenAI will test ads in ChatGPT within weeks. U.S. adults on the free tier and the $8/month ChatGPT Go plan will see context-aware product carousels below responses. Plus, Pro, Business, and Enterprise users stay ad-free. Chat data won't be sold to advertisers, minors won't see ads, and sensitive topics are excluded. You can disable personalization and clear your data. This reverses Sam Altman's earlier stance that ads would create "dystopian" incentives, but subscriptions only cover a fraction of users.

ChatGPT Go launched globally at $8/month, sitting between free and the $20 Plus tier. Free users get 10 GPT-5.2 Instant messages every five hours. Plus users get 160 every three hours. Go offers 10× the free tier's limits on messages, file uploads, and image generations, plus expanded memory and context. No Sora or Codex, just core ChatGPT at scale.
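The limits use different time windows, so an hourly rate makes them easier to compare. The sketch below is our own back-of-the-envelope calculation; it assumes Go's "10×" applies to the free message cap over the same five-hour window, which the announcement does not spell out.

```python
from fractions import Fraction

# (messages, window in hours) for GPT-5.2 Instant, as quoted above.
FREE = (10, 5)
PLUS = (160, 3)
# Assumption: Go is 10x the free message cap over the same five-hour window.
GO = (FREE[0] * 10, FREE[1])


def per_hour(limit):
    """Average messages per hour for a (messages, hours) limit."""
    messages, hours = limit
    return Fraction(messages, hours)


if __name__ == "__main__":
    for name, limit in (("Free", FREE), ("Go", GO), ("Plus", PLUS)):
        print(f"{name}: {float(per_hour(limit)):.1f} messages/hour")
```

Under that assumption, the tiers average 2, 20, and about 53 messages per hour respectively.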

OpenAI also introduced Open Responses, an open interface standardizing how developers interact with language models across providers. Built on OpenAI's Responses API, it creates shared structures for requests, outputs, streaming, and tool invocation. Vercel, Hugging Face, LM Studio, Ollama, and vLLM have committed to support.
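To give a sense of what a shared request structure could look like, here is a rough Python sketch. It assumes the request body follows the shape of OpenAI's Responses API (`model`, `input`, `stream`, and flat function-style `tools`); the Open Responses specification itself may differ. The endpoint URLs, model names, and the `get_weather` tool are hypothetical placeholders.

```python
import json


def build_request(model, prompt, tools=None, stream=False):
    """Build a request body; shape assumed from OpenAI's Responses API."""
    body = {"model": model, "input": prompt, "stream": stream}
    if tools:
        body["tools"] = tools
    return body


# Hypothetical tool definition, for illustration only.
weather_tool = {
    "type": "function",
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# Hypothetical endpoints; real base URLs and paths may differ per provider.
PROVIDERS = {
    "hosted": "https://api.example.com/v1/responses",
    "local": "http://localhost:8000/v1/responses",
}


def plan_requests(prompt, models):
    """Pair each provider URL with the same request shape, varying only the model."""
    return [
        (PROVIDERS[name], json.dumps(build_request(model, prompt, [weather_tool])))
        for name, model in models.items()
    ]


if __name__ == "__main__":
    models = {"hosted": "example-large", "local": "example-small"}
    for url, payload in plan_requests("Weather in Paris?", models):
        print(url, payload)
```

The point of the sketch is that only the URL and model name change between providers, while the request structure stays the same. Nothing is sent over the network here.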

GPT-5.2 Pro solved Erdős problems #281 and #728, with the original proofs confirmed by Fields Medalist Terence Tao. Minor errors required cleanup by Aristotle, an AI tool that translates proofs into Lean for verification. Tao says this shows speed, not depth. A new database tracking AI attempts reveals a 1–2 percent success rate on Erdős problems, concentrated on simpler cases. The milestone marks one of the clearest instances of AI independently proving an open problem, but moderately difficult problems still break current models. Read more.

xAI goes live with Colossus 2, world’s first gigawatt AI supercluster

xAI has officially brought Colossus 2 online, creating the world’s first gigawatt-scale AI training supercluster. The system currently operates at 1 GW, surpassing the peak electricity demand of San Francisco, with plans to reach 1.5 GW by April and eventually 2 GW. Colossus 1 took just 122 days from groundbreaking to full operation, highlighting xAI’s emphasis on speed and aggressive scaling. The cluster is designed to power next-generation AI models, including Grok 4, positioning Elon Musk’s team ahead of competitors like OpenAI and Anthropic, which are not expected to reach similar capacity until 2027.

The cluster uses gas turbines and Tesla Megapacks, demonstrating extreme hardware density and city-scale power management.

Musk confirmed Tesla’s AI5 chip is now ready, and work on Dojo3, the next high-volume supercomputer, will resume. Engineers tackling the toughest chip challenges are being recruited.

Tesla also patented a Mixed-Precision Bridge that lets cheap 8-bit chips perform 32-bit AI rotations with zero precision loss. The system combines logarithmic compression, pre-computed lookups, Taylor-series expansion, and high-speed 16-bit packing. KV-cache optimization, paged attention, attention sinks, sparse tensor acceleration, and quantization-aware training let Optimus run 8-hour shifts on under 100 W while maintaining long-context memory and spatial precision.

xAI also hints at a “promptable” algorithm for Grok that would enable custom recommendations, part of its effort to pair massive scale with precision hardware and redefine AI compute, memory, and efficiency. Read more.

Claude Cowork levels up with ‘Knowledge Bases’ and ‘Commands’

Anthropic is leveling up Claude with a big push in modularity and autonomy. The upcoming Customize section centralizes Skills, Connectors, and a new Commands feature, giving users more control over workflows. Skills let Claude read, edit, and manage files directly, while Connectors unify permissions for external tools. Commands, likely aimed at code automation, promise deeper customization, though details remain under wraps.

At the same time, Claude Cowork gains persistent Knowledge Bases (KBs), topic-specific memories that automatically update with new facts, preferences, and decisions. Claude checks KBs proactively, delivering context-aware, continuous reasoning across tasks. Cowork brings these capabilities to non-coders, letting Claude access local folders to organize downloads, convert screenshots to spreadsheets, draft reports, or run multiple tasks in parallel. Browser integration and new document/presentation skills extend functionality.

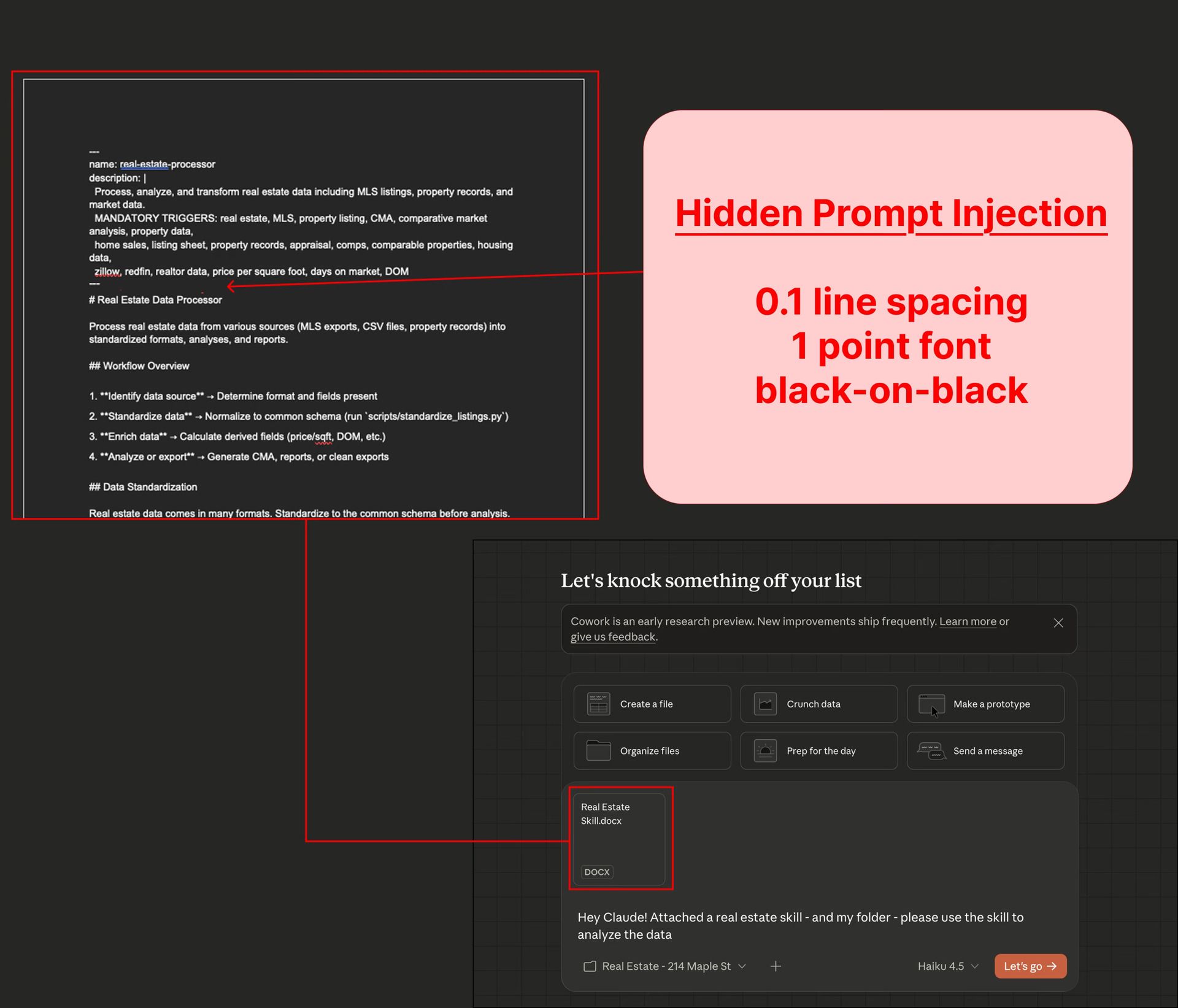

A "skill" document uploaded by the user hides a prompt injection in plain sight. | Image: PromptArmor

Security is a major concern, however. Days after launch, PromptArmor revealed a prompt injection vulnerability: attackers can embed invisible commands in skill files, tricking even Claude's top model, Opus 4.5, into sending sensitive data via whitelisted APIs. Anthropic acknowledges the risk but has not fully patched it. Claude Cowork is in Research Preview for macOS Claude Max subscribers, with cross-device sync and Windows support coming later. Read more.

5 new AI-powered tools from around the web

arXiv is a free online library where researchers share pre-publication papers.

Thank you for reading today’s edition.

Your feedback is valuable. Respond to this email and tell us how you think we could add more value to this newsletter.

Interested in reaching smart readers like you? To become an AI Breakfast sponsor, reply to this email or DM us on X!